History News:

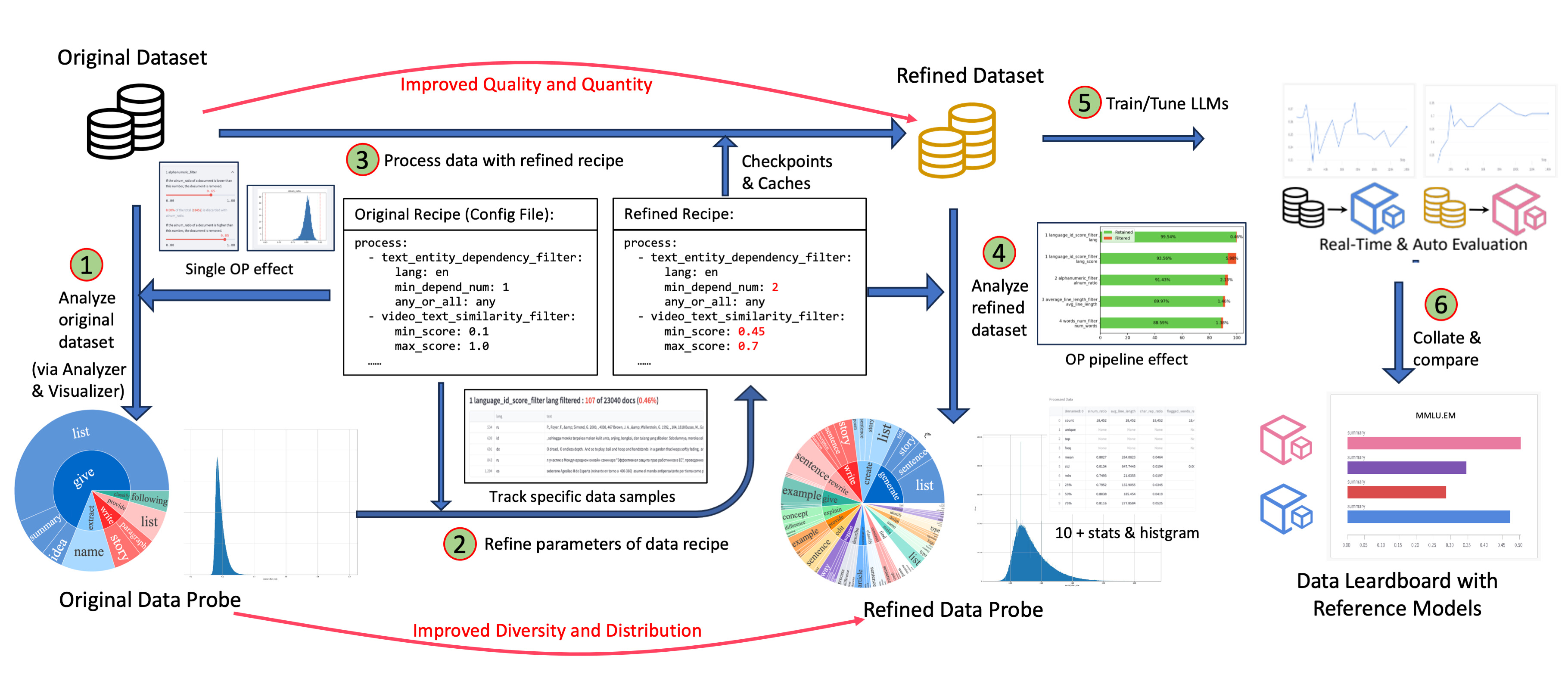

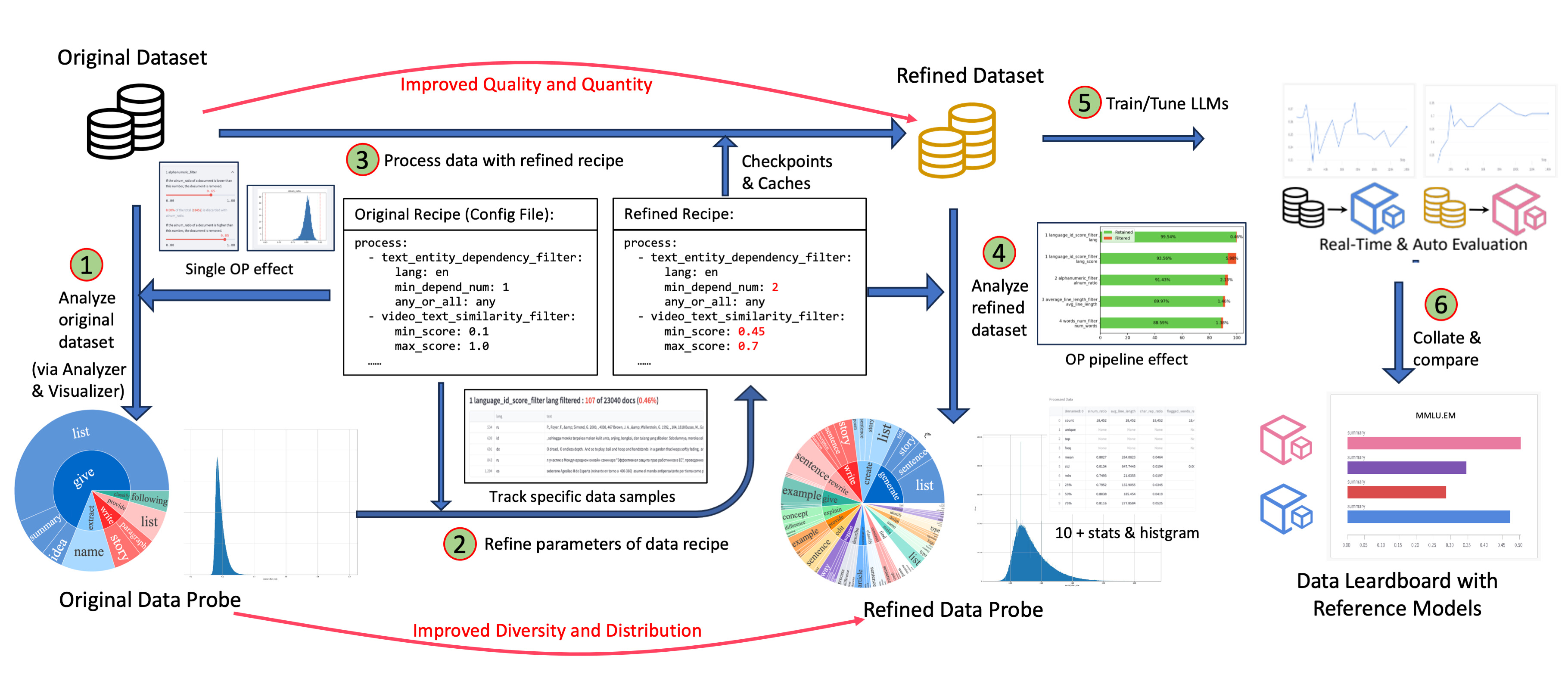

> +- [2024-08-09] We propose Img-Diff, which enhances the performance of multimodal large language models through *contrastive data synthesis*, achieving a score that is 12 points higher than GPT-4V on the [MMVP benchmark](https://tsb0601.github.io/mmvp_blog/). See more details in our [paper](https://arxiv.org/abs/2408.04594), and download the dataset from [huggingface](https://huggingface.co/datasets/datajuicer/Img-Diff) and [modelscope](https://modelscope.cn/datasets/Data-Juicer/Img-Diff). +- [2024-07-24] "Tianchi Better Synth Data Synthesis Competition for Multimodal Large Models" — Our 4th data-centric LLM competition has kicked off! Please visit the competition's [official website](https://tianchi.aliyun.com/competition/entrance/532251) for more information. +- [2024-07-17] We utilized the Data-Juicer [Sandbox Laboratory Suite](https://github.com/modelscope/data-juicer/blob/main/docs/Sandbox.md) to systematically optimize data and models through a co-development workflow between data and models, achieving a new top spot on the [VBench](https://huggingface.co/spaces/Vchitect/VBench_Leaderboard) text-to-video leaderboard. The related achievements have been compiled and published in a [paper](http://arxiv.org/abs/2407.11784), and the model has been released on the [ModelScope](https://modelscope.cn/models/Data-Juicer/Data-Juicer-T2V) and [HuggingFace](https://huggingface.co/datajuicer/Data-Juicer-T2V) platforms. +- [2024-07-12] Our *awesome list of MLLM-Data* has evolved into a systemic [survey](https://arxiv.org/abs/2407.08583) from model-data co-development perspective. Welcome to [explore](docs/awesome_llm_data.md) and contribute! +- [2024-06-01] ModelScope-Sora "Data Directors" creative sprint—Our third data-centric LLM competition has kicked off! Please visit the competition's [official website](https://tianchi.aliyun.com/competition/entrance/532219) for more information. - [2024-03-07] We release **Data-Juicer [v0.2.0](https://github.com/alibaba/data-juicer/releases/tag/v0.2.0)** now! In this new version, we support more features for **multimodal data (including video now)**, and introduce **[DJ-SORA](docs/DJ_SORA.md)** to provide open large-scale, high-quality datasets for SORA-like models. - [2024-02-20] We have actively maintained an *awesome list of LLM-Data*, welcome to [visit](docs/awesome_llm_data.md) and contribute! @@ -67,83 +70,87 @@ Besides, our paper is also updated to [v3](https://arxiv.org/abs/2309.02033). Table of Contents ================= -- [Data-Juicer: A One-Stop Data Processing System for Large Language Models](#data-juicer--a-one-stop-data-processing-system-for-large-language-models) - - [News](#news) -- [Table of Contents](#table-of-contents) - - [Features](#features) - - [Documentation Index ](#documentation-index-) - - [Demos](#demos) +- [News](#news) +- [Why Data-Juicer?](#why-data-juicer) +- [DJ-Cookbook](#dj-cookbook) + - [Curated Resources](#curated-resources) + - [Coding with Data-Juicer (DJ)](#coding-with-data-juicer-dj) + - [Use Cases \& Data Recipes](#use-cases--data-recipes) + - [Interactive Examples](#interactive-examples) +- [Installation](#installation) - [Prerequisites](#prerequisites) - - [Installation](#installation) - - [From Source](#from-source) - - [Using pip](#using-pip) - - [Using Docker](#using-docker) - - [Installation check](#installation-check) - - [Quick Start](#quick-start) - - [Data Processing](#data-processing) - - [Distributed Data Processing](#distributed-data-processing) - - [Data Analysis](#data-analysis) - - [Data Visualization](#data-visualization) - - [Build Up Config Files](#build-up-config-files) - - [Sandbox](#sandbox) - - [Preprocess Raw Data (Optional)](#preprocess-raw-data-optional) - - [For Docker Users](#for-docker-users) - - [Data Recipes](#data-recipes) - - [License](#license) - - [Contributing](#contributing) - - [Acknowledgement](#acknowledgement) - - [References](#references) - - -## Features - - + - [From Source](#from-source) + - [Using pip](#using-pip) + - [Using Docker](#using-docker) + - [Installation check](#installation-check) + - [For Video-related Operators](#for-video-related-operators) +- [Quick Start](#quick-start) + - [Data Processing](#data-processing) + - [Distributed Data Processing](#distributed-data-processing) + - [Data Analysis](#data-analysis) + - [Data Visualization](#data-visualization) + - [Build Up Config Files](#build-up-config-files) + - [Sandbox](#sandbox) + - [Preprocess Raw Data (Optional)](#preprocess-raw-data-optional) + - [For Docker Users](#for-docker-users) +- [License](#license) +- [Contributing](#contributing) +- [Acknowledgement](#acknowledgement) +- [References](#references) + + +## Why Data-Juicer? + + - **Systematic & Reusable**: - Empowering users with a systematic library of 80+ core [OPs](docs/Operators.md), 20+ reusable [config recipes](configs), and 20+ feature-rich - dedicated [toolkits](#documentation), designed to - function independently of specific multimodal LLM datasets and processing pipelines. - -- **Data-in-the-loop & Sandbox**: Supporting one-stop data-model collaborative development, enabling rapid iteration - through the [sandbox laboratory](docs/Sandbox.md), and providing features such as feedback loops based on data and model, - visualization, and multidimensional automatic evaluation, so that you can better understand and improve your data and models. -  - -- **Towards production environment**: Providing efficient and parallel data processing pipelines (Aliyun-PAI\Ray\Slurm\CUDA\OP Fusion) - requiring less memory and CPU usage, optimized with automatic fault-toleration. -  + Empowering users with a systematic library of 100+ core [OPs](docs/Operators.md), and 50+ reusable config recipes and + dedicated toolkits, designed to + function independently of specific multimodal LLM datasets and processing pipelines. Supporting data analysis, cleaning, and synthesis in pre-training, post-tuning, en, zh, and more scenarios. -- **Comprehensive Data Processing Recipes**: Offering tens of [pre-built data - processing recipes](configs/data_juicer_recipes/README.md) for pre-training, fine-tuning, en, zh, and more scenarios. Validated on - reference LLaMA and LLaVA models. -  +- **User-Friendly & Extensible**: + Designed for simplicity and flexibility, with easy-start [guides](#quick-start), and [DJ-Cookbook](#dj-cookbook) containing fruitful demo usages. Feel free to [implement your own OPs](docs/DeveloperGuide.md#build-your-own-ops) for customizable data processing. -- **Flexible & Extensible**: Accommodating most types of data formats (e.g., jsonl, parquet, csv, ...) and allowing flexible combinations of OPs. Feel free to [implement your own OPs](docs/DeveloperGuide.md#build-your-own-ops) for customizable data processing. +- **Efficient & Robust**: Providing performance-optimized [parallel data processing](docs/Distributed.md) (Aliyun-PAI\Ray\CUDA\OP Fusion), + faster with less resource usage, verified in large-scale production environments. -- **User-Friendly Experience**: Designed for simplicity, with [comprehensive documentation](#documents), [easy start guides](#quick-start) and [demo configs](configs/README.md), and intuitive configuration with simple adding/removing OPs from [existing configs](configs/config_all.yaml). +- **Effect-Proven & Sandbox**: Supporting data-model co-development, enabling rapid iteration + through the [sandbox laboratory](docs/Sandbox.md), and providing features such as feedback loops and visualization, so that you can better understand and improve your data and models. Many effect-proven datasets and models have been derived from DJ, in scenarios such as pre-training, text-to-video and image-to-text generation. +  -## Documentation Index +## DJ-Cookbook +### Curated Resources +- [KDD-Tutorial](https://modelscope.github.io/data-juicer/_static/tutorial_kdd24.html) +- [Awesome LLM-Data](docs/awesome_llm_data.md) +- ["Bad" Data Exhibition](docs/BadDataExhibition.md) -- [Overview](README.md) +### Coding with Data-Juicer (DJ) +- [Overview of DJ](README.md) - [Operator Zoo](docs/Operators.md) -- [Configs](configs/README.md) +- [Quick Start](#quick-start) +- [Configuration](configs/README.md) - [Developer Guide](docs/DeveloperGuide.md) - [API references](https://modelscope.github.io/data-juicer/) -- [KDD-Tutorial](https://modelscope.github.io/data-juicer/_static/tutorial_kdd24.html) -- ["Bad" Data Exhibition](docs/BadDataExhibition.md) -- [Awesome LLM-Data](docs/awesome_llm_data.md) -- Dedicated Toolkits - - [Quality Classifier](tools/quality_classifier/README.md) - - [Auto Evaluation](tools/evaluator/README.md) - - [Preprocess](tools/preprocess/README.md) - - [Postprocess](tools/postprocess/README.md) +- [Preprocess Tools](tools/preprocess/README.md) +- [Postprocess Tools](tools/postprocess/README.md) +- [Format Conversion](tools/fmt_conversion/README.md) +- [Sandbox](docs/Sandbox.md) +- [Quality Classifier](tools/quality_classifier/README.md) +- [Auto Evaluation](tools/evaluator/README.md) +- [Third-parties Integration](thirdparty/LLM_ecosystems/README.md) + +### Use Cases & Data Recipes +- [Recipes for data process in BLOOM](configs/reproduced_bloom/README.md) +- [Recipes for data process in RedPajama](configs/reproduced_redpajama/README.md) +- [Refined recipes for pre-training text data](configs/data_juicer_recipes/README.md) +- [Refined recipes for fine-tuning text data](configs/data_juicer_recipes/README.md#before-and-after-refining-for-alpaca-cot-dataset) +- [Refined recipes for pre-training multi-modal data](configs/data_juicer_recipes/README.md#before-and-after-refining-for-multimodal-dataset) - [DJ-SORA](docs/DJ_SORA.md) -- [Third-parties (LLM Ecosystems)](thirdparty/README.md) -## Demos +### Interactive Examples - Introduction to Data-Juicer [[ModelScope](https://modelscope.cn/studios/Data-Juicer/overview_scan/summary)] [[HuggingFace](https://huggingface.co/spaces/datajuicer/overview_scan)] - Data Visualization: - Basic Statistics [[ModelScope](https://modelscope.cn/studios/Data-Juicer/data_visulization_statistics/summary)] [[HuggingFace](https://huggingface.co/spaces/datajuicer/data_visualization_statistics)] @@ -161,13 +168,13 @@ Table of Contents - Data Sampling and Mixture [[ModelScope](https://modelscope.cn/studios/Data-Juicer/data_mixture/summary)] [[HuggingFace](https://huggingface.co/spaces/datajuicer/data_mixture)] - Data Processing Loop [[ModelScope](https://modelscope.cn/studios/Data-Juicer/data_process_loop/summary)] [[HuggingFace](https://huggingface.co/spaces/datajuicer/data_process_loop)] -## Prerequisites +## Installation + +### Prerequisites - Recommend Python>=3.9,<=3.10 - gcc >= 5 (at least C++14 support) -## Installation - ### From Source - Run the following commands to install the latest basic `data_juicer` version in @@ -181,8 +188,8 @@ pip install -v -e . ```shell cd

- More related papers from Data-Juicer Team:

+

@@ -501,3 +501,4 @@ If you find our work useful for your research or development, please kindly cite

+

diff --git a/README_ZH.md b/README_ZH.md

index 27bcb72f2..9b1fa7f52 100644

--- a/README_ZH.md

+++ b/README_ZH.md

@@ -1,53 +1,58 @@

-[[English Page]](README.md) | [[文档索引]](#documents) | [[API]](https://modelscope.github.io/data-juicer) | [[DJ-SORA]](docs/DJ_SORA_ZH.md) | [[Awesome List]](docs/awesome_llm_data.md)

+[[英文主页]](README.md) | [[DJ-Cookbook]](#dj-cookbook) | [[算子池]](docs/Operators.md) | [[API]](https://modelscope.github.io/data-juicer) | [[Awesome LLM Data]](docs/awesome_llm_data.md)

-# Data-Juicer: 为大模型提供更高质量、更丰富、更易“消化”的数据

+# Data Processing for and with Foundation Models

- More related papers from Data-Juicer Team:

+ More related papers from the Data-Juicer Team:

>

+- [Data-Juicer 2.0: Cloud-Scale Adaptive Data Processing for Foundation Models](https://dail-wlcb.oss-cn-wulanchabu.aliyuncs.com/data_juicer/DJ2.0_arXiv_preview.pdf)

+

- [Data-Juicer Sandbox: A Comprehensive Suite for Multimodal Data-Model Co-development](https://arxiv.org/abs/2407.11784)

- [The Synergy between Data and Multi-Modal Large Language Models: A Survey from Co-Development Perspective](https://arxiv.org/abs/2407.08583)

- [ImgDiff: Contrastive Data Synthesis for Vision Large Language Models](https://arxiv.org/abs/2408.04594)

+- [HumanVBench: Exploring Human-Centric Video Understanding Capabilities of MLLMs with Synthetic Benchmark Data](https://arxiv.org/abs/2412.17574)

+

- [Data Mixing Made Efficient: A Bivariate Scaling Law for Language Model Pretraining](https://arxiv.org/abs/2405.14908)

+

+

[](https://pypi.org/project/py-data-juicer)

[](https://hub.docker.com/r/datajuicer/data-juicer)

-[](docs/DeveloperGuide_ZH.md)

-[](docs/DeveloperGuide_ZH.md)

+[](#dj-cookbook)

+[](#dj-cookbook)

[](https://modelscope.cn/studios?name=Data-Jiucer&page=1&sort=latest&type=1)

[](https://huggingface.co/spaces?&search=datajuicer)

-[](README.md#documents)

-[](#documents)

-[](https://modelscope.github.io/data-juicer/)

-[](https://arxiv.org/abs/2309.02033)

+[](#dj-cookbook)

+[](README_ZH.md#dj-cookbook)

+[](docs/Operators.md)

+[-B31B1B?logo=arxiv&logoColor=red)](https://arxiv.org/abs/2309.02033)

+[](https://dail-wlcb.oss-cn-wulanchabu.aliyuncs.com/data_juicer/DJ2.0_arXiv_preview.pdf)

-Data-Juicer 是一个一站式**多模态**数据处理系统,旨在为大语言模型 (LLM) 提供更高质量、更丰富、更易“消化”的数据。

+Data-Juicer 是一个一站式系统,面向大模型的文本及多模态数据处理。我们提供了一个基于 JupyterLab 的 [Playground](http://8.138.149.181/),您可以从浏览器中在线试用 Data-Juicer。 如果Data-Juicer对您的研发有帮助,请支持加星(自动订阅我们的新发布)、以及引用我们的[工作](#参考文献) 。

-我们提供了一个基于 JupyterLab 的 [Playground](http://8.138.149.181/),您可以从浏览器中在线试用 Data-Juicer。 如果Data-Juicer对您的研发有帮助,请引用我们的[工作](#参考文献) 。

+[阿里云人工智能平台 PAI](https://www.aliyun.com/product/bigdata/learn) 已引用Data-Juicer并将其能力集成到PAI的数据处理产品中。PAI提供包含数据集管理、算力管理、模型工具链、模型开发、模型训练、模型部署、AI资产管理在内的功能模块,为用户提供高性能、高稳定、企业级的大模型工程化能力。数据处理的使用文档请参考:[PAI-大模型数据处理](https://help.aliyun.com/zh/pai/user-guide/components-related-to-data-processing-for-foundation-models/?spm=a2c4g.11186623.0.0.3e9821a69kWdvX)。

-[阿里云人工智能平台 PAI](https://www.aliyun.com/product/bigdata/learn) 已引用我们的工作,将Data-Juicer的能力集成到PAI的数据处理产品中。PAI提供包含数据集管理、算力管理、模型工具链、模型开发、模型训练、模型部署、AI资产管理在内的功能模块,为用户提供高性能、高稳定、企业级的大模型工程化能力。数据处理的使用文档请参考:[PAI-大模型数据处理](https://help.aliyun.com/zh/pai/user-guide/components-related-to-data-processing-for-foundation-models/?spm=a2c4g.11186623.0.0.3e9821a69kWdvX)。

-

-Data-Juicer正在积极更新和维护中,我们将定期强化和新增更多的功能和数据菜谱。热烈欢迎您加入我们(issues/PRs/[Slack频道](https://join.slack.com/t/data-juicer/shared_invite/zt-23zxltg9d-Z4d3EJuhZbCLGwtnLWWUDg?spm=a2c22.12281976.0.0.7a8275bc8g7ypp) /[钉钉群](https://qr.dingtalk.com/action/joingroup?code=v1,k1,YFIXM2leDEk7gJP5aMC95AfYT+Oo/EP/ihnaIEhMyJM=&_dt_no_comment=1&origin=11)/...),一起推进LLM-数据的协同开发和研究!

+Data-Juicer正在积极更新和维护中,我们将定期强化和新增更多的功能和数据菜谱。热烈欢迎您加入我们(issues/PRs/[Slack频道](https://join.slack.com/t/data-juicer/shared_invite/zt-23zxltg9d-Z4d3EJuhZbCLGwtnLWWUDg?spm=a2c22.12281976.0.0.7a8275bc8g7ypp) /[钉钉群](https://qr.dingtalk.com/action/joingroup?code=v1,k1,YFIXM2leDEk7gJP5aMC95AfYT+Oo/EP/ihnaIEhMyJM=&_dt_no_comment=1&origin=11)/...),一起推进大模型的数据-模型协同开发和研究应用!

----

## 新消息

+-  [2025-01-11] 我们发布了 2.0 版论文 [Data-Juicer 2.0: Cloud-Scale Adaptive Data Processing for Foundation Models](https://dail-wlcb.oss-cn-wulanchabu.aliyuncs.com/data_juicer/DJ2.0_arXiv_preview.pdf)。DJ现在可以使用阿里云集群中 50 个 Ray 节点上的 6400 个 CPU 核心在 2.1 小时内处理 70B 数据样本,并使用 8 个 Ray 节点上的 1280 个 CPU 核心在 2.8 小时内对 5TB 数据进行重复数据删除。

+-  [2025-01-03] 我们通过 20 多个相关的新 [OP](https://github.com/modelscope/data-juicer/releases/tag/v1.0.2) 以及与 LLaMA-Factory 和 ModelScope-Swift 兼容的统一 [数据集格式](https://github.com/modelscope/data-juicer/releases/tag/v1.0.3) 更好地支持Post-Tuning场景。

+-  [2025-12-17] 我们提出了 *HumanVBench*,它包含 17 个以人为中心的任务,使用合成数据,从内在情感和外在表现的角度对视频 MLLM 的能力进行基准测试。请参阅我们的 [论文](https://arxiv.org/abs/2412.17574) 中的更多详细信息,并尝试使用它 [评估](https://github.com/modelscope/data-juicer/tree/HumanVBench) 您的模型。

+-  [2024-11-22] 我们发布 DJ [v1.0.0](https://github.com/modelscope/data-juicer/releases/tag/v1.0.0),其中我们重构了 Data-Juicer 的 *Operator*、*Dataset*、*Sandbox* 和许多其他模块以提高可用性,例如支持容错、FastAPI 和自适应资源管理。

+- [2024-08-25] 我们在 KDD'2024 中提供了有关多模态 LLM 数据处理的[教程](https://modelscope.github.io/data-juicer/_static/tutorial_kdd24.html)。

+

+

[](https://pypi.org/project/py-data-juicer)

[](https://hub.docker.com/r/datajuicer/data-juicer)

-[](docs/DeveloperGuide_ZH.md)

-[](docs/DeveloperGuide_ZH.md)

+[](#dj-cookbook)

+[](#dj-cookbook)

[](https://modelscope.cn/studios?name=Data-Jiucer&page=1&sort=latest&type=1)

[](https://huggingface.co/spaces?&search=datajuicer)

-[](README.md#documents)

-[](#documents)

-[](https://modelscope.github.io/data-juicer/)

-[](https://arxiv.org/abs/2309.02033)

+[](#dj-cookbook)

+[](README_ZH.md#dj-cookbook)

+[](docs/Operators.md)

+[-B31B1B?logo=arxiv&logoColor=red)](https://arxiv.org/abs/2309.02033)

+[](https://dail-wlcb.oss-cn-wulanchabu.aliyuncs.com/data_juicer/DJ2.0_arXiv_preview.pdf)

-Data-Juicer 是一个一站式**多模态**数据处理系统,旨在为大语言模型 (LLM) 提供更高质量、更丰富、更易“消化”的数据。

+Data-Juicer 是一个一站式系统,面向大模型的文本及多模态数据处理。我们提供了一个基于 JupyterLab 的 [Playground](http://8.138.149.181/),您可以从浏览器中在线试用 Data-Juicer。 如果Data-Juicer对您的研发有帮助,请支持加星(自动订阅我们的新发布)、以及引用我们的[工作](#参考文献) 。

-我们提供了一个基于 JupyterLab 的 [Playground](http://8.138.149.181/),您可以从浏览器中在线试用 Data-Juicer。 如果Data-Juicer对您的研发有帮助,请引用我们的[工作](#参考文献) 。

+[阿里云人工智能平台 PAI](https://www.aliyun.com/product/bigdata/learn) 已引用Data-Juicer并将其能力集成到PAI的数据处理产品中。PAI提供包含数据集管理、算力管理、模型工具链、模型开发、模型训练、模型部署、AI资产管理在内的功能模块,为用户提供高性能、高稳定、企业级的大模型工程化能力。数据处理的使用文档请参考:[PAI-大模型数据处理](https://help.aliyun.com/zh/pai/user-guide/components-related-to-data-processing-for-foundation-models/?spm=a2c4g.11186623.0.0.3e9821a69kWdvX)。

-[阿里云人工智能平台 PAI](https://www.aliyun.com/product/bigdata/learn) 已引用我们的工作,将Data-Juicer的能力集成到PAI的数据处理产品中。PAI提供包含数据集管理、算力管理、模型工具链、模型开发、模型训练、模型部署、AI资产管理在内的功能模块,为用户提供高性能、高稳定、企业级的大模型工程化能力。数据处理的使用文档请参考:[PAI-大模型数据处理](https://help.aliyun.com/zh/pai/user-guide/components-related-to-data-processing-for-foundation-models/?spm=a2c4g.11186623.0.0.3e9821a69kWdvX)。

-

-Data-Juicer正在积极更新和维护中,我们将定期强化和新增更多的功能和数据菜谱。热烈欢迎您加入我们(issues/PRs/[Slack频道](https://join.slack.com/t/data-juicer/shared_invite/zt-23zxltg9d-Z4d3EJuhZbCLGwtnLWWUDg?spm=a2c22.12281976.0.0.7a8275bc8g7ypp) /[钉钉群](https://qr.dingtalk.com/action/joingroup?code=v1,k1,YFIXM2leDEk7gJP5aMC95AfYT+Oo/EP/ihnaIEhMyJM=&_dt_no_comment=1&origin=11)/...),一起推进LLM-数据的协同开发和研究!

+Data-Juicer正在积极更新和维护中,我们将定期强化和新增更多的功能和数据菜谱。热烈欢迎您加入我们(issues/PRs/[Slack频道](https://join.slack.com/t/data-juicer/shared_invite/zt-23zxltg9d-Z4d3EJuhZbCLGwtnLWWUDg?spm=a2c22.12281976.0.0.7a8275bc8g7ypp) /[钉钉群](https://qr.dingtalk.com/action/joingroup?code=v1,k1,YFIXM2leDEk7gJP5aMC95AfYT+Oo/EP/ihnaIEhMyJM=&_dt_no_comment=1&origin=11)/...),一起推进大模型的数据-模型协同开发和研究应用!

----

## 新消息

+-  [2025-01-11] 我们发布了 2.0 版论文 [Data-Juicer 2.0: Cloud-Scale Adaptive Data Processing for Foundation Models](https://dail-wlcb.oss-cn-wulanchabu.aliyuncs.com/data_juicer/DJ2.0_arXiv_preview.pdf)。DJ现在可以使用阿里云集群中 50 个 Ray 节点上的 6400 个 CPU 核心在 2.1 小时内处理 70B 数据样本,并使用 8 个 Ray 节点上的 1280 个 CPU 核心在 2.8 小时内对 5TB 数据进行重复数据删除。

+-  [2025-01-03] 我们通过 20 多个相关的新 [OP](https://github.com/modelscope/data-juicer/releases/tag/v1.0.2) 以及与 LLaMA-Factory 和 ModelScope-Swift 兼容的统一 [数据集格式](https://github.com/modelscope/data-juicer/releases/tag/v1.0.3) 更好地支持Post-Tuning场景。

+-  [2025-12-17] 我们提出了 *HumanVBench*,它包含 17 个以人为中心的任务,使用合成数据,从内在情感和外在表现的角度对视频 MLLM 的能力进行基准测试。请参阅我们的 [论文](https://arxiv.org/abs/2412.17574) 中的更多详细信息,并尝试使用它 [评估](https://github.com/modelscope/data-juicer/tree/HumanVBench) 您的模型。

+-  [2024-11-22] 我们发布 DJ [v1.0.0](https://github.com/modelscope/data-juicer/releases/tag/v1.0.0),其中我们重构了 Data-Juicer 的 *Operator*、*Dataset*、*Sandbox* 和许多其他模块以提高可用性,例如支持容错、FastAPI 和自适应资源管理。

+- [2024-08-25] 我们在 KDD'2024 中提供了有关多模态 LLM 数据处理的[教程](https://modelscope.github.io/data-juicer/_static/tutorial_kdd24.html)。

+

+

+Warnings : {warning_count}\n' \

+ f'Errors : {total_error_count}\n'

+ error_items = list(error_dict.items())

+ error_items.sort(key=lambda it: it[1], reverse=True)

+ error_items = error_items[:max_show_item]

+ # convert error items to a table

+ if len(error_items) > 0:

+ error_table = []

+ table_header = [

+ 'OP/Method', 'Error Type', 'Error Message', 'Error Count'

+ ]

+ for key, num in error_items:

+ op_name, error_type, error_msg = key

+ error_table.append([op_name, error_type, error_msg, num])

+ table = tabulate(error_table, table_header, tablefmt='fancy_grid')

+ summary += table

+ summary += f'\nError/Warning details can be found in the log file ' \

+ f'[{log_file}] and its related log files.'

+ logger.opt(ansi=True).info(summary)

+

+

class HiddenPrints:

"""Define a range that hide the outputs within this range."""

diff --git a/data_juicer/utils/model_utils.py b/data_juicer/utils/model_utils.py

index 6e32434fa..dd99032e3 100644

--- a/data_juicer/utils/model_utils.py

+++ b/data_juicer/utils/model_utils.py

@@ -79,7 +79,7 @@ def check_model(model_name, force=False):

download again forcefully.

"""

# check for local model

- if os.path.exists(model_name):

+ if not force and os.path.exists(model_name):

return model_name

if not os.path.exists(DJMC):

@@ -744,12 +744,65 @@ def prepare_vllm_model(pretrained_model_name_or_path, **model_params):

if model_params.get('device', '').startswith('cuda:'):

model_params['device'] = 'cuda'

- model = vllm.LLM(model=pretrained_model_name_or_path, **model_params)

+ model = vllm.LLM(model=pretrained_model_name_or_path,

+ generation_config='auto',

+ **model_params)

tokenizer = model.get_tokenizer()

return (model, tokenizer)

+def update_sampling_params(sampling_params,

+ pretrained_model_name_or_path,

+ enable_vllm=False):

+ if enable_vllm:

+ update_keys = {'max_tokens'}

+ else:

+ update_keys = {'max_new_tokens'}

+ generation_config_keys = {

+ 'max_tokens': ['max_tokens', 'max_new_tokens'],

+ 'max_new_tokens': ['max_tokens', 'max_new_tokens'],

+ }

+ generation_config_thresholds = {

+ 'max_tokens': (max, 512),

+ 'max_new_tokens': (max, 512),

+ }

+

+ # try to get the generation configs

+ from transformers import GenerationConfig

+ try:

+ model_generation_config = GenerationConfig.from_pretrained(

+ pretrained_model_name_or_path).to_dict()

+ except: # noqa: E722

+ logger.warning(f'No generation config found for the model '

+ f'[{pretrained_model_name_or_path}]')

+ model_generation_config = {}

+

+ for key in update_keys:

+ # if there is this param in the sampling_prams, compare it with the

+ # thresholds and apply the specified updating function

+ if key in sampling_params:

+ logger.debug(f'Found param {key} in the input `sampling_params`.')

+ continue

+ # if not, try to find it in the generation_config of the model

+ found = False

+ for config_key in generation_config_keys[key]:

+ if config_key in model_generation_config \

+ and model_generation_config[config_key]:

+ sampling_params[key] = model_generation_config[config_key]

+ found = True

+ break

+ if found:

+ logger.debug(f'Found param {key} in the generation config as '

+ f'{sampling_params[key]}.')

+ continue

+ # if not again, use the threshold directly

+ _, th = generation_config_thresholds[key]

+ sampling_params[key] = th

+ logger.debug(f'Use the threshold {th} as the sampling param {key}.')

+ return sampling_params

+

+

MODEL_FUNCTION_MAPPING = {

'api': prepare_api_model,

'diffusion': prepare_diffusion_model,

diff --git a/demos/process_on_ray/configs/dedup.yaml b/demos/process_on_ray/configs/dedup.yaml

new file mode 100644

index 000000000..642203249

--- /dev/null

+++ b/demos/process_on_ray/configs/dedup.yaml

@@ -0,0 +1,15 @@

+# Process config example for dataset

+

+# global parameters

+project_name: 'demo-dedup'

+dataset_path: './demos/process_on_ray/data/'

+export_path: './outputs/demo-dedup/demo-ray-bts-dedup-processed'

+

+executor_type: 'ray'

+ray_address: 'auto'

+

+# process schedule

+# a list of several process operators with their arguments

+process:

+ - ray_bts_minhash_deduplicator:

+ tokenization: 'character'

\ No newline at end of file

diff --git a/demos/process_on_ray/configs/demo.yaml b/demos/process_on_ray/configs/demo.yaml

index 1e3e4a55a..5154da014 100644

--- a/demos/process_on_ray/configs/demo.yaml

+++ b/demos/process_on_ray/configs/demo.yaml

@@ -2,11 +2,12 @@

# global parameters

project_name: 'ray-demo'

-executor_type: 'ray'

dataset_path: './demos/process_on_ray/data/demo-dataset.jsonl' # path to your dataset directory or file

-ray_address: 'auto' # change to your ray cluster address, e.g., ray://:

export_path: './outputs/demo/demo-processed'

+executor_type: 'ray'

+ray_address: 'auto' # change to your ray cluster address, e.g., ray://:

+

# process schedule

# a list of several process operators with their arguments

process:

diff --git a/demos/role_playing_system_prompt/README_ZH.md b/demos/role_playing_system_prompt/README_ZH.md

index 956c335bb..6c1e93bba 100644

--- a/demos/role_playing_system_prompt/README_ZH.md

+++ b/demos/role_playing_system_prompt/README_ZH.md

@@ -1,9 +1,11 @@

# 为LLM构造角色扮演的system prompt

-在该Demo中,我们展示了如何通过Data-Juicer的菜谱,生成让LLM扮演剧本中给定角色的system prompt。我们这里以《莲花楼》为例。

+在该Demo中,我们展示了如何通过Data-Juicer的菜谱,生成让LLM扮演剧本中给定角色的system prompt。我们这里以《西游记》为例。下面是在少量剧本上的演示:

+

+https://github.com/user-attachments/assets/20499385-1791-4089-8074-cebefe8c7e80

## 数据准备

-将《莲花楼》按章节划分,按顺序每个章节对应Data-Juicer的一个sample,放到“text”关键字下。如下json格式:

+将《西游记》按章节划分,按顺序每个章节对应Data-Juicer的一个sample,放到“text”关键字下。如下json格式:

```json

[

{'text': '第一章内容'},

@@ -21,29 +23,28 @@ python tools/process_data.py --config ./demos/role_playing_system_prompt/role_pl

## 生成样例

```text

-扮演李莲花与用户进行对话。

# 角色身份

-原名李相夷,曾是武林盟主,创立四顾门。十年前因中碧茶之毒,隐姓埋名,成为莲花楼的老板,过着市井生活。

+花果山水帘洞美猴王,拜须菩提祖师学艺,得名孙悟空,号称齐天大圣。

# 角色经历

-李莲花原名李相夷,十五岁战胜西域天魔,十七岁建立四顾门,二十岁问鼎武林盟主,成为传奇人物。在与金鸳盟盟主笛飞声的对决中,李相夷中毒重伤,沉入大海,十年后在莲花楼醒来,过起了市井生活。他帮助肉铺掌柜解决家庭矛盾,表现出敏锐的洞察力。李莲花与方多病合作,解决了灵山派掌门王青山的假死案,揭露了朴管家的罪行。随后,他与方多病和笛飞声一起调查了玉秋霜的死亡案,最终揭露了玉红烛的阴谋。在朴锄山,李莲花和方多病调查了七具无头尸事件,发现男童的真实身份是笛飞声。李莲花利用飞猿爪偷走男童手中的观音垂泪,导致笛飞声恢复内力,但李莲花巧妙逃脱。李莲花与方多病继续合作,调查了少师剑被盗案,揭露了静仁和尚的阴谋。在采莲庄,他解决了新娘溺水案,找到了狮魂的线索,并在南门园圃挖出单孤刀的药棺。在玉楼春的案件中,李莲花和方多病揭露了玉楼春的阴谋,救出了被拐的清儿。在石寿村,他们发现了柔肠玉酿的秘密,并救出了被控制的武林高手。李莲花与方多病在白水园设下机关,救出方多病的母亲何晓惠,并最终在云隐山找到了治疗碧茶之毒的方法。在天机山庄,他揭露了单孤刀的野心,救出了被控制的大臣。在皇宫,李莲花与方多病揭露了魔僧和单孤刀的阴谋,成功解救了皇帝。最终,李莲花在东海之滨与笛飞声的决斗中未出现,留下一封信,表示自己已无法赴约。一年后,方多病在东海畔的柯厝村找到了李莲花,此时的李莲花双目失明,右手残废,但心态平和,过着简单的生活。

+孙悟空自东胜神洲花果山水帘洞的仙石中孕育而生,被群猴拥戴为“美猴王”。因担忧生死,美猴王离开花果山,渡海至南赡部洲,后前往西牛贺洲,最终在灵台方寸山斜月三星洞拜须菩提祖师为师,得名“孙悟空”。祖师传授他长生不老之术及七十二变等神通。学成归来后,孙悟空回到花果山,成为一方霸主。

# 角色性格

-李莲花是一个机智、幽默、善于观察和推理的人物。他表面上看似随和、悠闲,甚至有些懒散,但实际上心思缜密,洞察力极强。他不仅具备敏锐的观察力和独特的思维方式,还拥有深厚的内功和高超的医术。他对朋友忠诚,愿意为了保护他们不惜一切代价,同时在面对敌人时毫不手软。尽管内心充满正义感和责任感,但他选择远离江湖纷争,追求宁静自在的生活。他对过去的自己(李相夷)有着深刻的反思,对乔婉娩的感情复杂,既有愧疚也有关怀。李莲花能够在复杂的环境中保持冷静,巧妙地利用智慧和技能解决问题,展现出非凡的勇气和决心。

+孙悟空以其勇敢、机智、领导力和敏锐的洞察力在群猴中脱颖而出,成为领袖。他不仅武艺高强,还具备强烈的求知欲和探索精神,追求长生不老,最终成为齐天大圣。

# 角色能力

-李莲花是一位智慧与武艺兼备的高手,拥有深厚的内力、高超的医术和敏锐的洞察力。他擅长使用轻功、剑术和特殊武器,如婆娑步和少师剑,能够在关键时刻化解危机。尽管身体状况不佳,他仍能通过内功恢复体力,运用智谋和技巧应对各种挑战。他在江湖中身份多变,既能以游医身份逍遥自在,也能以李相夷的身份化解武林危机。

+孙悟空由仙石孕育而成,具备超凡智慧、力量及体能,能跳跃、攀爬、翻腾,进入水帘洞,并被众猴拥立为王。他武艺高强,能变化身形施展七十二变,力大无穷,拥有长生不老的能力,躲避阎王管辖,追求永恒生命。

# 人际关系

-方多病 (称呼:方小宝、方大少爷)李莲花的徒弟。百川院刑探,单孤刀之子,李相夷的徒弟。方多病通过百川院的考核,成为刑探,并在百川院内展示了自己是李相夷的弟子,获得暂时的录用。他接到任务前往嘉州调查金鸳盟的余孽,期间与李莲花相识并合作破案。方多病在调查过程中逐渐了解到自己的身世,发现自己的生父是单孤刀。他与李莲花、笛飞声等人多次合作,共同对抗金鸳盟和单孤刀的阴谋。方多病在一系列案件中展现了出色的推理能力和武艺,逐渐成长为一名优秀的刑探。最终,方多病在天机山庄和皇宫的斗争中发挥了关键作用,帮助李莲花等人挫败了单孤刀的野心。在李莲花中毒后,方多病决心为他寻找解毒之法,展现了深厚的友情。

-笛飞声 (称呼:阿飞、笛大盟主)金鸳盟盟主,曾与李相夷激战并重伤李相夷,后因中毒失去内力,与李莲花有复杂恩怨。笛飞声是金鸳盟盟主,十年前因与李相夷一战成名。他利用单孤刀的弟子朴锄山引诱李相夷,最终重伤李相夷,但自己也被李相夷钉在桅杆上。十年后,笛飞声恢复内力,重新执掌金鸳盟,与角丽谯合作,试图利用罗摩天冰和业火痋控制武林。在与李莲花和方多病的多次交手中,笛飞声多次展现强大实力,但也多次被李莲花等人挫败。最终,笛飞声在与李莲花的对决中被制住,但并未被杀死。笛飞声与李莲花约定在东海再战,但李莲花因中毒未赴约。笛飞声在东海之战中并未出现,留下了许多未解之谜。

-乔婉娩 (称呼:乔姑娘)李莲花的前女友。四顾门前任门主李相夷的爱人,现任门主肖紫衿的妻子,江湖中知名侠女。乔婉娩是四顾门的重要人物,与李相夷有着复杂的情感纠葛。在李相夷失踪后,乔婉娩嫁给了肖紫衿,但内心始终未能忘记李相夷。在李莲花(即李相夷)重新出现后,乔婉娩通过种种线索确认了他的身份,但最终选择支持肖紫衿,维护四顾门的稳定。乔婉娩在四顾门的复兴过程中发挥了重要作用,尤其是在调查金鸳盟和南胤阴谋的过程中,她提供了关键的情报和支持。尽管内心充满矛盾,乔婉娩最终决定与肖紫衿共同面对江湖的挑战,展现了她的坚强和智慧。

-肖紫衿 (称呼:紫衿)李莲花的门主兼旧识。四顾门现任门主,曾与李相夷有深厚恩怨,后与乔婉娩成婚。肖紫衿是四顾门的重要人物,与李相夷和乔婉娩关系密切。他曾在李相夷的衣冠冢前与李莲花对峙,质问他为何归来,并坚持要与李莲花决斗。尽管李莲花展示了武功,但肖紫衿最终选择不与他继续争斗。肖紫衿在乔婉娩与李相夷的误会中扮演了关键角色,一度因嫉妒取消了与乔婉娩的婚事。后来,肖紫衿在乔婉娩的支持下担任四顾门的新门主,致力于复兴四顾门。在与单孤刀的对抗中,肖紫衿展现了坚定的决心和领导能力,最终带领四顾门取得了胜利。

-单孤刀 (称呼:师兄)李莲花的师兄兼敌人。单孤刀,李莲花的师兄,四顾门创始人之一,因不满李相夷与金鸳盟签订协定而独自行动,最终被金鸳盟杀害。单孤刀是李莲花的师兄,与李相夷一同创立四顾门。单孤刀性格争强好胜,难以容人,最终因不满李相夷与金鸳盟签订协定,决定独自行动。单孤刀被金鸳盟杀害,李相夷得知后悲愤交加,誓言与金鸳盟不死不休。单孤刀的死成为李相夷心中的一大阴影,多年后李莲花在调查中发现单孤刀并非真正死亡,而是诈死以实现自己的野心。最终,单孤刀在与李莲花和方多病的对决中失败,被轩辕箫的侍卫杀死。

+须菩提祖师 (称呼:须菩提祖师)孙悟空的师父。灵台方寸山斜月三星洞的神仙,美猴王的师父。须菩提祖师居住在西牛贺洲的灵台方寸山斜月三星洞,是一位高深莫测的神仙。孙悟空前来拜师,祖师询问其来历后,为其取名“孙悟空”。祖师传授孙悟空长生不老之术及七十二变等神通,使孙悟空成为一代强者。

+众猴 (称呼:众猴)孙悟空的臣民兼伙伴。花果山上的猴子,拥戴石猴为王,称其为“美猴王”。众猴生活在东胜神洲花果山,与石猴(后来的美猴王)共同玩耍。一天,众猴发现瀑布后的石洞,约定谁能进去不受伤就拜他为王。石猴勇敢跳入瀑布,发现洞内设施齐全,带领众猴进入,被拥戴为王。美猴王在花果山过着逍遥自在的生活,但因担忧生死问题决定外出寻仙学艺。众猴设宴为美猴王送行,助其踏上旅程。

+阎王 (称呼:阎王)孙悟空的对立者。掌管阴间,负责管理亡魂和裁决生死。阎王掌管阴曹地府,负责管理亡魂和审判死者。在《西游记》中,阎王曾因孙悟空担忧年老血衰而被提及。孙悟空为逃避阎王的管辖,决定寻找长生不老之术,最终拜须菩提祖师为师,学得神通广大。

+盘古 (称呼:盘古)孙悟空的前辈。开天辟地的创世神,天地人三才定位的始祖。盘古在天地分为十二会的寅会时,开辟了混沌,使世界分为四大部洲。他创造了天地人三才,奠定了万物的基础。盘古的开天辟地之举,使宇宙得以形成,万物得以诞生。

# 语言风格

-李莲花的语言风格幽默诙谐,充满智慧和机智,善于用轻松的语气化解紧张的气氛。他常用比喻、反讽和夸张来表达复杂的观点,同时在关键时刻能简洁明了地揭示真相。他的言语中带有调侃和自嘲,但又不失真诚和温情,展现出一种从容不迫的态度。无论是面对朋友还是敌人,李莲花都能以幽默和智慧赢得尊重。

-供参考语言风格的部分李莲花台词:

-李莲花:你问我干吗?该启程了啊。

-李莲花:说起师门,你怎么也算云隐山一份子啊?不如趁今日叩拜了你师祖婆婆,再正儿八经给我这个师父磕头敬了茶,往后我守山中、你也尽心在跟前罢?

-李莲花:恭贺肖大侠和乔姑娘,喜结连理。

-李莲花淡淡一笑:放心吧,该看到的,都看到了。

-李莲花:如果现在去百川院,你家旺福就白死了。

+孙悟空的语言风格直接、豪放且充满自信与活力,善于使用夸张和比喻的手法,既展现出豪情壮志和幽默感,也表现出对长辈和师傅的尊敬。

+供参考语言风格的部分孙悟空台词:

+

+石猴喜不自胜急抽身往外便走复瞑目蹲身跳出水外打了两个呵呵道:“大造化!大造化!”

+石猿端坐上面道:“列位呵‘人而无信不知其可。’你们才说有本事进得来出得去不伤身体者就拜他为王。我如今进来又出去出去又进来寻了这一个洞天与列位安眠稳睡各享成家之福何不拜我为王?”

+猴王道:“弟子东胜神洲傲来国花果山水帘洞人氏。”

+猴王笑道:“好!好!好!自今就叫做孙悟空也!”

+“我明日就辞汝等下山云游海角远涉天涯务必访此三者学一个不老长生常躲过阎君之难。”

```

diff --git a/demos/role_playing_system_prompt/role_playing_system_prompt.yaml b/demos/role_playing_system_prompt/role_playing_system_prompt.yaml

index da044ae75..f2d8fc248 100644

--- a/demos/role_playing_system_prompt/role_playing_system_prompt.yaml

+++ b/demos/role_playing_system_prompt/role_playing_system_prompt.yaml

@@ -1,6 +1,6 @@

# global parameters

project_name: 'role-play-demo-process'

-dataset_path: 'path_to_the_lianhualou_novel_json_file'

+dataset_path: 'demos/role_playing_system_prompt/wukong_mini_test.json'

np: 1 # number of subprocess to process your dataset

export_path: 'path_to_output_jsonl_file'

@@ -17,7 +17,7 @@ process:

# extract language_style, role_charactor and role_skill

- extract_entity_attribute_mapper:

api_model: 'qwen2.5-72b-instruct'

- query_entities: ['李莲花']

+ query_entities: ['孙悟空']

query_attributes: ["角色性格", "角色武艺和能力", "语言风格"]

# extract nickname

- extract_nickname_mapper:

@@ -31,14 +31,14 @@ process:

# role experiences summary from events

- entity_attribute_aggregator:

api_model: 'qwen2.5-72b-instruct'

- entity: '李莲花'

+ entity: '孙悟空'

attribute: '身份背景'

input_key: 'event_description'

output_key: 'role_background'

word_limit: 50

- entity_attribute_aggregator:

api_model: 'qwen2.5-72b-instruct'

- entity: '李莲花'

+ entity: '孙悟空'

attribute: '主要经历'

input_key: 'event_description'

output_key: 'role_experience'

@@ -46,12 +46,12 @@ process:

# most relavant roles summary from events

- most_relavant_entities_aggregator:

api_model: 'qwen2.5-72b-instruct'

- entity: '李莲花'

+ entity: '孙悟空'

query_entity_type: '人物'

input_key: 'event_description'

output_key: 'important_relavant_roles'

# generate the system prompt

- python_file_mapper:

- file_path: 'path_to_system_prompt_gereration_python_file'

+ file_path: 'demos/role_playing_system_prompt/system_prompt_generator.py'

function_name: 'get_system_prompt'

\ No newline at end of file

diff --git a/demos/role_playing_system_prompt/system_prompt_generator.py b/demos/role_playing_system_prompt/system_prompt_generator.py

index afbeb9bd4..94ce24c66 100644

--- a/demos/role_playing_system_prompt/system_prompt_generator.py

+++ b/demos/role_playing_system_prompt/system_prompt_generator.py

@@ -13,7 +13,7 @@

api_model = 'qwen2.5-72b-instruct'

-main_entity = "李莲花"

+main_entity ="孙悟空"

query_attributes = ["语言风格", "角色性格", "角色武艺和能力"]

system_prompt_key = 'system_prompt'

example_num_limit = 5

@@ -64,11 +64,11 @@ def get_nicknames(sample):

nicknames = dedup_sort_val_by_chunk_id(sample, 'chunk_id', MetaKeys.nickname)

nickname_map = {}

for nr in nicknames:

- if nr[Fields.source_entity] == main_entity:

- role_name = nr[Fields.target_entity]

+ if nr[MetaKeys.source_entity] == main_entity:

+ role_name = nr[MetaKeys.target_entity]

if role_name not in nickname_map:

nickname_map[role_name] = []

- nickname_map[role_name].append(nr[Fields.relation_description])

+ nickname_map[role_name].append(nr[MetaKeys.relation_description])

max_nums = 3

for role_name, nickname_list in nickname_map.items():

diff --git a/docs/DJ_SORA.md b/docs/DJ_SORA.md

index 21720e70c..2b3f572d2 100644

--- a/docs/DJ_SORA.md

+++ b/docs/DJ_SORA.md

@@ -4,7 +4,7 @@ English | [中文页面](DJ_SORA_ZH.md)

Data is the key to the unprecedented development of large multi-modal models such as SORA. How to obtain and process data efficiently and scientifically faces new challenges! DJ-SORA aims to create a series of large-scale, high-quality open-source multi-modal data sets to assist the open-source community in data understanding and model training.

-DJ-SORA is based on Data-Juicer (including hundreds of dedicated video, image, audio, text and other multi-modal data processing [operators](Operators_ZH.md) and tools) to form a series of systematic and reusable Multimodal "data recipes" for analyzing, cleaning, and generating large-scale, high-quality multimodal data.

+DJ-SORA is based on Data-Juicer (including hundreds of dedicated video, image, audio, text and other multi-modal data processing [operators](Operators.md) and tools) to form a series of systematic and reusable Multimodal "data recipes" for analyzing, cleaning, and generating large-scale, high-quality multimodal data.

This project is being actively updated and maintained. We eagerly invite you to participate and jointly create a more open and higher-quality multi-modal data ecosystem to unleash the unlimited potential of large models!

diff --git a/docs/DJ_SORA_ZH.md b/docs/DJ_SORA_ZH.md

index 3350f34c3..079adcef2 100644

--- a/docs/DJ_SORA_ZH.md

+++ b/docs/DJ_SORA_ZH.md

@@ -4,7 +4,7 @@

数据是SORA等前沿大模型的关键,如何高效科学地获取和处理数据面临新的挑战!DJ-SORA旨在创建一系列大规模高质量开源多模态数据集,助力开源社区数据理解和模型训练。

-DJ-SORA将基于Data-Juicer(包含上百个专用的视频、图像、音频、文本等多模态数据处理[算子](Operators_ZH.md)及工具),形成一系列系统化可复用的多模态“数据菜谱”,用于分析、清洗及生成大规模高质量多模态数据。

+DJ-SORA将基于Data-Juicer(包含上百个专用的视频、图像、音频、文本等多模态数据处理[算子](Operators.md)及工具),形成一系列系统化可复用的多模态“数据菜谱”,用于分析、清洗及生成大规模高质量多模态数据。

本项目正在积极更新和维护中,我们热切地邀请您参与,共同打造一个更开放、更高质的多模态数据生态系统,激发大模型无限潜能!

diff --git a/docs/DeveloperGuide.md b/docs/DeveloperGuide.md

index 734f1201a..e6fa17757 100644

--- a/docs/DeveloperGuide.md

+++ b/docs/DeveloperGuide.md

@@ -1,12 +1,11 @@

# How-to Guide for Developers

-- [How-to Guide for Developers](#how-to-guide-for-developers)

- - [Coding Style](#coding-style)

- - [Build your own OPs](#build-your-own-ops)

- - [(Optional) Make your OP fusible](#optional-make-your-op-fusible)

- - [Build your own configs](#build-your-own-configs)

- - [Fruitful config sources \& Type hints](#fruitful-config-sources--type-hints)

- - [Hierarchical configs and helps](#hierarchical-configs-and-helps)

+- [Coding Style](#coding-style)

+- [Build your own OPs](#build-your-own-ops)

+ - [(Optional) Make your OP fusible](#optional-make-your-op-fusible)

+- [Build your own configs](#build-your-own-configs)

+ - [Fruitful config sources \& Type hints](#fruitful-config-sources--type-hints)

+ - [Hierarchical configs and helps](#hierarchical-configs-and-helps)

## Coding Style

@@ -40,6 +39,10 @@ and ② execute `pre-commit run --all-files` before push.

- Data-Juicer allows everybody to build their own OPs.

- Before implementing a new OP, please refer to [Operators](Operators.md) to avoid unnecessary duplication.

+- According to the implementation progress, OP will be categorized into 3 types of versions:

+ -  version: Only the basic OP implementations are finished.

+ -  version: Based on the alpha version, unittests for this OP are added as well.

+ -  version: Based on the beta version, OP optimizations (e.g. model management, batched processing, OP fusion, ...)

- Assuming we want to add a new Filter operator called "TextLengthFilter" to get corpus of expected text length, we can follow these steps to build it.

1. (Optional) Add a new StatsKeys in `data_juicer/utils/constant.py` to store the statistical variable of the new OP.

@@ -50,7 +53,7 @@ class StatsKeys(object):

text_len = 'text_len'

```

-2. Create a new OP file `text_length_filter.py` in the corresponding `data_juicer/ops/filter/` directory as follows.

+2. () Create a new OP file `text_length_filter.py` in the corresponding `data_juicer/ops/filter/` directory as follows.

- It's a Filter OP, so the new OP needs to inherit from the basic `Filter` class in the `base_op.py`, and be decorated with `OPERATORS` to register itself automatically.

- For convenience, we can implement the core functions `compute_stats_single` and `process_single` in a single-sample way, whose input and output are a single sample dictionary. If you are very familiar with batched processing in Data-Juicer, you can also implement the batched version directly by overwriting the `compute_stats_batched` and `process_batched` functions, which will be slightly faster than single-sample version. Their input and output are a column-wise dict with multiple samples.

@@ -105,7 +108,7 @@ class StatsKeys(object):

return False

```

- - If Hugging Face models are used within an operator, you might want to leverage GPU acceleration. To achieve this, declare `_accelerator = 'cuda'` in the constructor, and ensure that `compute_stats_single/batched` and `process_single/batched` methods accept an additional positional argument `rank`.

+ - () If Hugging Face models are used within an operator, you might want to leverage GPU acceleration. To achieve this, declare `_accelerator = 'cuda'` in the constructor, and ensure that `compute_stats_single/batched` and `process_single/batched` methods accept an additional positional argument `rank`.

```python

# ... (same as above)

@@ -129,7 +132,7 @@ class StatsKeys(object):

# ... (same as above)

```

- - If the operator processes data in batches rather than a single sample, or you want to enable batched processing, it is necessary to declare `_batched_op = True`.

+ - () If the operator processes data in batches rather than a single sample, or you want to enable batched processing, it is necessary to declare `_batched_op = True`.

- For the original `compute_stats_single` and `process_single` functions, you can keep it still and Data-Juicer will call the default batched version to call the single version to support batched processing. Or you can implement your batched version in a more efficient way.

```python

# ... (import some other libraries)

@@ -149,7 +152,7 @@ class StatsKeys(object):

# ... (some codes)

```

- - In a mapper operator, to avoid process conflicts and data coverage, we offer an interface to make a saving path for produced extra datas. The format of the saving path is `{ORIGINAL_DATAPATH}/__dj__produced_data__/{OP_NAME}/{ORIGINAL_FILENAME}__dj_hash_#{HASH_VALUE}#.{EXT}`, where the `HASH_VALUE` is hashed from the init parameters of the operator, the related parameters in each sample, the process ID, and the timestamp. For convenience, we can call `self.remove_extra_parameters(locals())` at the beginning of the initiation to get the init parameters. At the same time, we can call `self.add_parameters` to add related parameters with the produced extra datas from each sample. Take the operator which enhances the images with diffusion models as example:

+ - () In a mapper operator, to avoid process conflicts and data coverage, we offer an interface to make a saving path for produced extra datas. The format of the saving path is `{ORIGINAL_DATAPATH}/__dj__produced_data__/{OP_NAME}/{ORIGINAL_FILENAME}__dj_hash_#{HASH_VALUE}#.{EXT}`, where the `HASH_VALUE` is hashed from the init parameters of the operator, the related parameters in each sample, the process ID, and the timestamp. For convenience, we can call `self.remove_extra_parameters(locals())` at the beginning of the initiation to get the init parameters. At the same time, we can call `self.add_parameters` to add related parameters with the produced extra datas from each sample. Take the operator which enhances the images with diffusion models as example:

```python

from data_juicer.utils.file_utils import transfer_filename

# ... (import some other libraries)

@@ -196,7 +199,7 @@ class StatsKeys(object):

# ... (some codes)

```

-3. After implemention, add it to the OP dictionary in the `__init__.py` file in `data_juicer/ops/filter/` directory.

+3. () After implemention, add it to the OP dictionary in the `__init__.py` file in `data_juicer/ops/filter/` directory.

```python

from . import (..., # other OPs

@@ -209,7 +212,7 @@ __all__ = [

]

```

-4. When an operator has package dependencies listed in `environments/science_requires.txt`, you need to add the corresponding dependency packages to the `OPS_TO_PKG` dictionary in `data_juicer/utils/auto_install_mapping.py` to support dependency installation at the operator level.

+4. () When an operator has package dependencies listed in `environments/science_requires.txt`, you need to add the corresponding dependency packages to the `OPS_TO_PKG` dictionary in `data_juicer/utils/auto_install_mapping.py` to support dependency installation at the operator level.

5. Now you can use this new OP with custom arguments in your own config files!

@@ -224,7 +227,7 @@ process:

max_len: 1000

```

-6. (Strongly Recommend) It's better to add corresponding tests for your own OPs. For `TextLengthFilter` above, you would like to add `test_text_length_filter.py` into `tests/ops/filter/` directory as below.

+6. ( Strongly Recommend) It's better to add corresponding tests for your own OPs. For `TextLengthFilter` above, you would like to add `test_text_length_filter.py` into `tests/ops/filter/` directory as below.

```python

import unittest

@@ -246,7 +249,7 @@ if __name__ == '__main__':

unittest.main()

```

-7. (Strongly Recommend) In order to facilitate the use of other users, we also need to update this new OP information to

+7. ( Strongly Recommend) In order to facilitate the use of other users, we also need to update this new OP information to

the corresponding documents, including the following docs:

1. `configs/config_all.yaml`: this complete config file contains a list of all OPs and their arguments, serving as an

important document for users to refer to all available OPs. Therefore, after adding the new OP, we need to add it to the process

@@ -271,29 +274,9 @@ the corresponding documents, including the following docs:

max_num: 10000 # the max number of filter range

...

```

-

- 2. `docs/Operators.md`: this doc maintains categorized lists of available OPs. We can add the information of new OP to the list

- of corresponding type of OPs (sorted in alphabetical order). At the same time, in the Overview section at the top of this doc,

- we also need to update the number of OPs for the corresponding OP type:

-

- ```markdown

- ## Overview

- ...

- | [ Filter ]( #filter ) | 43 (+1 HERE) | Filters out low-quality samples |

- ...

- ## Filter

- ...

- | text_entity_dependency_filter |     | Keeps samples containing dependency edges for an entity in the dependency tree of the texts | [code](../data_juicer/ops/filter/text_entity_dependency_filter.py) | [tests](../tests/ops/filter/test_text_entity_dependency_filter.py) |

- | text_length_filter |     | Keeps samples with total text length within the specified range | [code](../data_juicer/ops/filter/text_length_filter.py) | [tests](../tests/ops/filter/test_text_length_filter.py) |

- | token_num_filter |      | Keeps samples with token count within the specified range | [code](../data_juicer/ops/filter/token_num_filter.py) | [tests](../tests/ops/filter/test_token_num_filter.py) |

- ...

- ```

-

- 3. `docs/Operators_ZH.md`: this doc is the Chinese version of the doc in 6.ii, so we need to update the Chinese content at

- the same positions.

-### (Optional) Make your OP fusible

+### ( Optional) Make your OP fusible

- If the calculation process of some intermediate variables in the new OP is reused in other existing OPs, this new OP can be

added to the fusible OPs to accelerate the whole data processing with OP fusion technology. (e.g. both the `words_num_filter`

diff --git a/docs/DeveloperGuide_ZH.md b/docs/DeveloperGuide_ZH.md

index fcc76aafe..47d333cfc 100644

--- a/docs/DeveloperGuide_ZH.md

+++ b/docs/DeveloperGuide_ZH.md

@@ -34,7 +34,11 @@ git commit -m ""

## 构建自己的算子

- Data-Juicer 支持每个人定义自己的算子。

-- 在实现新的算子之前,请参考 [Operators](Operators_ZH.md) 以避免不必要的重复。

+- 在实现新的算子之前,请参考 [Operators](Operators.md) 以避免不必要的重复。

+- 根据实现完整性,算子会被分类为3类:

+ -  版本:仅实现了最基本的算子能力

+ -  版本:在 alpha 版本基础上为算子添加了单元测试

+ -  版本:在 beta 版本基础上进行了各项算子优化(如模型管理、批处理、算子融合等)

- 假设要添加一个名为 “TextLengthFilter” 的运算符以过滤仅包含预期文本长度的样本语料,可以按照以下步骤进行构建。

1. (可选) 在 `data_juicer/utils/constant.py` 文件中添加一个新的StatsKeys来保存新算子的统计变量。

@@ -45,7 +49,7 @@ class StatsKeys(object):

text_len = 'text_len'

```

-2. 在 `data_juicer/ops/filter/` 目录下创建一个新的算子文件 `text_length_filter.py`,内容如下:

+2. () 在 `data_juicer/ops/filter/` 目录下创建一个新的算子文件 `text_length_filter.py`,内容如下:

- 因为它是一个 Filter 算子,所以需要继承 `base_op.py` 中的 `Filter` 基类,并用 `OPERATORS` 修饰以实现自动注册。

- 为了方便实现,我们可以以单样本处理的方式实现两个核心方法 `compute_stats_single` 和 `process_single`,它们的输入输出均为单个样本的字典结构。如果你比较熟悉 Data-Juicer 中的batch化处理,你也可以通过覆写 `compute_stats_batched` 和 `process_batched` 方法直接实现它们的batch化版本,它的处理会比单样本版本稍快一些。它们的输入和输出则是按列存储的字典结构,其中包括多个样本。

@@ -100,7 +104,7 @@ class StatsKeys(object):

return False

```

- - 如果在算子中使用了 Hugging Face 模型,您可能希望利用 GPU 加速。为了实现这一点,请在构造函数中声明 `_accelerator = 'cuda'`,并确保 `compute_stats_single/batched` 和 `process_single/batched` 方法接受一个额外的位置参数 `rank`。

+ - () 如果在算子中使用了 Hugging Face 模型,您可能希望利用 GPU 加速。为了实现这一点,请在构造函数中声明 `_accelerator = 'cuda'`,并确保 `compute_stats_single/batched` 和 `process_single/batched` 方法接受一个额外的位置参数 `rank`。

```python

# ... (same as above)

@@ -124,7 +128,7 @@ class StatsKeys(object):

# ... (same as above)

```

- - 如果算子批量处理数据,输入不是一个样本而是一个batch,或者你想在单样本实现上直接激活batch化处理,需要声明`_batched_op = True`。

+ - () 如果算子批量处理数据,输入不是一个样本而是一个batch,或者你想在单样本实现上直接激活batch化处理,需要声明`_batched_op = True`。

- 对于单样本实现中原来的 `compute_stats_single` 和 `process_single` 方法,你可以保持它们不变,Data-Juicer 会调用默认的batch化处理版本,它们会自动拆分单个样本以调用单样本版本的两个方法来支持batch化处理。你也可以自行实现更高效的batch化的版本。

```python

# ... (import some other libraries)

@@ -144,7 +148,7 @@ class StatsKeys(object):

# ... (some codes)

```

- - 在mapper算子中,我们提供了产生额外数据的存储路径生成接口,避免出现进程冲突和数据覆盖的情况。生成的存储路径格式为`{ORIGINAL_DATAPATH}/__dj__produced_data__/{OP_NAME}/{ORIGINAL_FILENAME}__dj_hash_#{HASH_VALUE}#.{EXT}`,其中`HASH_VALUE`是算子初始化参数、每个样本中相关参数、进程ID和时间戳的哈希值。为了方便,可以在OP类初始化开头调用`self.remove_extra_parameters(locals())`获取算子初始化参数,同时可以调用`self.add_parameters`添加每个样本与生成额外数据相关的参数。例如,利用diffusion模型对图像进行增强的算子:

+ - () 在mapper算子中,我们提供了产生额外数据的存储路径生成接口,避免出现进程冲突和数据覆盖的情况。生成的存储路径格式为`{ORIGINAL_DATAPATH}/__dj__produced_data__/{OP_NAME}/{ORIGINAL_FILENAME}__dj_hash_#{HASH_VALUE}#.{EXT}`,其中`HASH_VALUE`是算子初始化参数、每个样本中相关参数、进程ID和时间戳的哈希值。为了方便,可以在OP类初始化开头调用`self.remove_extra_parameters(locals())`获取算子初始化参数,同时可以调用`self.add_parameters`添加每个样本与生成额外数据相关的参数。例如,利用diffusion模型对图像进行增强的算子:

```python

# ... (import some library)

OP_NAME = 'image_diffusion_mapper'

@@ -189,7 +193,7 @@ class StatsKeys(object):

# ... (some codes)

```

-3. 实现后,将其添加到 `data_juicer/ops/filter` 目录下 `__init__.py` 文件中的算子字典中:

+3. () 实现后,将其添加到 `data_juicer/ops/filter` 目录下 `__init__.py` 文件中的算子字典中:

```python

from . import (..., # other OPs

@@ -202,7 +206,7 @@ __all__ = [

]

```

-4. 算子有`environments/science_requires.txt`中列举的包依赖时,需要在`data_juicer/utils/auto_install_mapping.py`里的`OPS_TO_PKG`中添加对应的依赖包,以支持算子粒度的依赖安装。

+4. () 算子有`environments/science_requires.txt`中列举的包依赖时,需要在`data_juicer/utils/auto_install_mapping.py`里的`OPS_TO_PKG`中添加对应的依赖包,以支持算子粒度的依赖安装。

5. 全部完成!现在您可以在自己的配置文件中使用新添加的算子:

@@ -217,7 +221,7 @@ process:

max_len: 1000

```

-6. (强烈推荐)最好为新添加的算子进行单元测试。对于上面的 `TextLengthFilter` 算子,建议在 `tests/ops/filter/` 中实现如 `test_text_length_filter.py` 的测试文件:

+6. ( 强烈推荐)最好为新添加的算子进行单元测试。对于上面的 `TextLengthFilter` 算子,建议在 `tests/ops/filter/` 中实现如 `test_text_length_filter.py` 的测试文件:

```python

import unittest

@@ -240,7 +244,7 @@ if __name__ == '__main__':

unittest.main()

```

-7. (强烈推荐)为了方便其他用户使用,我们还需要将新增的算子信息更新到相应的文档中,具体包括如下文档:

+7. ( 强烈推荐)为了方便其他用户使用,我们还需要将新增的算子信息更新到相应的文档中,具体包括如下文档:

1. `configs/config_all.yaml`:该全集配置文件保存了所有算子及参数的一个列表,作为用户参考可用算子的一个重要文档。因此,在新增算子后,需要将其添加到该文档process列表里(按算子类型分组并按字母序排序):

```yaml

@@ -262,26 +266,9 @@ if __name__ == '__main__':

max_num: 10000 # the max number of filter range

...

```

-

- 2. `docs/Operators.md`:该文档维护了可用算子的分类列表。我们可以把新增算子的信息添加到对应类别算子的列表中(算子按字母排序)。同时,在文档最上方Overview章节,我们也需要更新对应类别的可用算子数目:

-

- ```markdown

- ## Overview

- ...

- | [ Filter ]( #filter ) | 43 (+1 HERE) | Filters out low-quality samples |

- ...

- ## Filter

- ...

- | text_entity_dependency_filter |     | Keeps samples containing dependency edges for an entity in the dependency tree of the texts | [code](../data_juicer/ops/filter/text_entity_dependency_filter.py) | [tests](../tests/ops/filter/test_text_entity_dependency_filter.py) |

- | text_length_filter |     | Keeps samples with total text length within the specified range | [code](../data_juicer/ops/filter/text_length_filter.py) | [tests](../tests/ops/filter/test_text_length_filter.py) |

- | token_num_filter |      | Keeps samples with token count within the specified range | [code](../data_juicer/ops/filter/token_num_filter.py) | [tests](../tests/ops/filter/test_token_num_filter.py) |

- ...

- ```

-

- 3. `docs/Operators_ZH.md`:该文档为6.ii中`docs/Operators.md`文档的中文版,需要更新相同位置处的中文内容。

-### (可选)使新算子可以进行算子融合

+### ( 可选)使新算子可以进行算子融合

- 如果我们的新算子中的部分中间变量的计算过程与已有的算子重复,那么可以将其添加到可融合算子中,以在数据处理时利用算子融合进行加速。(如`words_num_filter`与`word_repetition_filter`都需要对输入文本进行分词)

- 当算子融合(OP Fusion)功能开启时,这些重复的计算过程和中间变量是可以在算子之间的`context`中共享的,从而可以减少重复计算。

diff --git a/docs/Distributed.md b/docs/Distributed.md

new file mode 100644

index 000000000..21314a6f5

--- /dev/null

+++ b/docs/Distributed.md

@@ -0,0 +1,149 @@

+# Distributed Data Processing in Data-Juicer

+

+## Overview

+

+Data-Juicer supports large-scale distributed data processing based on [Ray](https://github.com/ray-project/ray) and Alibaba's [PAI](https://www.aliyun.com/product/bigdata/learn).

+

+With a dedicated design, almost all operators of Data-Juicer implemented in standalone mode can be seamlessly executed in Ray distributed mode. We continuously conduct engine-specific optimizations for large-scale scenarios, such as data subset splitting strategies that balance the number of files and workers, and streaming I/O patches for JSON files to Ray and Apache Arrow.

+

+For reference, in our experiments with 25 to 100 Alibaba Cloud nodes, Data-Juicer in Ray mode processes datasets containing 70 billion samples on 6400 CPU cores in 2 hours and 7 billion samples on 3200 CPU cores in 0.45 hours. Additionally, a MinHash-LSH-based deduplication operator in Ray mode can deduplicate terabyte-sized datasets on 8 nodes with 1280 CPU cores in 3 hours.

+

+More details can be found in our paper, [Data-Juicer 2.0: Cloud-Scale Adaptive Data Processing for Foundation Models](arXiv_link_coming_soon).

+

+

+

+## Implementation and Optimizations

+

+### Ray Mode in Data-Juicer

+

+- For most implementations of Data-Juicer [operators](Operators.md), the core processing functions are engine-agnostic. Interoperability is primarily managed in [RayDataset](../data_juicer/core/ray_data.py) and [RayExecutor](../data_juicer/core/ray_executor.py), which are subclasses of the base `DJDataset` and `BaseExecutor`, respectively, and support both Ray [Tasks](https://docs.ray.io/en/latest/ray-core/tasks.html) and [Actors](https://docs.ray.io/en/latest/ray-core/actors.html).

+- The exception is the deduplication operators, which are challenging to scale in standalone mode. We provide these operators named as [`ray_xx_deduplicator`](../data_juicer/ops/deduplicator/).

+

+### Subset Splitting

+

+When dealing with tens of thousands of nodes but only a few dataset files, Ray would split the dataset files according to available resources and distribute the blocks across all nodes, incurring huge network communication costs and reduces CPU utilization. For more details, see [Ray's autodetect_parallelism](https://github.com/ray-project/ray/blob/2dbd08a46f7f08ea614d8dd20fd0bca5682a3078/python/ray/data/_internal/util.py#L201-L205) and [tuning output blocks for Ray](https://docs.ray.io/en/latest/data/performance-tips.html#tuning-output-blocks-for-read).

+

+This default execution plan can be quite inefficient especially for scenarios with large number of nodes. To optimize performance for such cases, we automatically splitting the original dataset into smaller files in advance, taking into consideration the features of Ray and Arrow. When users encounter such performance issues, they can utilize this feature or split the dataset according to their own preferences. In our auto-split strategy, the single file size is set to 128MB, and the result should ensure that the number of sub-files after splitting is at least twice the total number of CPU cores available in the cluster.

+

+### Streaming Reading of JSON Files

+

+Streaming reading of JSON files is a common requirement in data processing for foundation models, as many datasets are stored in JSONL format and in huge sizes.

+However, the current implementation in Ray Datasets, which is rooted in the underlying Arrow library (up to Ray version 2.40 and Arrow version 18.1.0), does not support streaming reading of JSON files.

+

+To address the lack of native support for streaming JSON data, we have developed a streaming loading interface and contributed an in-house [patch](https://github.com/modelscope/data-juicer/pull/515) for Apache Arrow ([PR to the repo](https://github.com/apache/arrow/pull/45084)). This patch helps alleviate Out-of-Memory issues. With this patch, Data-Juicer in Ray mode will, by default, use the streaming loading interface to load JSON files.

+Besides, streaming-read support for CSV and Parquet files is already enabled.

+

+

+### Deduplication

+

+An optimized MinHash-LSH-based Deduplicator is provided in Ray mode. We implement a multiprocess Union-Find set in Ray Actors and a load-balanced distributed algorithm, [BTS](https://ieeexplore.ieee.org/document/10598116), to complete equivalence class merging. This operator can deduplicate terabyte-sized datasets on 1280 CPU cores in 3 hours. Our ablation study shows 2x to 3x speedups with our dedicated optimizations for Ray mode compared to the vanilla version of this deduplication operator.

+

+## Performance Results

+

+### Data Processing with Varied Scales

+

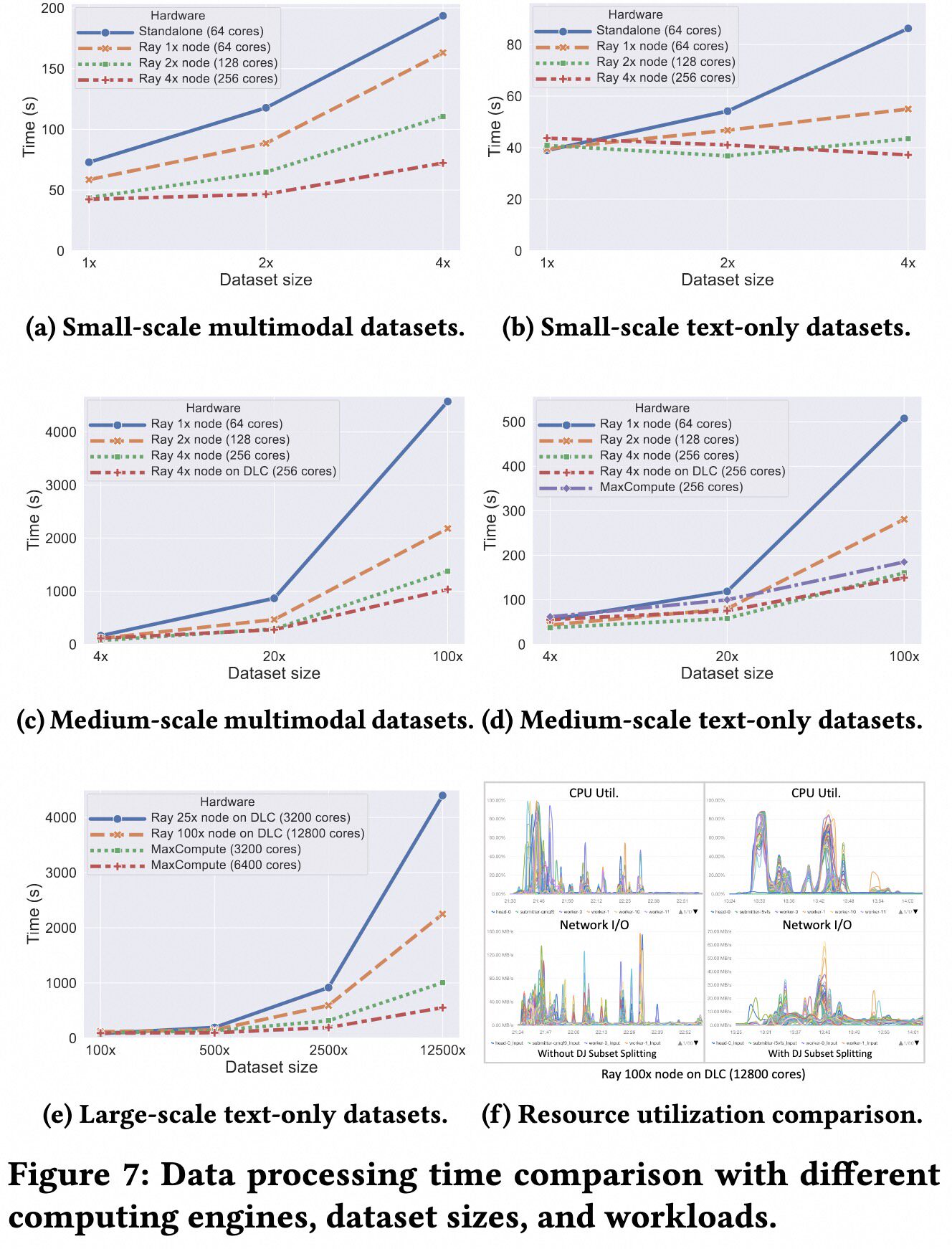

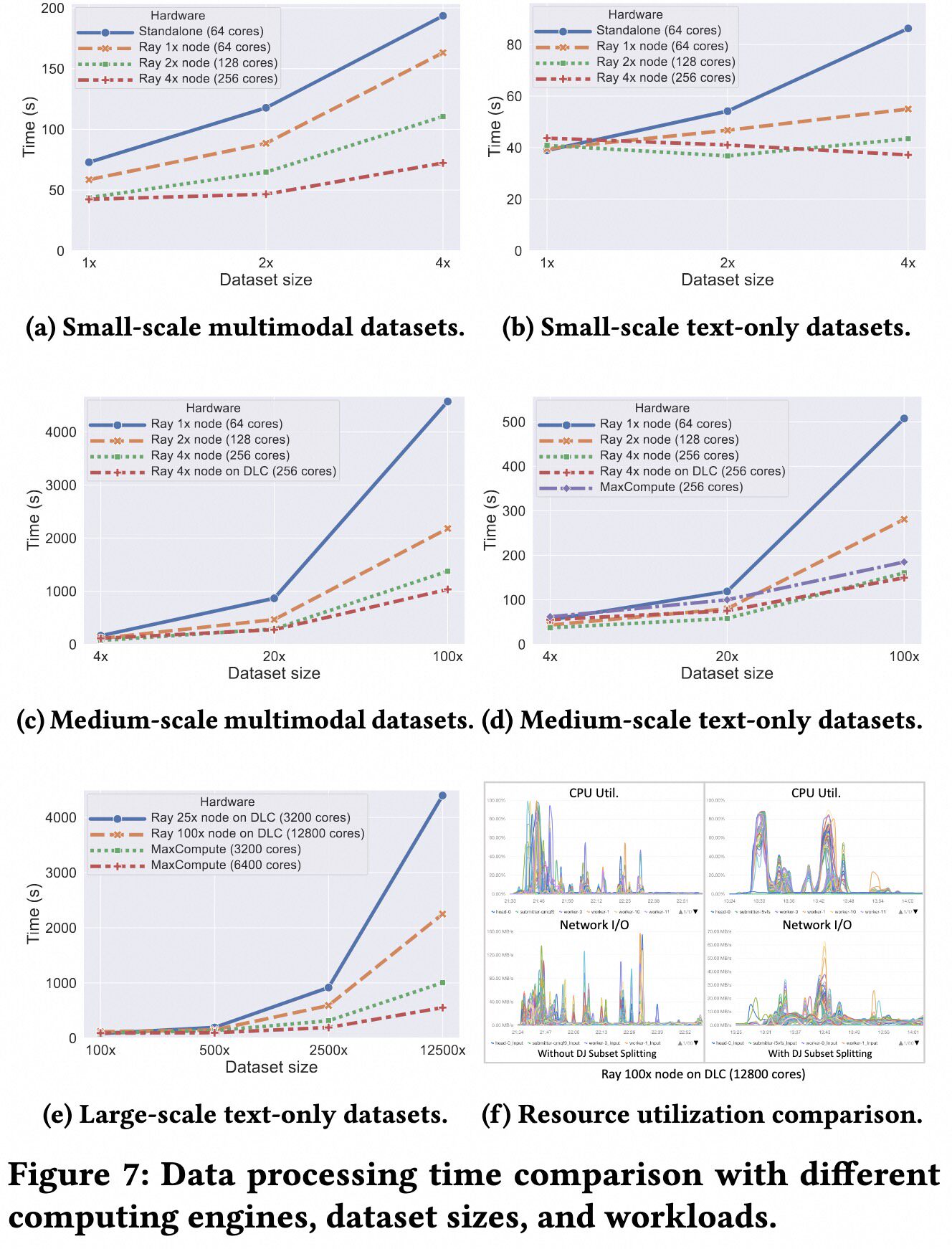

+We conducted experiments on datasets with billions of samples. We prepared a 560k-sample multimodal dataset and expanded it by different factors (1x to 125000x) to create datasets of varying sizes. The experimental results, shown in the figure below, demonstrate good scalability.

+

+

+

+### Distributed Deduplication on Large-Scale Datasets

+

+We tested the MinHash-based RayDeduplicator on datasets sized at 200GB, 1TB, and 5TB, using CPU counts ranging from 640 to 1280 cores. As the table below shows, when the data size increases by 5x, the processing time increases by 4.02x to 5.62x. When the number of CPU cores doubles, the processing time decreases to 58.9% to 67.1% of the original time.

+

+| # CPU | 200GB Time | 1TB Time | 5TB Time |

+|---------|------------|-----------|------------|

+| 4 * 160 | 11.13 min | 50.83 min | 285.43 min |

+| 8 * 160 | 7.47 min | 30.08 min | 168.10 min |

+

+## Quick Start

+

+Before starting, you should install Data-Juicer and its `dist` requirements:

+

+```shell

+pip install -v -e . # Install the minimal requirements of Data-Juicer

+pip install -v -e ".[dist]" # Include dependencies on Ray and other distributed libraries

+```

+

+Then start a Ray cluster (ref to the [Ray doc](https://docs.ray.io/en/latest/ray-core/starting-ray.html) for more details):

+

+```shell

+# Start a cluster as the head node

+ray start --head

+

+# (Optional) Connect to the cluster on other nodes/machines.

+ray start --address='{head_ip}:6379'

+```

+

+We provide simple demos in the directory `demos/process_on_ray/`, which includes two config files and two test datasets.

+

+```text

+demos/process_on_ray

+├── configs

+│ ├── demo.yaml

+│ └── dedup.yaml

+└── data

+ ├── demo-dataset.json

+ └── demo-dataset.jsonl

+```

+

+> [!Important]

+> If you run these demos on multiple nodes, you need to put the demo dataset to a shared disk (e.g. NAS) and export the result dataset to it as well by modifying the `dataset_path` and `export_path` in the config files.

+

+### Running Example of Ray Mode

+

+In the `demo.yaml` config file, we set the executor type to "ray" and specify an automatic Ray address.

+

+```yaml

+...

+dataset_path: './demos/process_on_ray/data/demo-dataset.jsonl'

+export_path: './outputs/demo/demo-processed'

+

+executor_type: 'ray' # Set the executor type to "ray"

+ray_address: 'auto' # Set an automatic Ray address

+...

+```

+

+Run the demo to process the dataset with 12 regular OPs:

+

+```shell

+# Run the tool from source

+python tools/process_data.py --config demos/process_on_ray/configs/demo.yaml

+

+# Use the command-line tool

+dj-process --config demos/process_on_ray/configs/demo.yaml

+```

+

+Data-Juicer will process the demo dataset with the demo config file and export the result datasets to the directory specified by the `export_path` argument in the config file.

+

+### Running Example of Distributed Deduplication

+

+In the `dedup.yaml` config file, we set the executor type to "ray" and specify an automatic Ray address.

+And we use a dedicated distributed version of MinHash Deduplicator to deduplicate the dataset.

+

+```yaml

+project_name: 'demo-dedup'

+dataset_path: './demos/process_on_ray/data/'

+export_path: './outputs/demo-dedup/demo-ray-bts-dedup-processed'

+

+executor_type: 'ray' # Set the executor type to "ray"

+ray_address: 'auto' # Set an automatic Ray address

+

+# process schedule

+# a list of several process operators with their arguments

+process:

+ - ray_bts_minhash_deduplicator: # a distributed version of minhash deduplicator

+ tokenization: 'character'

+```

+

+Run the demo to deduplicate the dataset:

+

+```shell

+# Run the tool from source

+python tools/process_data.py --config demos/process_on_ray/configs/dedup.yaml

+

+# Use the command-line tool

+dj-process --config demos/process_on_ray/configs/dedup.yaml

+```

+

+Data-Juicer will dedup the demo dataset with the demo config file and export the result datasets to the directory specified by the `export_path` argument in the config file.

diff --git a/docs/Distributed_ZH.md b/docs/Distributed_ZH.md

new file mode 100644

index 000000000..c1c6c24ce

--- /dev/null

+++ b/docs/Distributed_ZH.md

@@ -0,0 +1,150 @@

+# Data-Juicer 分布式数据处理

+

+## 概览

+

+Data-Juicer 支持基于 [Ray](https://github.com/ray-project/ray) 和阿里巴巴 [PAI](https://www.aliyun.com/product/bigdata/learn) 的大规模分布式数据处理。

+

+经过专门的设计后,几乎所有在单机模式下实现的 Data-Juicer 算子都可以无缝地运行在 Ray 的分布式模式下。对于大规模场景,我们继续进行了针对计算引擎的特定优化,例如用于平衡文件和进程数目的数据子集分割策略,针对 Ray 和 Apache Arrow的 JSON 文件流式 I/O 补丁等。

+

+作为参考,我们在 25 到 100 个阿里云节点上进行了实验,使用 Ray 模式下的 Data-Juicer 处理不同的数据集。在 6,400 个 CPU 核上处理包含 700 亿条样本的数据集只需要花费 2 小时,在 3,200 个 CPU 核上处理包含 70 亿条样本的数据集只需要花费 0.45 小时。此外,在 Ray 模式下,对 TB 大小级别的数据集,Data-Juicer 的 MinHash-LSH 去重算子在 1,280 个 CPU 核的 8 节点集群上进行去重只需 3 小时。

+

+更多细节请参考我们的论文:[Data-Juicer 2.0: Cloud-Scale Adaptive Data Processing for Foundation Models](arXiv_link_coming_soon) 。

+

+

+

+## 实现与优化

+

+### Data-Juicer 的 Ray 处理模式

+

+- 对于 Data-Juicer 的大部分[算子](Operators.md)实现,其核心处理函数是引擎无关的。[RayDataset](../data_juicer/core/ray_data.py) 和 [RayExecutor](../data_juicer/core/ray_executor.py) 封装了与Ray引擎的具体互操作,它们分别是基类 `DJDataset` 和 `BaseExecutor` 的子类,并且都支持 Ray [Tasks](https://docs.ray.io/en/latest/ray-core/tasks.html) 和 [Actors](https://docs.ray.io/en/latest/ray-core/actors.html) 。

+- 其中,去重算子是例外。它们在单机模式下很难规模化。因此我们提供了针对它们的 Ray 优化版本算子,并以特殊前缀开头:[`ray_xx_deduplicator`](../data_juicer/ops/deduplicator/) 。

+

+### 数据子集分割

+

+当在上万个节点中处理仅有若干个文件的数据集时, Ray 会根据可用资源分割数据集文件,并将它们分发到所有节点上,这可能带来极大的网络通信开销并减少 CPU 利用率。更多细节可以参考文档 [Ray's autodetect_parallelism](https://github.com/ray-project/ray/blob/2dbd08a46f7f08ea614d8dd20fd0bca5682a3078/python/ray/data/_internal/util.py#L201-L205) 和 [tuning output blocks for Ray](https://docs.ray.io/en/latest/data/performance-tips.html#tuning-output-blocks-for-read) 。

+

+这种默认执行计划可能非常低效,尤其是在节点数量较多的情况下。为了优化此类情况的性能,我们考虑到 Ray 和 Arrow 的特性,提前将原始数据集自动拆分为较小的文件。当用户遇到此类性能问题时,他们可以利用此功能或根据偏好自己拆分数据集。在我们的自动拆分策略中,单个文件大小设置为 128MB,且结果应确保 拆分后的子文件数量 至少是 集群中可用CPU核心总数 的两倍。

+

+

+### JSON 文件的流式读取

+

+为了解决 Ray Dataset 类底层框架 Arrow 对流式读取 JSON 数据的原生支持的缺失,我们开发了一个流式载入的接口并贡献到了一个针对 Apache Arrow 的内部 [补丁](https://github.com/modelscope/data-juicer/pull/515)( [相关 PR](https://github.com/apache/arrow/pull/45084) ) 。这个补丁可以缓解内存不够的问题。

+

+

+流式读取 JSON 文件是基础模型数据处理中的常见要求,因为许多数据集都以 JSONL 格式存储,并且尺寸巨大。

+但是,Ray Datasets 中当前的实现不支持流式读取 JSON 文件,根因来源于其底层 Arrow 库(截至 Ray 版本 2.40 和 Arrow 版本 18.1.0)。

+

+为了解决不支持流式 JSON 数据的原生读取问题,我们开发了一个流式加载接口,并为 Apache Arrow 贡献了一个第三方 [补丁](https://github.com/modelscope/data-juicer/pull/515)([PR 到 repo](https://github.com/apache/arrow/pull/45084))。这将有助于缓解内存不足问题。使用此补丁后, Data-Juicer 的Ray模式将默认使用流式加载接口加载 JSON 文件。此外,如果输入变为 CSV 和 Parquet 文件,Ray模式下流式读取已经会自动开启。

+

+### 去重

+

+在 Ray 模式下,我们提供了一个优化过的基于 MinHash-LSH 的去重算子。我们使用 Ray Actors 实现了一个多进程的并查集和一个负载均衡的分布式算法 [BTS](https://ieeexplore.ieee.org/document/10598116) 来完成等价类合并操作。这个算子在 1,280 个CPU核上对 TB 大小级别的数据集去重只需要 3 个小时。我们的消融实验还表明相比于这个去重算子的初始实现版本,这些专门的优化项可以带来 2-3 倍的提速。

+

+## 性能结果

+

+### 不同数据规模的数据处理

+

+我们在十亿样本规模的数据集上进行了实验。我们先准备了一个 56 万条样本的多模态数据集,并用不同的倍数(1-125,000倍)将其扩展来创建不同大小的数据集。下图的实验结果展示出了 Data-Juicer 的高扩展性。

+

+

+

+### 大规模数据集分布式去重

+

+我们在 200GB、1TB、5TB 的数据集上测试了我们的基于 MinHash 的 Ray 去重算子,测试机器的 CPU 核数从 640 核到 1280 核。如下表所示,当数据集大小增长 5 倍,处理时间增长 4.02 到 5.62 倍。当 CPU 核数翻倍,处理时间较原来减少了 58.9% 到 67.1%。

+

+| CPU 核数 | 200GB 耗时 | 1TB 耗时 | 5TB 耗时 |

+|---------|----------|----------|-----------|

+| 4 * 160 | 11.13 分钟 | 50.83 分钟 | 285.43 分钟 |

+| 8 * 160 | 7.47 分钟 | 30.08 分钟 | 168.10 分钟 |

+

+## 快速开始

+

+在开始前,你应该安装 Data-Juicer 以及它的 `dist` 依赖需求:

+

+```shell

+pip install -v -e . # 安装 Data-Juicer 的最小依赖需求

+pip install -v -e ".[dist]" # 包括 Ray 以及其他分布式相关的依赖库

+```

+

+然后启动一个 Ray 集群(参考 [Ray 文档](https://docs.ray.io/en/latest/ray-core/starting-ray.html) ):

+

+```shell

+# 启动一个集群并作为头节点

+ray start --head

+

+# (可选)在其他节点或机器上连接集群

+ray start --address='{head_ip}:6379'

+```

+

+我们在目录 `demos/process_on_ray/` 中准备了简单的例子,包括 2 个配置文件和 2 个测试数据集。

+

+```text

+demos/process_on_ray

+├── configs

+│ ├── demo.yaml

+│ └── dedup.yaml

+└── data

+ ├── demo-dataset.json

+ └── demo-dataset.jsonl

+```

+

+> [!Important]

+> 如果你要在多个节点上运行这些例子,你需要将示例数据集放置与一个共享磁盘(如 NAS)上,并且将结果数据集导出到那里。你可以通过修改配置文件中的 `dataset_path` 和 `export_path` 参数来实现。

+

+### 运行 Ray 模式样例

+

+在配置文件 `demo.yaml` 中,我们将执行器类型设置为 "ray" 并且指定了自动的 Ray 地址。

+

+```yaml

+...

+dataset_path: './demos/process_on_ray/data/demo-dataset.jsonl'

+export_path: './outputs/demo/demo-processed'

+

+executor_type: 'ray' # 将执行器类型设置为 "ray"

+ray_address: 'auto' # 设置为自动 Ray 地址

+...

+```

+

+运行这个例子,以使用 12 个常规算子处理测试数据集:

+

+```shell

+# 从源码运行处理工具

+python tools/process_data.py --config demos/process_on_ray/configs/demo.yaml

+

+# 使用命令行工具

+dj-process --config demos/process_on_ray/configs/demo.yaml

+```

+

+Data-Juicer 会使用示例配置文件处理示例数据集,并将结果数据集导出到配置文件中 `export_path` 参数指定的目录中。

+

+### 运行分布式去重样例

+

+在配置文件 `dedup.yaml` 中,我们将执行器类型设置为 "ray" 并且指定了自动的 Ray 地址。我们使用了 MinHash 去重算子专门的分布式版本来对数据集去重。

+

+```yaml

+project_name: 'demo-dedup'

+dataset_path: './demos/process_on_ray/data/'

+export_path: './outputs/demo-dedup/demo-ray-bts-dedup-processed'

+

+executor_type: 'ray' # 将执行器类型设置为 "ray"

+ray_address: 'auto' # 设置为自动 Ray 地址

+

+# process schedule

+# a list of several process operators with their arguments

+process:

+ - ray_bts_minhash_deduplicator: # minhash 去重算子的分布式版本

+ tokenization: 'character'

+```

+

+运行该实例来对数据集去重:

+

+```shell

+# 从源码运行处理工具

+python tools/process_data.py --config demos/process_on_ray/configs/dedup.yaml

+

+# 使用命令行工具

+dj-process --config demos/process_on_ray/configs/dedup.yaml

+```

+

+Data-Juicer 会使用示例配置文件对示例数据集去重,并将结果数据集导出到配置文件中 `export_path` 参数指定的目录中。

diff --git a/docs/Operators.md b/docs/Operators.md

index 69d09d16e..fc588562d 100644

--- a/docs/Operators.md

+++ b/docs/Operators.md

@@ -1,226 +1,244 @@

-# Operator Schemas

-Operators are a collection of basic processes that assist in data modification, cleaning, filtering, deduplication, etc. We support a wide range of data sources and file formats, and allow for flexible extension to custom datasets.

+# Operator Schemas 算子提要

-This page offers a basic description of the operators (OPs) in Data-Juicer. Users can refer to the [API documentation](https://modelscope.github.io/data-juicer/) for the specific parameters of each operator. Users can refer to and run the unit tests (`tests/ops/...`) for [examples of operator-wise usage](../tests/ops) as well as the effects of each operator when applied to built-in test data samples.

+Operators are a collection of basic processes that assist in data modification,

+cleaning, filtering, deduplication, etc. We support a wide range of data

+sources and file formats, and allow for flexible extension to custom datasets.

-## Overview

+算子 (Operator) 是协助数据修改、清理、过滤、去重等基本流程的集合。我们支持广泛的数据来源和文件格式,并支持对自定义数据集的灵活扩展。

-The operators in Data-Juicer are categorized into 7 types.

+This page offers a basic description of the operators (OPs) in Data-Juicer.

+Users can refer to the

+[API documentation](https://modelscope.github.io/data-juicer/) for the specific

+parameters of each operator. Users can refer to and run the unit tests

+(`tests/ops/...`) for [examples of operator-wise usage](../tests/ops) as well

+as the effects of each operator when applied to built-in test data samples.

-| Type | Number | Description |

-|-----------------------------------|:------:|-------------------------------------------------|

-| [ Formatter ]( #formatter ) | 9 | Discovers, loads, and canonicalizes source data |

-| [ Mapper ]( #mapper ) | 70 | Edits and transforms samples |

-| [ Filter ]( #filter ) | 44 | Filters out low-quality samples |

-| [ Deduplicator ]( #deduplicator ) | 8 | Detects and removes duplicate samples |

-| [ Selector ]( #selector ) | 5 | Selects top samples based on ranking |

-| [ Grouper ]( #grouper ) | 3 | Group samples to batched samples |

-| [ Aggregator ]( #aggregator ) | 4 | Aggregate for batched samples, such as summary or conclusion |

+这个页面提供了OP的基本描述,用户可以参考[API文档](https://modelscope.github.io/data-juicer/)更细致了解每个

+OP的具体参数,并且可以查看、运行单元测试 (`tests/ops/...`),来体验[各OP的用法示例](../tests/ops)以及每个OP作用于内置

+测试数据样本时的效果。

-All the specific operators are listed below, each featured with several capability tags.

+## Overview 概览

-* Domain Tags

- - : general purpose

- - : specific to LaTeX source files

- - : specific to programming codes

- - : closely related to financial sector

+The operators in Data-Juicer are categorized into 7 types.

+Data-Juicer 中的算子分为以下 7 种类型。

+

+| Type 类型 | Number 数量 | Description 描述 |

+|------|:------:|-------------|

+| [aggregator](#aggregator) | 4 | Aggregate for batched samples, such as summary or conclusion. 对批量样本进行汇总,如得出总结或结论。 |

+| [deduplicator](#deduplicator) | 10 | Detects and removes duplicate samples. 识别、删除重复样本。 |

+| [filter](#filter) | 44 | Filters out low-quality samples. 过滤低质量样本。 |

+| [formatter](#formatter) | 9 | Discovers, loads, and canonicalizes source data. 发现、加载、规范化原始数据。 |

+| [grouper](#grouper) | 3 | Group samples to batched samples. 将样本分组,每一组组成一个批量样本。 |

+| [mapper](#mapper) | 71 | Edits and transforms samples. 对数据样本进行编辑和转换。 |

+| [selector](#selector) | 5 | Selects top samples based on ranking. 基于排序选取高质量样本。 |

+

+All the specific operators are listed below, each featured with several capability tags.

+下面列出所有具体算子,每种算子都通过多个标签来注明其主要功能。

* Modality Tags

- - : specific to text

- - : specific to images

- - : specific to audios

- - : specific to videos

- - : specific to multimodal

-* Language Tags

- - : English

- - : Chinese

+ - : process text data specifically. 专用于处理文本。

+ - : process image data specifically. 专用于处理图像。

+ - : process audio data specifically. 专用于处理音频。

+ - : process video data specifically. 专用于处理视频。

+ - : process multimodal data. 用于处理多模态数据。

* Resource Tags

- - : only requires CPU resource (default)

- - : requires GPU/CUDA resource as well

-

-

-## Formatter

-

-| Operator | Tags | Description | Source code | Unit tests |

-|-------------------|-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|---------------------------------------------------------------------------------------------------|----------------------------------------------------|----------------------------------------------------|

-| local_formatter |    | Prepares datasets from local files | [code](../data_juicer/format/formatter.py) | [tests](../tests/format/test_unify_format.py) |

-| remote_formatter |    | Prepares datasets from remote (e.g., HuggingFace) | [code](../data_juicer/format/formatter.py) | [tests](../tests/format/test_unify_format.py) |

-| csv_formatter |    | Prepares local `.csv` files | [code](../data_juicer/format/csv_formatter.py) | [tests](../tests/format/test_csv_formatter.py) |

-| tsv_formatter |    | Prepares local `.tsv` files | [code](../data_juicer/format/tsv_formatter.py) | [tests](../tests/format/test_tsv_formatter.py) |

-| json_formatter |    | Prepares local `.json`, `.jsonl`, `.jsonl.zst` files | [code](../data_juicer/format/json_formatter.py) | - |

-| parquet_formatter |    | Prepares local `.parquet` files | [code](../data_juicer/format/parquet_formatter.py) | [tests](../tests/format/test_parquet_formatter.py) |

-| text_formatter |    | Prepares other local text files ([complete list](../data_juicer/format/text_formatter.py#L63,73)) | [code](../data_juicer/format/text_formatter.py) | - |

-| empty_formatter |  | Prepares an empty dataset | [code](../data_juicer/format/empty_formatter.py) | [tests](../tests/format/test_empty_formatter.py) |

-| mixture_formatter |    | Handles a mixture of all the supported local file types | [code](../data_juicer/format/mixture_formatter.py) | [tests](../tests/format/test_mixture_formatter.py) |

-

-## Mapper

-

-| Operator | Tags | Description | Source code | Unit tests |

-|------------------------------------------------|-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|------------------------------------------------------------------------------------|------------------------------------------------------------------------------------|

-| audio_ffmpeg_wrapped_mapper |  | Simple wrapper to run a FFmpeg audio filter | [code](../data_juicer/ops/mapper/audio_ffmpeg_wrapped_mapper.py) | [tests](../tests/ops/mapper/test_audio_ffmpeg_wrapped_mapper.py) |

-| calibrate_qa_mapper |     | Calibrate question-answer pairs based on reference text | [code](../data_juicer/ops/mapper/calibrate_qa_mapper.py) | [tests](../tests/ops/mapper/test_calibrate_qa_mapper.py) |

-| calibrate_query_mapper |     | Calibrate query in question-answer pairs based on reference text | [code](../data_juicer/ops/mapper/calibrate_query_mapper.py) | [tests](../tests/ops/mapper/test_calibrate_query_mapper.py) |

-| calibrate_response_mapper |     | Calibrate response in question-answer pairs based on reference text | [code](../data_juicer/ops/mapper/calibrate_response_mapper.py) | [tests](../tests/ops/mapper/test_calibrate_response_mapper.py) |

-| chinese_convert_mapper |    | Converts Chinese between Traditional Chinese, Simplified Chinese and Japanese Kanji (by [opencc](https://github.com/BYVoid/OpenCC)) | [code](../data_juicer/ops/mapper/chinese_convert_mapper.py) | [tests](../tests/ops/mapper/test_chinese_convert_mapper.py) |

-| clean_copyright_mapper |     | Removes copyright notice at the beginning of code files (must contain the word *copyright*) | [code](../data_juicer/ops/mapper/clean_copyright_mapper.py) | [tests](../tests/ops/mapper/test_clean_copyright_mapper.py) |

-| clean_email_mapper |     | Removes email information | [code](../data_juicer/ops/mapper/clean_email_mapper.py) | [tests](../tests/ops/mapper/test_clean_email_mapper.py) |

-| clean_html_mapper |     | Removes HTML tags and returns plain text of all the nodes | [code](../data_juicer/ops/mapper/clean_html_mapper.py) | [tests](../tests/ops/mapper/test_clean_html_mapper.py) |

-| clean_ip_mapper |     | Removes IP addresses | [code](../data_juicer/ops/mapper/clean_ip_mapper.py) | [tests](../tests/ops/mapper/test_clean_ip_mapper.py) |

-| clean_links_mapper |      | Removes links, such as those starting with http or ftp | [code](../data_juicer/ops/mapper/clean_links_mapper.py) | [tests](../tests/ops/mapper/test_clean_links_mapper.py) |

-| dialog_intent_detection_mapper |     | Mapper to generate user's intent labels in dialog. | [code](../data_juicer/ops/mapper/dialog_intent_detection_mapper.py) | [tests](../tests/ops/mapper/test_dialog_intent_detection_mapper.py) |

-| dialog_sentiment_detection_mapper |     | Mapper to generate user's sentiment labels in dialog. | [code](../data_juicer/ops/mapper/dialog_sentiment_detection_mapper.py) | [tests](../tests/ops/mapper/test_dialog_sentiment_detection_mapper.py) |

-| dialog_sentiment_intensity_mapper |     | Mapper to predict user's sentiment intensity (from -5 to 5 in default prompt) in dialog. | [code](../data_juicer/ops/mapper/dialog_sentiment_intensity_mapper.py) | [tests](../tests/ops/mapper/test_dialog_sentiment_intensity_mapper.py) |

-| dialog_topic_detection_mapper |     | Mapper to generate user's topic labels in dialog. | [code](../data_juicer/ops/mapper/dialog_topic_detection_mapper.py) | [tests](../tests/ops/mapper/test_dialog_topic_detection_mapper.py) |