go-intelowl is a client library/SDK that allows developers to easily automate and integrate IntelOwl with their own set of tools!

Use go get to retrieve the SDK to add it to your GOPATH workspace, or project's Go module dependencies.

This library was built with ease of use in mind! Here are some quick examples to get you started. If you need more examples, see the examples directory.

+To start using the go-intelowl library you first need to import it:

Construct a new IntelOwlClient, then use the various services to easily access different parts of IntelOwl's REST API. Here's an example of getting all jobs:

clientOptions := gointelowl.IntelOwlClientOptions{
    Url:   "your-cool-URL-goes-here",
    Token: "your-super-secret-token-goes-here",
    // This is optional
    Certificate: "your-optional-certificate-goes-here",
}

intelowl := gointelowl.NewIntelOwlClient(
    &clientOptions,
    nil, // trailing comma is required in a multi-line call
)

ctx := context.Background()

// returns *[]Jobs or an IntelOwlError!
jobs, err := intelowl.JobService.List(ctx)

For easy configuration and setup we opted for options structs, which let you customize the client API or a service endpoint to your liking! For more information go here. Here's a quick example!

// ...Making the client and context!

// := declares tagOptions; a plain = would not compile here
tagOptions := gointelowl.TagParams{
    Label: "NEW TAG",
    Color: "#ffb703",
}

createdTag, err := intelowl.TagService.Create(ctx, tagOptions)
if err != nil {
    fmt.Println(err)
} else {
    fmt.Println(createdTag)
}

The examples directory contains a couple of clear examples, one of which is partially listed here as well:

package main

import (
    "context" // needed for context.Background() below
    "fmt"

    "github.com/intelowlproject/go-intelowl/gointelowl"
)

func main() {
    intelowlOptions := gointelowl.IntelOwlClientOptions{
        Url:         "your-cool-url-goes-here",
        Token:       "your-super-secret-token-goes-here",
        Certificate: "your-optional-certificate-goes-here",
    }

    client := gointelowl.NewIntelOwlClient(
        &intelowlOptions,
        nil,
    )

    ctx := context.Background()

    // Get User details!
    user, err := client.UserService.Access(ctx)
    if err != nil {
        fmt.Println("err")
        fmt.Println(err)
    } else {
        fmt.Println("USER Details")
        fmt.Println(*user)
    }
}

+For complete usage of go-intelowl, see the full package docs.

If you want to follow the updates, discuss, contribute, or just chat, then please join our Slack channel. We'd love to hear your feedback!

+Licensed under the GNU AFFERO GENERAL PUBLIC LICENSE.

+You need a valid API key to interact with the IntelOwl server.

You can get an API key by doing the following:

API Access/ Sessions

Keys should be created from the admin interface of IntelOwl: go to the Durin section (click on Auth tokens) and generate a key there.


enrichment

Handle enrichment requests for a specific observable (domain or IP address).

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| request | | The incoming request object containing query parameters. | required |

Returns:

| Name | Type | Description |
|---|---|---|
| Response | | A JSON response indicating whether the observable was found, and if so, the corresponding IOC. |

docs/Submodules/GreedyBear/api/views.py

feeds

Handle requests for IOC feeds with specific parameters and format the response accordingly.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| request | | The incoming request object. | required |
| feed_type | str | Type of feed (e.g., log4j, cowrie, etc.). | required |
| attack_type | str | Type of attack (e.g., all, specific attack types). | required |
| age | str | Age of the data to filter (e.g., recent, persistent). | required |
| format_ | str | Desired format of the response (e.g., json, csv, txt). | required |

Returns:

| Name | Type | Description |
|---|---|---|
| Response | | The HTTP response with formatted IOC data. |

docs/Submodules/GreedyBear/api/views.py

feeds_pagination

Handle requests for paginated IOC feeds based on query parameters.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| request | | The incoming request object. | required |

Returns:

| Name | Type | Description |
|---|---|---|
| Response | | The paginated HTTP response with IOC data. |

docs/Submodules/GreedyBear/api/views.py

Statistics

Bases: ViewSet

A viewset for viewing and editing statistics related to feeds and enrichment data.

Provides actions to retrieve statistics about the sources and downloads of feeds, as well as statistics on enrichment data.

docs/Submodules/GreedyBear/api/views.py

__aggregation_response_static_ioc(annotations)

Helper method to generate IOC response based on annotations.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| annotations | dict | Dictionary containing the annotations for the query. | required |

Returns:

| Name | Type | Description |
|---|---|---|
| Response | Response | A JSON response containing the aggregated IOC data. |

docs/Submodules/GreedyBear/api/views.py

__aggregation_response_static_statistics(annotations)

Helper method to generate statistics response based on annotations.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| annotations | dict | Dictionary containing the annotations for the query. | required |

Returns:

| Name | Type | Description |
|---|---|---|
| Response | Response | A JSON response containing the aggregated statistics. |

docs/Submodules/GreedyBear/api/views.py

__parse_range(request) (staticmethod)

Parse the range parameter from the request query string to determine the time range for the query.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| request | | The incoming request object. | required |

Returns:

| Name | Type | Description |
|---|---|---|
| tuple | | A tuple containing the delta time and basis for the query range. |

docs/Submodules/GreedyBear/api/views.py

enrichment(request, pk=None)

Retrieve enrichment statistics, including the number of sources and requests.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| request | | The incoming request object. | required |
| pk | str | The type of statistics to retrieve (e.g., "sources", "requests"). | None |

Returns:

| Name | Type | Description |
|---|---|---|
| Response | | A JSON response containing the requested statistics. |

docs/Submodules/GreedyBear/api/views.py

feeds(request, pk=None)

Retrieve feed statistics, including the number of sources and downloads.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| request | | The incoming request object. | required |
| pk | str | The type of statistics to retrieve (e.g., "sources", "downloads"). | None |

Returns:

| Name | Type | Description |
|---|---|---|
| Response | | A JSON response containing the requested statistics. |

docs/Submodules/GreedyBear/api/views.py

feeds_types(request)

Retrieve statistics for different types of feeds, including Log4j, Cowrie, and general honeypots.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| request | | The incoming request object. | required |

Returns:

| Name | Type | Description |
|---|---|---|
| Response | | A JSON response containing the feed type statistics. |

docs/Submodules/GreedyBear/api/views.py

general_honeypot_list

Retrieve a list of all general honeypots, optionally filtering by active status.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| request | | The incoming request object containing query parameters. | required |

Returns:

| Name | Type | Description |
|---|---|---|
| Response | | A JSON response containing the list of general honeypots. |

docs/Submodules/GreedyBear/api/views.py


+Please refer to IntelOwl Documentation for everything missing here.

+GreedyBear welcomes contributors from anywhere and from any kind of education or skill level. We strive to create a community of developers that is welcoming, friendly and right.

+For this reason it is important to follow some easy rules based on a simple but important concept: Respect.

+Keeping to a consistent code style throughout the project makes it easier to contribute and collaborate. We make use of psf/black and isort for code formatting and flake8 for style guides.

To start with the development setup, make sure you have gone through all the steps in the Installation Guide and installed the project properly.

+Please create a new branch based on the develop branch that contains the most recent changes. This is mandatory.

+git checkout -b myfeature develop

Then we strongly suggest configuring pre-commit to run the linters on every commit you make:

+# create virtualenv to host pre-commit installation

+python3 -m venv venv

+source venv/bin/activate

+# from the project base directory

+pip install pre-commit

+pre-commit install -c .github/.pre-commit-config.yaml

+Remember that whenever you make changes, you need to rebuild the docker image to see the reflected changes.

+If you made any changes to an existing model/serializer/view, please run the following command to generate a new version of the API schema and docs:

+docker exec -it greedybear_uwsgi python manage.py spectacular --file docs/source/schema.yml && make html

+To start the frontend in "develop" mode, you can execute the startup npm script within the folder frontend:

cd frontend/

+# Install

+npm i

+# Start

+DANGEROUSLY_DISABLE_HOST_CHECK=true npm start

+# See https://create-react-app.dev/docs/proxying-api-requests-in-development/#invalid-host-header-errors-after-configuring-proxy for why we use that flag in development mode

+Most of the time you would need to test the changes you made together with the backend. In that case, you would need to run the backend locally too:

+ +The GreedyBear Frontend is tightly linked to the certego-ui library. Most of the React components are imported from there. Because of this, it may happen that, during development, you would need to work on that library too.

To install the certego-ui library, please take a look at npm link and remember to start certego-ui without installing peer dependencies (to avoid conflicts with GreedyBear dependencies):

git clone https://github.com/certego/certego-ui.git

# change directory to the folder where you have cloned the library

+cd certego-ui/

+# install, without peer deps (to use packages of GreedyBear)

+npm i --legacy-peer-deps

+# create link to the project (this will globally install this package)

+sudo npm link

+# compile the library

+npm start

+Then, open another command line tab, create a link in the frontend to the certego-ui and re-install and re-start the frontend application (see previous section):

This trick lets you see every change you make in certego-ui reflected directly in the running frontend application.

The certego-ui application comes with an example project that showcases the components that you can re-use and import to other projects, like GreedyBear:

# To have the Example application working correctly, be sure to have installed `certego-ui` *without* the `--legacy-peer-deps` option and having it started in another command line

+cd certego-ui/

+npm i

+npm start

+# go to another tab

+cd certego-ui/example/

+npm i

+npm start

+Please create pull requests only for the branch develop. That code will be pushed to master only on a new release.

+Also remember to pull the most recent changes available in the develop branch before submitting your PR. If your PR has merge conflicts caused by this behavior, it won't be accepted.

+You have to install pre-commit to have your code adjusted and fixed with the available linters:

Once that is done, you won't have to think about linters anymore.

+All the frontend tests must be run from the folder frontend.

The tests can contain log messages; you can suppress them with the environment variable SUPPRESS_JEST_LOG=True.

npm test -- -t '<describeString> <testString>'

+// example

+npm test -- -t "Login component User login"

If you get any errors, fix them. Once you have made sure that everything is working fine, please squash all of your commits into a single one and finally create a pull request.


For requirements, please refer to the IntelOwl requirements, which are the same.

Note that GreedyBear needs a running ElasticSearch instance of a T-POT to function. In docker/env_file, set the variable ELASTIC_ENDPOINT to the URL of your T-POT's Elasticsearch.

If you don't have one, you can make the following changes to have GreedyBear spin up its own ElasticSearch instance. (Care! This option requires enough RAM to run the additional containers. At least 16GB is suggested.)

- In docker/env_file, set the variable ELASTIC_ENDPOINT to http://elasticsearch:9200.
- Add :docker/elasticsearch.yml to the last defined COMPOSE_FILE variable, or uncomment the # local development with elasticsearch container block in the .env file.

Start by cloning the project

+# clone the Greedybear project repository

+git clone https://github.com/honeynet/GreedyBear

+cd GreedyBear/

+

+# construct environment files from templates

+cp .env_template .env

+cd docker/

+cp env_file_template env_file

+cp env_file_postgres_template env_file_postgres

+Now you can start by building the image using docker-compose and run the project.

+# build the image locally

+docker-compose build

+

+# start the app

+docker-compose up

+

+# now the app is running on http://localhost:80

+

+# shut down the application

+docker-compose down

+Note: To create a superuser run the following:

The app administrator can enable/disable the extraction of source IPs for specific honeypots from the Django Admin. This is used for honeypots that are not specifically implemented to extract additional information (so not Log4Pot and Cowrie).

+In the env_file, configure different variables as explained below.

Required variables to set:

- DEFAULT_FROM_EMAIL: email address used for automated correspondence from the site manager (example: noreply@mydomain.com)
- DEFAULT_EMAIL: email address used for correspondence with users (example: info@mydomain.com)
- EMAIL_HOST: the host to use for sending email with SMTP
- EMAIL_HOST_USER: username to use for the SMTP server defined in EMAIL_HOST
- EMAIL_HOST_PASSWORD: password to use for the SMTP server defined in EMAIL_HOST. This setting is used in conjunction with EMAIL_HOST_USER when authenticating to the SMTP server.
- EMAIL_PORT: port to use for the SMTP server defined in EMAIL_HOST.
- EMAIL_USE_TLS: whether to use an explicit TLS (secure) connection when talking to the SMTP server, generally used on port 587.
- EMAIL_USE_SSL: whether to use an implicit TLS (secure) connection when talking to the SMTP server, generally used on port 465.

Optional configuration:

- SLACK_TOKEN: Slack token of your Slack application that will be used to send/receive notifications
- DEFAULT_SLACK_CHANNEL: ID of the Slack channel you want to post the message to

GreedyBear leverages a Python client for interacting with ElasticSearch, and that client must be at the same major version as the related T-POT ElasticSearch instance. This means that there could be problems if those versions do not match.

The client version currently installed is 8.15.0, which allows running T-POT versions from 22.04.0 to 24.04.0 without any problems (and some later ones; we regularly check T-POT releases but we could miss one or two here).

If you want compatibility with previous versions, you need to change the elasticsearch-dsl version here and rebuild the project locally.

If you make some code changes and would like to rebuild the project, follow these steps:

- Make sure your .env file has a COMPOSE_FILE variable which mounts the docker/local.override.yml compose file.
- Run docker-compose build to build the new docker image.
- Start the containers with docker-compose up.

To update the project with the most recent available code you have to follow these steps:

+$ cd <your_greedy_bear_directory> # go into the project directory

+$ git pull # pull new repository changes

+$ docker pull intelowlproject/greedybear:prod # pull new docker images

+$ docker-compose down # stop and destroy the currently running GreedyBear containers

+$ docker-compose up # restart the GreedyBear application


+The project goal is to extract data of the attacks detected by a TPOT or a cluster of them and to generate some feeds that can be used to prevent and detect attacks.

There are public feeds provided by The Honeynet Project on this site: greedybear.honeynet.org. Example

To check all the available feeds, please refer to our usage guide.

+Please do not perform too many requests to extract feeds or you will be banned.

+If you want to be updated regularly, please download the feeds only once every 10 minutes (this is the time between each internal update).
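One way to respect the 10-minute interval in a client script is to track the time of the last download and refuse earlier attempts. The following is a minimal sketch, not part of GreedyBear: the `FeedThrottle` class and its method names are hypothetical, and the actual download call is left to the caller.

```python
import time
from typing import Callable, Optional

# The 10-minute interval between internal feed updates stated above.
MIN_INTERVAL_SECONDS = 10 * 60


class FeedThrottle:
    """Hypothetical helper: remembers the last download and blocks earlier retries."""

    def __init__(
        self,
        min_interval: float = MIN_INTERVAL_SECONDS,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._min_interval = min_interval
        self._clock = clock  # injectable so the logic can be tested without sleeping
        self._last_download: Optional[float] = None

    def seconds_until_allowed(self) -> float:
        """Return how long to wait before the next download (0.0 if allowed now)."""
        if self._last_download is None:
            return 0.0
        elapsed = self._clock() - self._last_download
        return max(0.0, self._min_interval - elapsed)

    def try_acquire(self) -> bool:
        """Record a download and return True only if the interval has elapsed."""
        if self.seconds_until_allowed() > 0:
            return False
        self._last_download = self._clock()
        return True
```

A script would call `try_acquire()` before each request and skip the download (or sleep for `seconds_until_allowed()`) when it returns False.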


+Since Greedybear v1.1.0 we added a Registration Page that can be used to manage Registration requests when providing GreedyBear as a Service.

After a user registers, an email is sent to the user to verify their email address. If necessary, there are buttons on the login page to resend the verification email and to reset the password.

+Once the user has verified their email, they would be manually vetted before being allowed to use the GreedyBear platform. The registration requests would be handled in the Django Admin page by admins. +If you have GreedyBear deployed on an AWS instance you can use the SES service.

+In a development environment the emails that would be sent are written to the standard output.

+If you like, you could use Amazon SES for sending automated emails.

+First, you need to configure the environment variable AWS_SES to True to enable it.

+Then you have to add some credentials for AWS: if you have GreedyBear deployed on the AWS infrastructure, you can use IAM credentials:

+to allow that just set AWS_IAM_ACCESS to True. If that is not the case, you have to set both AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY.

Additionally, if you are not using the default AWS region of us-east-1, you need to specify your AWS_REGION.

You can customize the AWS Region location of your services by changing the environment variable AWS_REGION. Default is eu-central-1.
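The credential rules above can be checked before starting the application. This is a rough sketch under stated assumptions: the function name `missing_ses_settings` is hypothetical, and treating the string "True" (case-insensitive) as the enabled value is an assumption about how the variables are parsed, not something the documentation confirms.

```python
from typing import List, Mapping


def _is_true(value: str) -> bool:
    # ASSUMPTION: a flag counts as enabled when its value is "True" (any case).
    return value.strip().lower() == "true"


def missing_ses_settings(env: Mapping[str, str]) -> List[str]:
    """Return the credential variables still missing for Amazon SES.

    Follows the rules described above: nothing is needed when AWS_SES is
    off or when IAM credentials are used (AWS_IAM_ACCESS); otherwise both
    AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY must be set.
    """
    if not _is_true(env.get("AWS_SES", "")):
        return []
    if _is_true(env.get("AWS_IAM_ACCESS", "")):
        return []
    required = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY")
    return [name for name in required if not env.get(name)]
```

Passing `os.environ` (or the parsed contents of the env file) gives a quick pre-flight check; an empty list means the SES-related credentials look complete.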

GreedyBear is created with the aim to collect the information from the TPOTs and generate some actionable feeds, so that they can be easily accessible and act as valuable information to prevent and detect attacks.

+The feeds are reachable through the following URL:

The available feed_type are:

- log4j: attacks detected from the Log4pot.
- cowrie: attacks detected from the Cowrie Honeypot.
- all: get all types at once
- heralding
- ciscoasa
- honeytrap
- dionaea
- conpot
- adbhoney
- tanner
- citrixhoneypot
- mailoney
- ipphoney
- ddospot
- elasticpot
- dicompot
- redishoneypot
- sentrypeer
- glutton

The available attack_type are:

- scanner: IP addresses captured by the honeypots while performing attacks
- payload_request: IP addresses and domains extracted from payloads that would have been executed after a specific attack had been successful
- all: get all types at once

The available age are:

- recent: most recent IOCs seen in the last 3 days
- persistent: these IOCs are the ones that were seen regularly by the honeypots. These feeds start empty when no prior data has been collected and grow over time.

The available formats are:

- txt: plain text (just one line for each IOC)
- csv: CSV-like file (just one line for each IOC)
- json: JSON file with additional information regarding the IOCs

Check the API specification to get all the details about how to use the available APIs.
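The parameter values listed above can be validated on the client side before a request is made. The sketch below is illustrative only: `build_feed_path` is a hypothetical helper, and the path template is an assumption (the URL pattern is not shown in this section), so check it against the API specification before relying on it.

```python
FEED_TYPES = {
    "log4j", "cowrie", "all", "heralding", "ciscoasa", "honeytrap",
    "dionaea", "conpot", "adbhoney", "tanner", "citrixhoneypot",
    "mailoney", "ipphoney", "ddospot", "elasticpot", "dicompot",
    "redishoneypot", "sentrypeer", "glutton",
}
ATTACK_TYPES = {"scanner", "payload_request", "all"}
AGES = {"recent", "persistent"}
FORMATS = {"txt", "csv", "json"}

# ASSUMPTION: this path layout is a guess for illustration, not taken from the text above.
ASSUMED_PATH_TEMPLATE = "/api/feeds/{feed_type}/{attack_type}/{age}.{format_}"


def build_feed_path(feed_type: str, attack_type: str, age: str, format_: str) -> str:
    """Validate the four feed parameters and fill in the assumed path template."""
    checks = (
        ("feed_type", feed_type, FEED_TYPES),
        ("attack_type", attack_type, ATTACK_TYPES),
        ("age", age, AGES),
        ("format_", format_, FORMATS),
    )
    for name, value, allowed in checks:
        if value not in allowed:
            raise ValueError(f"invalid {name} {value!r}; expected one of {sorted(allowed)}")
    return ASSUMED_PATH_TEMPLATE.format(
        feed_type=feed_type, attack_type=attack_type, age=age, format_=format_
    )
```

The sets mirror the values listed above; a deployment with additional honeypots enabled may accept more feed types than this sketch allows.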

+GreedyBear provides an easy-to-query API to get the information available in GB regarding the queried observable (domain or IP address).

This "Enrichment" API is protected through authentication. Please reach out to Matteo Lodi or another member of The Honeynet Project if you are interested in gaining access to this API.

+If you would like to leverage this API without the need of writing even a line of code and together with a lot of other awesome tools, consider using IntelOwl.


+When you write or modify Python code in the codebase, it's important to add or update the docstrings accordingly. If you wish to display these docstrings in the documentation, follow these steps.

+Suppose the docstrings are located in the following path: docs/Submodules/IntelOwl/api_app/analyzers_manager/classes, and you want to show the description of a class, such as BaseAnalyzerMixin.

To include this in the documentation, use the following command:

Warning

Make sure both your path and your syntax are correct. If you face any issues even though the path is correct, read the Submodules Guide.

Bases: Plugin

Abstract Base class for Analyzers. Never inherit from this branch, always use either one of ObservableAnalyzer or FileAnalyzer classes.

docs/Submodules/IntelOwl/api_app/analyzers_manager/classes.py

analyzer_name: str (property)

Returns the name of the analyzer.

config_exception (classmethod, property)

Returns the AnalyzerConfigurationException class.

config_model (classmethod, property)

Returns the AnalyzerConfig model.

report_model (classmethod, property)

Returns the AnalyzerReport model.

after_run_success(content)

Handles actions after a successful run.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| content | any | The content to process after a successful run. | required |

docs/Submodules/IntelOwl/api_app/analyzers_manager/classes.py

get_exceptions_to_catch()

Returns additional exceptions to catch when running the start function.


+To set up and run the documentation site on your local machine, please follow the steps below:

+To create a virtual environment named venv in your project directory, use the following command:

Activate the virtual environment to ensure that all dependencies are installed locally within your project directory.

+On Linux/MacOS:

+ +On Windows:

+ +To install all the necessary Python packages listed in requirements.txt, run:

+ +Please run these commands to update and fetch the local Submodules.

+git submodule foreach --recursive 'git fetch --all'

+git submodule update --init --remote --recursive --depth 1

+git submodule sync --recursive

+git submodule update --remote --recursive

+Start a local development server to preview the documentation in your web browser. The server will automatically reload whenever you make changes to the documentation files.

+ +As you edit the documentation, you can view your changes in real-time through the local server. This step ensures everything looks as expected before deploying.

+Once you are satisfied with your changes, commit and push them to the GitHub repository. The documentation will be automatically deployed via GitHub Actions, making it live on the documentation site.


+This page includes details about some advanced features that Intel Owl provides which can be optionally configured by the administrator.

+Available for version > 6.1.0

+Right now only ElasticSearch v8 is supported.

In the env_file_app_template, you'll see various Elasticsearch-related environment variables. The user should spin up their own Elasticsearch instance and configure these variables.

- Configure ELASTIC_HOST with the URL of the external instance.
- Alternatively, with the --elastic option you can run a container-based Elasticsearch instance. In this case ELASTIC_HOST must be set to https://elasticsearch:9200. Also configure ELASTIC_PASSWORD.

Thanks to django-elasticsearch-dsl, Job results are indexed into Elasticsearch. The save and delete operations are auto-synced so you always have the latest data in ES.

With elasticsearch-py, the AnalyzerReport, ConnectorReport and PivotReport objects are indexed into Elasticsearch. This makes it possible to search data inside the report fields, and many others, via the UI. Each time IntelOwl is restarted the index template is updated, and every 5 minutes a task inserts the reports into Elasticsearch.

IntelOwl stores data that can be used for Business Intelligence purposes. Since plugin reports are deleted periodically, this feature lets you keep a small amount of data indefinitely to track how analyzers perform and how users use the platform. At the moment, the following information is sent to Elasticsearch:

Documents are saved in the ELASTICSEARCH_BI_INDEX-%YEAR-%MONTH index, which lets you manage retention accordingly.

To activate this feature, set ELASTICSEARCH_BI_ENABLED to True in the env_file_app and ELASTICSEARCH_BI_HOST to elasticsearch:9200 or your Elasticsearch server.

An index template is created after the first bulk submission of reports.
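Because the Business Intelligence documents land in one index per month, retention can be handled by index name. The sketch below illustrates the idea; the helper names and the example prefix `intelowl-bi` are hypothetical, and the exact rendering of %YEAR-%MONTH (four-digit year, zero-padded month) is an assumption that should be checked against the indices your instance actually creates.

```python
from datetime import date
from typing import Iterable, List


def bi_index_name(base_index: str, when: date) -> str:
    """Build the monthly index name for the configured BI index prefix."""
    # ASSUMPTION: four-digit year and zero-padded month, joined by hyphens.
    return f"{base_index}-{when:%Y}-{when:%m}"


def bi_index_month(index_name: str, base_index: str) -> date:
    """Parse a monthly index name back into the first day of its month."""
    prefix = base_index + "-"
    if not index_name.startswith(prefix):
        raise ValueError(f"{index_name!r} does not start with {prefix!r}")
    year, month = index_name[len(prefix):].split("-")
    return date(int(year), int(month), 1)


def indices_older_than(
    index_names: Iterable[str], base_index: str, cutoff: date
) -> List[str]:
    """Return the indices whose month lies entirely before the cutoff's month."""
    first_of_cutoff_month = cutoff.replace(day=1)
    return [
        name
        for name in index_names
        if bi_index_month(name, base_index) < first_of_cutoff_month
    ]
```

The list returned by `indices_older_than` could then be handed to whatever retention mechanism you use, for example an index lifecycle policy or a scheduled cleanup job.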

+IntelOwl provides support for some of the most common authentication methods:

+The first step is to create a Google Cloud Platform project, and then create OAuth credentials for it.

+It is important to add the correct callback in the "Authorized redirect URIs" section to allow the application to redirect properly after the successful login. Add this:

After that, specify the client ID and secret as the GOOGLE_CLIENT_ID and GOOGLE_CLIENT_SECRET environment variables and restart IntelOwl to apply the changes.

Note

While configuring Google OAuth2 you can choose either to enable access to all users with a Google Account ("External" mode) or to only the users of your organization ("Internal" mode). Reference

IntelOwl leverages Django-auth-ldap to perform authentication via LDAP.

How to configure and enable LDAP on Intel Owl?

- Edit configuration/ldap_config.py. This file is mounted as a docker volume, so you won't need to rebuild the image.

Note

For more details on how to configure this file, check the official documentation of the django-auth-ldap library.

- Set LDAP_ENABLED to True in the environment configuration file env_file_app. Finally, you can restart the application with docker-compose up.

IntelOwl leverages Django-radius to perform authentication via a RADIUS server.

How to configure and enable RADIUS authentication on Intel Owl?

- Edit configuration/radius_config.py. This file is mounted as a docker volume, so you won't need to rebuild the image.

Note

For more details on how to configure this file, check the official documentation of the django-radius library.

- Set RADIUS_AUTH_ENABLED to True in the environment configuration file env_file_app. Finally, you can restart the application with docker-compose up.

Like many other integrations that we have, we have an Analyzer and a Connector for the OpenCTI platform.

+This allows the users to leverage these 2 popular open source projects and frameworks together.

So why do we have a section here? Because there are various compatibility problems with the official PyCTI library.

We found out (see issues in IntelOwl and PyCTI) that, most of the time, the OpenCTI version of the server you are using and the pycti version installed in IntelOwl must match perfectly.

Because of that, we decided to give users the chance to customize the version of PyCTI installed in IntelOwl based on the OpenCTI version that they are using.

To do that, you need to leverage the option --pycti-version provided by the ./start helper:

- Check the --pycti-version option with ./start -h
- Build with ./start test build --pycti-version <your_version>
- Start with ./start test up -- --build

We have support for several AWS services.

You can customize the AWS Region location of your services by changing the environment variable AWS_REGION. Default is eu-central-1.

You have to add some credentials for AWS: if you have IntelOwl deployed on the AWS infrastructure, you can use IAM credentials:

+to allow that just set AWS_IAM_ACCESS to True. If that is not the case, you have to set both AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY

If you prefer to use S3 to store the analyzed samples, instead of the local storage, you can do it.

+First, you need to configure the environment variable LOCAL_STORAGE to False to enable it and set AWS_STORAGE_BUCKET_NAME to the AWS bucket you want to use.

Then you need to configure permission access to the chosen S3 bucket.

IntelOwl at the moment supports 3 different message brokers: Redis, RabbitMQ, and AWS SQS.

+The default broker, if nothing is specified, is Redis.

To use RabbitMQ, you must use the option --rabbitmq when launching IntelOwl with the ./start script.

To use AWS SQS, you must use the option --sqs when launching IntelOwl with the ./start script.

+In that case, you should create new FIFO SQS queues in AWS called intelowl-<environment>-<queue_name>.fifo and give your instances on AWS the proper permissions to access it.

+Moreover, you must populate the AWS_USER_NUMBER. This is required to connect in the right way to the selected SQS queues.

+Only FIFO queues are supported.
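The naming scheme for the SQS queues can be generated and sanity-checked in a few lines. This is a minimal sketch: `sqs_queue_name` is a hypothetical helper, and the example values `production` and `default` are placeholders rather than names confirmed by this page. The character and length checks reflect the general AWS rules for FIFO queue names (letters, digits, hyphens and underscores, at most 80 characters including the `.fifo` suffix).

```python
import re

# Up to 75 name characters plus the 5-character ".fifo" suffix (80 in total).
_FIFO_NAME = re.compile(r"[A-Za-z0-9_-]{1,75}\.fifo")


def sqs_queue_name(environment: str, queue_name: str) -> str:
    """Build intelowl-<environment>-<queue_name>.fifo and validate it."""
    name = f"intelowl-{environment}-{queue_name}.fifo"
    if not _FIFO_NAME.fullmatch(name):
        raise ValueError(f"{name!r} is not a valid FIFO queue name")
    return name
```

Calling `sqs_queue_name("production", "default")` yields `intelowl-production-default.fifo`; names containing spaces or exceeding the length limit raise a `ValueError` before any AWS call is attempted.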

If you want to use a remote message broker (like an ElastiCache or AmazonMQ instance), you must populate the BROKER_URL environment variable.

It is possible to use task priority inside IntelOwl: each User has a default priority of 10, and robot users (like the Ingestors) have a priority of 7.

+You can customize these priorities inside Django Admin, in the Authentication.User Profiles section.

Redis is used for two different functions:

For this reason, a Redis instance is mandatory.

You can personalize IntelOwl in two different ways:

- With a local Redis instance. This is the default behaviour.
- With an external Redis instance. You must use the option --use-external-redis when launching IntelOwl with the ./start script. Moreover, you need to populate the WEBSOCKETS_URL environment variable. If you are using Redis as a message broker too, remember to populate the BROKER_URL environment variable.

If you like, you could use AWS RDS instead of PostgreSQL for your database. In that case, you should change the required database options accordingly (DB_HOST, DB_PORT, DB_USER, DB_PASSWORD) and set up your machine to access the service.

If you have IntelOwl deployed on the AWS infrastructure, you can use IAM credentials to access the Postgres DB.

To allow that, just set AWS_RDS_IAM_ROLE to True. In this case DB_PASSWORD is no longer required.

Moreover, to avoid running PostgreSQL locally, you would need to use the option --use-external-database when launching IntelOwl with the ./start script.

If you like, you could use Amazon SES for sending automated emails (password resets / registration requests, etc).

+You need to configure the environment variable AWS_SES to True to enable it.

You can use the "Secrets Manager" to store your credentials. In this way your secrets would be better protected.

Instead of adding the variables to the environment file, you should just add them with the same name on the AWS Secrets Manager and IntelOwl will fetch them transparently.

Obviously, you should have created and managed the permissions in AWS in advance, according to your infrastructure requirements.

Also, you need to set the environment variable AWS_SECRETS to True to enable this mode.

You can use a Network File System for the shared_files that are downloaded at runtime by IntelOwl (for example Yara rules).

To use this feature, you would need to add the address of the remote file system inside the .env file, and you would need to use the option --nfs when launching IntelOwl with the ./start script.

Right now there is no official support for Kubernetes deployments.

+But we have an active community. Please refer to the following blog post for an example on how to deploy IntelOwl on Google Kubernetes Engine:

+Deploying Intel-Owl on GKE by Mayank Malik.

+IntelOwl provides an additional multi-queue.override.yml compose file allowing IntelOwl users to better scale with the performance of their own architecture.

+If you want to leverage it, you should add the option --multi-queue when starting the project. Example:

This functionality is not enabled by default because this deployment would start 2 more containers, so the resource consumption is higher. We suggest using this option only when leveraging IntelOwl massively.

It is possible to define new celery workers: each requires the addition of a new container in the docker-compose file, as shown in the multi-queue.override.yml.

Moreover, IntelOwl requires that the names of the workers be provided in the docker-compose file. This is done through the environment variable CELERY_QUEUES inside the uwsgi container. The queues must be separated by a comma (,), as shown in the example.
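To make the comma-separated format concrete, here is a minimal sketch of how such a value can be split into queue names. The helper `parse_celery_queues` and the queue names in the fallback value are hypothetical and are not taken from IntelOwl's code; only the CELERY_QUEUES variable name and the comma separator come from this page.

```python
import os


def parse_celery_queues(raw: str) -> list[str]:
    """Split a comma-separated queue list, dropping blanks and surrounding spaces."""
    return [name.strip() for name in raw.split(",") if name.strip()]


# Hypothetical fallback value; the real queue names come from your compose file.
raw_value = os.environ.get("CELERY_QUEUES", "default,local,long")
queues = parse_celery_queues(raw_value)
print(queues)
```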

You can customize which analyzer uses which queue by specifying it in the analyzer entry of the analyzer_config.json configuration file. If no queue is provided, the default queue will be selected.

IntelOwl provides an additional flower.override.yml compose file, allowing IntelOwl users to use Flower features to monitor and manage queues and tasks.

+If you want to leverage it, you should add the option --flower when starting the project. Example:

The flower interface is available at port 5555: to set the credentials for its access, update the environment variables

or change the .htpasswd file that is created in the docker directory in the intelowl_flower container.

The ./start script essentially acts as a wrapper over Docker Compose, performing additional checks.

+IntelOwl can still be started by using the standard docker compose command, but all the dependencies have to be manually installed by the user.

The --project-directory and -p options are required to run the project.

+Default values set by ./start script are "docker" and "intel_owl", respectively.

The startup is based on chaining various Docker Compose YAML files using -f option.

+All Docker Compose files are stored in docker/ directory of the project.

+The default compose file, named default.yml, requires configuration for an external database and message broker.

+In their absence, the postgres.override.yml and rabbitmq.override.yml files should be chained to the default one.

The command composed, considering what is said above (using sudo), is

sudo docker compose --project-directory docker -f docker/default.yml -f docker/postgres.override.yml -f docker/rabbitmq.override.yml -p intel_owl up

+The other most common compose file that can be used is for the testing environment.

+The equivalent of running ./start test up is adding the test.override.yml file, resulting in:

sudo docker compose --project-directory docker -f docker/default.yml -f docker/postgres.override.yml -f docker/rabbitmq.override.yml -f docker/test.override.yml -p intel_owl up

All other options available in the ./start script (./start -h to view them) essentially chain other compose files to the docker compose command, with corresponding filenames.
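The chaining described above can be sketched as a small helper that assembles the same argument list. This is only an illustration of the pattern, not the logic of the ./start script itself; the function name `compose_command` is hypothetical, while the file names and default values are the ones quoted on this page.

```python
def compose_command(overrides, project_directory="docker",
                    project_name="intel_owl", action="up"):
    """Chain default.yml with the given *.override.yml files using -f options."""
    command = [
        "docker", "compose",
        "--project-directory", project_directory,
        "-f", f"{project_directory}/default.yml",
    ]
    for name in overrides:
        # Each override is appended after the default file, in the order given.
        command += ["-f", f"{project_directory}/{name}.override.yml"]
    command += ["-p", project_name, action]
    return command


# Reproduces the testing-environment command shown above (without sudo).
print(" ".join(compose_command(["postgres", "rabbitmq", "test"])))
```

Returning a list rather than a string makes it straightforward to pass the result to `subprocess.run` without shell quoting issues.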

IntelOwl includes integrations with some analyzers that are not enabled by default.

These analyzers, stored under the integrations/ directory, are packed within Docker Compose files.

The compose.yml file has to be chained to include the analyzer.

The additional compose-test.yml file has to be chained for the testing environment.


+This page includes details about some advanced features that Intel Owl provides which can be optionally enabled. Namely,

Starting from IntelOwl v4, a new "Organization" section is available on the GUI. This section replaces the previous permission management via Django Admin and aims to provide an easier way to manage users and visibility.

Thanks to the "Organization" feature, IntelOwl can easily be used by multiple SOCs, companies, etc. Right now it works very simply: only users in the same organization can see each other's analyses. A user can belong to only one organization.

You can create a new organization by going to the "Organization" section, available in the dropdown menu under the username.

Once you create an organization, you are the unique "Owner" of that organization, so you are the only one who can delete the organization and promote, demote or kick users. Another role, called "Admin", can be assigned to a user (via the Django Admin interface only, for now). Owners and admins share the following powers: they can manage invitations and the organization's plugin configuration.

Once an invite has been sent, the invited user has to log in, go to the "Organization" section and accept the invite there. Afterwards the Administrator will be able to see the user in their "Organization" section.

From IntelOwl v4.1.0, Plugin Parameters and Secrets can be defined at the organization level, in the dedicated section. This makes it possible to share configurations between users of the same org while allowing complete multi-tenancy of the application. Only Owners and Admins of the organization can set, change and delete them.

The org admin can disable a specific plugin for all the users in a specific org. To do that, Org Admins need to go to the "Plugins" section and click the button "Enabled for organization" of the plugin that they want to disable.

Since IntelOwl v4.2.0, a Registration Page is available to manage registration requests when providing IntelOwl as a Service.

After a user registers, an email is sent to the user to verify their email address. If necessary, there are buttons on the login page to resend the verification email and to reset the password.

Once the user has verified their email, they are manually vetted before being allowed to use the IntelOwl platform. Registration requests are handled by admins in the Django Admin page. If you have IntelOwl deployed on an AWS instance with an IAM role, you can use the SES service.

For the "Registration" page to work correctly, you must configure some variables before starting IntelOwl. See Optional Environment Configuration.

In a development environment, the emails that would be sent are written to the standard output.

Some analyzers which run in their own Docker containers are kept disabled by default, to prevent accidentally starting too many containers and making your computer unresponsive.

| Name | Analyzers | Description |
|---|---|---|
| Malware Tools Analyzers | | |
| TOR Analyzers | Onionscan | Scans TOR .onion domains for privacy leaks and information disclosures. |
| CyberChef | CyberChef | Run a transformation on a CyberChef server using pre-defined or custom recipes (rules that describe how the input has to be transformed). Check further instructions here |
| PCAP Analyzers | Suricata | You can upload a PCAP to have it analyzed by Suricata with the open Ruleset. The result will provide a list of the triggered signatures plus a more detailed report with all the raw data generated by Suricata. You can also add your own rules (see paragraph "Analyzers with special configuration"). The installation is optimized for scaling, so the execution time is really fast. |
| PhoneInfoga | PhoneInfoga_scan | PhoneInfoga is one of the most advanced tools to scan international phone numbers. It allows you to first gather basic information such as country, area, carrier and line type, then use various techniques to try to find the VoIP provider or identify the owner. It works with a collection of scanners that must be configured in order for the tool to be effective. PhoneInfoga doesn't automate everything, it's just there to help investigate phone numbers. |
| Phishing Analyzers | | This framework tries to render a potential phishing page and extract useful information from it. Also, if the page contains a form, it tries to submit the form using fake data. The goal is to extract IOCs and check whether the page is real phishing or not. |

To enable all the optional analyzers you can add the option --all_analyzers when starting the project. Example:

Otherwise you can enable just one of the cited integrations by using the related option. Example:

Some analyzers provide the chance to customize the performed analysis based on parameters that are different for each analyzer.

You can click on the "Runtime Configuration" button in the "Scan" page and add the runtime configuration in the form of a dictionary.

Example:

While using send_observable_analysis_request or send_file_analysis_request endpoints, you can pass the parameter runtime_configuration with the optional values.

+Example:

runtime_configuration = {
    "Doc_Info": {
        "additional_passwords_to_check": ["passwd", "2020"]
    }
}
pyintelowl_client.send_file_analysis_request(..., runtime_configuration=runtime_configuration)

+PhoneInfoga provides several Scanners to extract as much information as possible from a given phone number. Those scanners may require authentication, so they are automatically skipped when no authentication credentials are found.

+By default the scanner used is local.

+Go through this guide to initiate other required API keys related to this analyzer.

You can either use pre-defined recipes or create your own as explained here.

To use a pre-defined recipe, set the predefined_recipe_name argument to the name of the recipe as defined here. Else, leave the predefined_recipe_name argument empty and set the custom_recipe argument to the contents of the recipe you want to use.

Additionally, you can also (optionally) set the output_type argument.
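The choice between the two arguments can be sketched as a small helper that builds a runtime configuration dictionary. This is a sketch under assumptions: the helper `cyberchef_runtime_configuration` is hypothetical, the analyzer key "CyberChef" is taken from the analyzer name in the table of optional analyzers, the recipe name "to decimal" is a placeholder, and the "exactly one of the two" check is this example's own reading of the text above, not documented behaviour.

```python
def cyberchef_runtime_configuration(predefined_recipe_name="", custom_recipe=None,
                                    output_type=None):
    """Build a runtime configuration using either a pre-defined or a custom recipe."""
    # Assumption of this sketch: exactly one of the two recipe arguments is set.
    if bool(predefined_recipe_name) == bool(custom_recipe):
        raise ValueError("set exactly one of predefined_recipe_name or custom_recipe")
    params = {"predefined_recipe_name": predefined_recipe_name}
    if custom_recipe:
        params["custom_recipe"] = custom_recipe
    if output_type is not None:
        params["output_type"] = output_type
    # "CyberChef" is assumed to be the analyzer name used as the dictionary key.
    return {"CyberChef": params}


# Placeholder recipe name, for illustration only.
print(cyberchef_runtime_configuration(predefined_recipe_name="to decimal"))
```

The resulting dictionary has the same shape as the runtime_configuration example shown earlier for Doc_Info.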

[{"op": "To Decimal", "args": ["Space", False]}]

The framework aims to be extendable and provides two different playbooks connected through a pivot.

The first playbook, named PhishingExtractor, is in charge of extracting useful information from the web page rendered with a Selenium-based browser.

The second playbook is called PhishingAnalysis and its main purposes are to extract useful insights on the page itself and to try to submit forms with fake data to extract other IOCs.

XPath syntax is used to find elements in the page. These selectors are customizable via the plugin's config page.

The parameter xpath_form_selector controls how the form is retrieved from the page and xpath_js_selector is used to search for JavaScript inside the page.

A mapping is used to fill in the page with fake data. This is needed because most input tags of type "text" do not have a specific role in the page, so there must be some degree of approximation.

This behaviour is controlled through the *-mapping parameters. Each one is a list that must contain the names of the input tags to fill in with fake data.
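To illustrate the idea of mapping input names to fake data, here is a minimal sketch. It is not the analyzer's implementation: the helper `fill_inputs`, the role names, the lists of input names and the fake values are all hypothetical.

```python
def fill_inputs(input_names, mappings, fake_values, fallback="test"):
    """Assign fake data to each input name using lists of known names per role."""
    filled = {}
    for name in input_names:
        # Find the first role whose list contains this input name, if any.
        role = next(
            (role for role, known in mappings.items() if name.lower() in known),
            None,
        )
        filled[name] = fake_values[role] if role else fallback
    return filled


# Hypothetical mappings and fake values, for illustration only.
mappings = {"username": ["user", "login", "email"], "password": ["pass", "password"]}
fake_values = {"username": "fakeuser@example.com", "password": "FakePassword123!"}
print(fill_inputs(["login", "pass", "q"], mappings, fake_values))
```

Inputs whose names are not listed in any mapping receive a generic fallback value, which is where the approximation mentioned above comes in.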

Here is an example of what a phishing investigation looks like when started from the PhishingExtractor playbook:

+

Some analyzers could require a special configuration:

- GoogleWebRisk: this analyzer needs a service account key with the Google Cloud credentials to work properly. You should follow the official guide for creating the key. Then you can populate the secret service_account_json for that analyzer with the JSON of the service account file.
- ClamAV: this Docker-based analyzer uses the clamd daemon as its scanner and communicates with the clamdscan utility to scan files. The daemon requires 2 different configuration files: clamd.conf (the daemon's config) and freshclam.conf (the virus database updater's config). These files are mounted as docker volumes in /integrations/malware_tools_analyzers/clamav and hence can be edited by the user as needed, without restarting the application. Moreover, ClamAV is integrated with unofficial open source signatures extracted with Fangfrisch. The configuration file fangfrisch.conf is mounted in the same directory and can be customized as you wish. For instance, you should change it if you want to integrate open source signatures from SecuriteInfo.
- Suricata: you can customize the behavior of Suricata:
  - /integrations/pcap_analyzers/config/suricata/rules: this directory holds the Suricata rules. You can change the custom.rules file to add your own rules at any time. Once you have made this change, you need to either restart IntelOwl or (this is faster) run a new analysis with the Suricata analyzer and set the parameter reload_rules to true.
  - /integrations/pcap_analyzers/config/suricata/etc: this directory holds the Suricata configuration files. Change them as you wish. Restart IntelOwl to see the changes applied.
- Yara:
  - You can customize the repositories parameter and the private_repositories secret to download and use rules different from the defaults that IntelOwl currently supports.
  - The repositories value is what will actually be used to run the analysis: if you have added private repositories, remember to add their URLs to repositories too!
  - You can add local rules in /opt/deploy/files_required/yara/YOUR_USERNAME/custom_rules/. Please remember that these rules are not synced in a cluster deployment: for this reason it is advised to upload them to GitHub and use the repositories or private_repositories attributes.

Since v4, IntelOwl has integrated the notification system from the certego_saas package, allowing admins to create notifications that every user will be able to see.

The user would find the Notifications button on the top right of the page:

+

There the user can read notifications provided by either the administrators or the IntelOwl Maintainers.

As an Admin, if you want to send a notification to all the users, you have to log in to the Django Admin interface, go to the "Notifications" section and add it there.

While adding a new notification, you can use HTML syntax in the body section, which makes it possible to embed images, links, etc. In the app_name field, please remember to use intelowl as the app name.

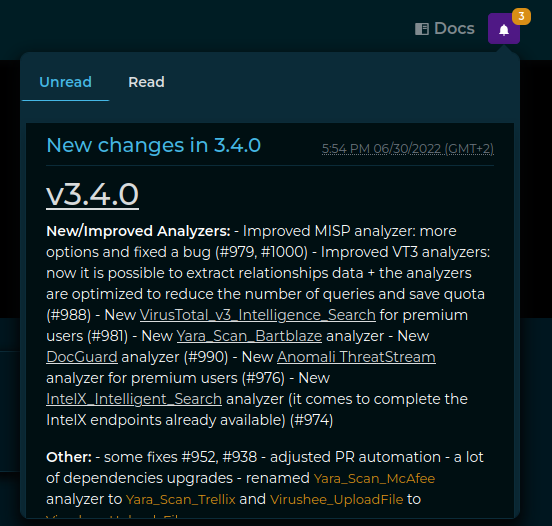

Every time a new release is installed, once the backend goes up it automatically creates a new notification containing the latest changes described in the CHANGELOG.md, allowing users to keep track of the changes inside IntelOwl itself.


ask_analysis_availability

API endpoint to check for existing analysis based on an MD5 hash.

This endpoint helps avoid redundant analysis by checking if there is already an analysis in progress or completed with status "running" or "reported_without_fails" for the provided MD5 hash. The analyzers that need to be executed should be specified to ensure expected results.

Deprecated: This endpoint will be deprecated after 01-07-2023.

Parameters:
- request (POST): Contains the MD5 hash and analyzer details.

Returns:
- 200: JSON response with the analysis status, job ID, and analyzers to be executed.

Source code in docs/Submodules/IntelOwl/api_app/views.py

ask_multi_analysis_availability

API endpoint to check for existing analysis for multiple MD5 hashes.

Similar to ask_analysis_availability, this endpoint checks for existing analysis for multiple MD5 hashes. It prevents redundant analysis by verifying if there are any jobs in progress or completed with status "running" or "reported_without_fails". The analyzers required should be specified to ensure accurate results.

Parameters:
- request (POST): Contains multiple MD5 hashes and analyzer details.

Returns:
- 200: JSON response with the analysis status, job IDs, and analyzers to be executed for each MD5 hash.

analyze_file

API endpoint to start an analysis job for a single file.

This endpoint initiates an analysis job for a single file and sends it to the specified analyzers. The file-related information and analyzers should be provided in the request data.

Parameters:
- request (POST): Contains file data and analyzer details.

Returns:
- 200: JSON response with the job details after initiating the analysis.

analyze_multiple_files

API endpoint to start analysis jobs for multiple files.

This endpoint initiates analysis jobs for multiple files and sends them to the specified analyzers. The file-related information and analyzers should be provided in the request data.

Parameters:
- request (POST): Contains multiple file data and analyzer details.

Returns:
- 200: JSON response with the job details for each initiated analysis.

analyze_observable

API endpoint to start an analysis job for a single observable.

This endpoint initiates an analysis job for a single observable (e.g., domain, IP, URL, etc.) and sends it to the specified analyzers. The observable-related information and analyzers should be provided in the request data.

Parameters:
- request (POST): Contains observable data and analyzer details.

Returns:
- 200: JSON response with the job details after initiating the analysis.

analyze_multiple_observables

API endpoint to start analysis jobs for multiple observables.

This endpoint initiates analysis jobs for multiple observables (e.g., domain, IP, URL, etc.) and sends them to the specified analyzers. The observables and analyzer details should be provided in the request data.

Parameters:
- request (POST): Contains multiple observable data and analyzer details.

Returns:
- 200: JSON response with the job details for each initiated analysis.

CommentViewSet

Bases: ModelViewSet

CommentViewSet provides the following actions:

Permissions:
- IsAuthenticated: Requires authentication for all actions.
- IsObjectUserPermission: Allows only the comment owner to update or delete the comment.
- IsObjectUserOrSameOrgPermission: Allows the comment owner or anyone in the same organization to retrieve the comment.

Queryset:
- Filters comments to include only those associated with jobs visible to the authenticated user.


get_permissions()

Customizes permissions based on the action being performed.

- For the destroy, update, and partial_update actions, adds IsObjectUserPermission to ensure that only the comment owner can perform these actions.
- For the retrieve action, adds IsObjectUserOrSameOrgPermission to allow the comment owner or anyone in the same organization to retrieve the comment.

Returns:
- List of applicable permissions.

get_queryset()

Filters the queryset to include only comments related to jobs visible to the authenticated user.

Returns:
- Filtered queryset of comments.

JobViewSet

Bases: ReadAndDeleteOnlyViewSet, SerializerActionMixin

JobViewSet provides the following actions:

Permissions:
- IsAuthenticated: Requires authentication for all actions.
- IsObjectUserOrSameOrgPermission: Allows job deletion or killing only by the job owner or anyone in the same organization.

Queryset:
- Prefetches related tags and orders jobs by request time, filtered to include only jobs visible to the authenticated user.


__aggregation_response_dynamic(field_name, group_by_date=True, limit=5, users=None)

Dynamically aggregate Job objects based on a specified field and time range.

This method identifies the most frequent values of a given field within a specified time range and aggregates the Job objects accordingly. Optionally, it can group the results by date and limit the number of most frequent values.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| field_name | str | The name of the field to aggregate by. | required |
| group_by_date | bool | Whether to group the results by date. Defaults to True. | True |
| limit | int | The maximum number of most frequent values to retrieve. Defaults to 5. | 5 |
| users | list | A list of users to filter the Job objects by. | None |

Returns:

| Name | Type | Description |
|---|---|---|
| Response | Response | A Django REST framework Response object containing the most frequent values and the aggregated data. |
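The behaviour described above (pick the most frequent values of a field, then optionally group their counts by date) can be sketched in plain Python. This is a stand-in to illustrate the idea, not the Django ORM query used by the viewset: the function `aggregate_most_frequent`, the job dictionaries and the shape of the returned data are all hypothetical.

```python
from collections import Counter, defaultdict


def aggregate_most_frequent(jobs, field_name, group_by_date=True, limit=5):
    """Count the most frequent values of a field, optionally grouped by date."""
    counts = Counter(job[field_name] for job in jobs)
    most_frequent = [value for value, _ in counts.most_common(limit)]
    if not group_by_date:
        aggregation = {value: counts[value] for value in most_frequent}
        return {"values": most_frequent, "aggregation": aggregation}
    per_date = defaultdict(Counter)
    for job in jobs:
        # Only the most frequent values are kept in the per-date breakdown.
        if job[field_name] in most_frequent:
            per_date[job["date"]][job[field_name]] += 1
    aggregation = {date: dict(counter) for date, counter in sorted(per_date.items())}
    return {"values": most_frequent, "aggregation": aggregation}


# Hypothetical jobs, for illustration only.
jobs = [
    {"date": "2024-01-01", "md5": "a"},
    {"date": "2024-01-01", "md5": "a"},
    {"date": "2024-01-02", "md5": "b"},
    {"date": "2024-01-02", "md5": "a"},
    {"date": "2024-01-02", "md5": "c"},
]
print(aggregate_most_frequent(jobs, "md5", limit=2))
```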


__aggregation_response_static(annotations, users=None)

Generate a static aggregation of Job objects filtered by a time range.

This method applies the provided annotations to aggregate Job objects within the specified time range. Optionally, it filters the results by the given list of users.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| annotations | dict | Annotations to apply for the aggregation. | required |
| users | list | A list of users to filter the Job objects by. | None |

Returns:

| Name | Type | Description |
|---|---|---|
| Response | Response | A Django REST framework Response object containing the aggregated data. |

__parse_range(request)

staticmethod

Parse the time range from the request query parameters.

This method attempts to extract the 'range' query parameter from the request. If the parameter is not provided, it defaults to '7d' (7 days).

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| request | | The HTTP request object containing query parameters. | required |

Returns:

| Name | Type | Description |
|---|---|---|
| tuple | | A tuple containing the parsed time delta and the basis for date truncation. |
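As a rough illustration of this kind of parsing, the sketch below turns a range string into a time delta and a truncation basis. It is not the actual implementation: the function name `parse_range`, the accepted suffixes (d, h, m) and the basis names are assumptions made for this example; only the 'range' parameter and its '7d' default come from the description above.

```python
import re
from datetime import timedelta

# Assumed suffixes and truncation bases; the real ones may differ.
_UNITS = {"d": ("days", "day"), "h": ("hours", "hour"), "m": ("minutes", "minute")}


def parse_range(query_params):
    """Return (timedelta, truncation basis) from a 'range' value such as '7d'."""
    raw = query_params.get("range", "7d")
    match = re.fullmatch(r"(\d+)([dhm])", raw)
    if match is None:
        raise ValueError(f"unsupported range: {raw!r}")
    amount, unit = int(match.group(1)), match.group(2)
    keyword, basis = _UNITS[unit]
    return timedelta(**{keyword: amount}), basis


print(parse_range({}))
print(parse_range({"range": "12h"}))
```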

aggregate_file_mimetype(request)

Aggregate jobs by file MIME type.

Returns:
- Aggregated count of jobs for each MIME type.

aggregate_md5(request)

Aggregate jobs by MD5 hash.

Returns:
- Aggregated count of jobs for each MD5 hash.

aggregate_observable_classification(request)

Aggregate jobs by observable classification.

Returns:
- Aggregated count of jobs for each observable classification.

aggregate_observable_name(request)

Aggregate jobs by observable name.

Returns:
- Aggregated count of jobs for each observable name.

aggregate_status(request)

Aggregate jobs by their status.

Returns:
- Aggregated count of jobs for each status.

aggregate_type(request)

Aggregate jobs by type (file or observable).

Returns:
- Aggregated count of jobs for each type.

download_sample(request, pk=None)

Download a sample associated with a job.

If the job does not have a sample, raises a validation error.

Returns:
- The file associated with the job as an attachment.

:param url: pk (job_id)
:returns: bytes

get_org_members(request)

staticmethod

Retrieve members of the organization associated with the authenticated user.

If the 'org' query parameter is set to 'true', this method returns all users who are members of the authenticated user's organization.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| request | | The HTTP request object containing user information and query parameters. | required |

Returns:

| Type | Description |
|---|---|
| list or None | A list of users who are members of the user's organization if the 'org' query parameter is 'true', otherwise None. |

get_permissions()

Customizes permissions based on the action being performed.

- For the destroy and kill actions, adds IsObjectUserOrSameOrgPermission to ensure that only the job owner or anyone in the same organization can perform these actions.

Returns:
- List of applicable permissions.

get_queryset()

Filters the queryset to include only jobs visible to the authenticated user, ordered by request time. Logs the request parameters and returns the filtered queryset.

Returns:
- Filtered queryset of jobs.

kill(request, pk=None)

Kill a running job by closing celery tasks and marking the job as killed.

If the job is not running, raises a validation error.

Returns:
- No content (204) if the job is successfully killed.

pivot(request, pk=None, pivot_config_pk=None)

Perform a pivot operation from a job's reports based on a specified pivot configuration.

Expects the following parameters:
- pivot_config_pk: The primary key of the pivot configuration to use.

Returns:
- List of job IDs created as a result of the pivot.

recent_scans(request)

Retrieve recent jobs based on an MD5 hash, filtered by a maximum temporal distance.

Expects the following parameters in the request data:
- md5: The MD5 hash to filter jobs by.
- max_temporal_distance: The maximum number of days to look back for recent jobs (default is 14 days).

Returns:
- List of recent jobs matching the MD5 hash.
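A minimal sketch of how the request data for this endpoint could be prepared from a sample's bytes is shown below. The helper `recent_scans_payload` is hypothetical, and the sketch only builds the dictionary; how it is sent (URL, authentication, client library) is outside its scope. The two field names are the ones listed above.

```python
import hashlib


def recent_scans_payload(sample: bytes, max_temporal_distance: int = 14) -> dict:
    """Build request data with the sample's MD5 hash and the look-back window in days."""
    return {
        "md5": hashlib.md5(sample).hexdigest(),
        "max_temporal_distance": max_temporal_distance,
    }


# Placeholder content standing in for a real sample.
payload = recent_scans_payload(b"example sample content", max_temporal_distance=7)
print(payload)
```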

recent_scans_user(request)

Retrieve recent jobs for the authenticated user, filtered by sample status.

Expects the following parameters in the request data:
- is_sample: Whether to filter jobs by sample status (required).
- limit: The maximum number of recent jobs to return (default is 5).

Returns:
- List of recent jobs for the user.

retry(request, pk=None)

Retry a job if its status is in a final state.

If the job is currently running, raises a validation error.

Returns:
- No content (204) if the job is successfully retried.

TagViewSet

Bases: ModelViewSet

A viewset that provides CRUD (Create, Read, Update, Delete) operations for the Tag model.

This viewset leverages Django REST framework's ModelViewSet to handle requests for the Tag model. It includes the default implementations for list, retrieve, create, update, partial_update, and destroy actions.

Attributes:

| Name | Type | Description |
|---|---|---|
| queryset | QuerySet | The queryset that retrieves all Tag objects from the database. |
| serializer_class | Serializer | The serializer class used to convert Tag model instances to JSON and vice versa. |
| pagination_class | | Pagination is disabled for this viewset. |

docs/Submodules/IntelOwl/api_app/views.py

ModelWithOwnershipViewSet

Bases: ModelViewSet

A viewset that enforces ownership-based access control for models.

This class extends the functionality of ModelViewSet to restrict access to objects based on ownership. It modifies the queryset for the list action to only include objects visible to the requesting user, and adds custom permission checks for destroy and update actions.

Methods:

| Name | Description |
|---|---|
| get_queryset | Returns the queryset of the model, filtered for visibility to the requesting user during the list action. |
| get_permissions | Returns the permissions required for the current action, with additional checks for ownership during destroy and update actions. |

docs/Submodules/IntelOwl/api_app/views.py

get_permissions()

Retrieves the permissions required for the current action.

For the destroy and update actions, additional checks are performed to ensure that only object owners or admins can perform these actions. Raises a PermissionDenied exception for PUT requests.

Returns:

| Name | Type | Description |
|---|---|---|
| list | | A list of permission instances. |
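The decision logic described for get_permissions() can be sketched in plain Python. This is a framework-free illustration under stated assumptions, not the code in views.py: `permissions_for`, the local `PermissionDenied` class, and the permission name `"IsOwnerOrAdmin"` are hypothetical, and the sketch assumes the PUT check applies only inside the destroy/update branch.

```python
class PermissionDenied(Exception):
    """Local stand-in for the framework's PermissionDenied exception."""


def permissions_for(action, method, base_permissions):
    """Return the permission names that apply to a viewset action.

    `action` is the viewset action name, `method` the HTTP verb, and
    `base_permissions` the permissions every action already requires.
    """
    permissions = list(base_permissions)
    if action in ("destroy", "update"):
        # Assumption: full replacement via PUT is rejected outright.
        if method == "PUT":
            raise PermissionDenied("PUT requests are not allowed")
        # Hypothetical name for the owner-or-admin check.
        permissions.append("IsOwnerOrAdmin")
    return permissions


print(permissions_for("list", "GET", ["IsAuthenticated"]))
print(permissions_for("destroy", "DELETE", ["IsAuthenticated"]))
```

Read-only actions keep the base permissions unchanged, while destroy and update gain the extra ownership check.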

docs/Submodules/IntelOwl/api_app/views.py

get_queryset()

Retrieves the queryset for the viewset, modifying it for the list action to only include objects visible to the requesting user.

Returns:

| Name | Type | Description |
|---|---|---|
| QuerySet | | The queryset of the model, possibly filtered for visibility. |

docs/Submodules/IntelOwl/api_app/views.py

PluginConfigViewSet

Bases: ModelWithOwnershipViewSet

A viewset for managing PluginConfig objects with ownership-based access control.

This viewset extends ModelWithOwnershipViewSet to handle PluginConfig objects, allowing users to list, retrieve, and delete configurations while ensuring that only authorized configurations are accessible. It customizes the queryset to exclude default values and orders the configurations by ID.

Attributes:

| Name | Type | Description |
|---|---|---|
| serializer_class | class | The serializer class used for PluginConfig objects. |
| pagination_class | class | Specifies that pagination is not applied. |
| queryset | QuerySet | The queryset for PluginConfig objects. |

Methods:

| Name | Description |
|---|---|
| get_queryset | Returns the queryset for PluginConfig objects, excluding default values and ordered by ID. |

docs/Submodules/IntelOwl/api_app/views.py

get_queryset()

Retrieves the queryset for PluginConfig objects, excluding those with default values (where the owner is NULL) and ordering the remaining objects by ID.

Returns:

| Name | Type | Description |
|---|---|---|
| QuerySet | | The filtered and ordered queryset of PluginConfig objects. |
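The effect of this queryset customization can be shown on plain data. The sketch below is an illustration only and does not use the Django ORM: `visible_plugin_configs` and the dictionary keys `id`, `owner`, and `value` are hypothetical names, with `None` standing in for a NULL owner.

```python
def visible_plugin_configs(configs):
    """Drop default configs (owner is None) and order the rest by ID."""
    non_default = [config for config in configs if config["owner"] is not None]
    return sorted(non_default, key=lambda config: config["id"])


CONFIGS = [
    {"id": 3, "owner": "alice", "value": "x"},
    {"id": 1, "owner": None, "value": "default"},  # default value, excluded
    {"id": 2, "owner": "bob", "value": "y"},
]

ordered_ids = [config["id"] for config in visible_plugin_configs(CONFIGS)]
print(ordered_ids)
```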

docs/Submodules/IntelOwl/api_app/views.py

PythonReportActionViewSet

Bases: GenericViewSet

A base view set for handling actions related to plugin reports.

This view set provides methods for killing and retrying plugin reports, and requires users to have appropriate permissions based on the IsObjectUserOrSameOrgPermission.

Attributes:

| Name | Type | Description |
|---|---|---|
| permission_classes | list | List of permission classes to apply. |

Methods:

- get_queryset: Returns the queryset of reports based on the model class.
- get_object: Retrieves a specific report object by job_id and report_id.
- perform_kill: Kills a running plugin by terminating its Celery task and marking it as killed.
- perform_retry: Retries a failed or killed plugin run.
- kill: Handles the endpoint to kill a specific report.
- retry: Handles the endpoint to retry a specific report.

docs/Submodules/IntelOwl/api_app/views.py

report_model

abstractmethod classmethod property

Abstract property that should return the model class for the report.

Subclasses must implement this property to specify the model class for the reports being handled by this view set.

Returns:

| Type | Description |
|---|---|
| Type[AbstractReport] | The model class for the report. |

Raises:

| Type | Description |
|---|---|
| NotImplementedError | If not overridden by a subclass. |

get_object(job_id, report_id)

Retrieves a specific report object by job_id and report_id.

Overrides DRF's default get_object method to fetch a report object based on job_id and report_id, and checks the permissions for the object.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| job_id | int | The ID of the job associated with the report. | required |
| report_id | int | The ID of the report. | required |

Returns:

| Name | Type | Description |
|---|---|---|
| AbstractReport | AbstractReport | The report object. |

Raises:

| Type | Description |
|---|---|
| NotFound | If the report does not exist. |
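A two-key lookup of this kind can be sketched without Django REST framework. The example below is a minimal illustration under stated assumptions: `get_report`, the local `NotFound` class, the `can_access` callback standing in for the object permission check, and the dictionary keys `id` and `job_id` are all hypothetical.

```python
class NotFound(Exception):
    """Local stand-in for the framework's NotFound exception."""


def get_report(reports, job_id, report_id, can_access=lambda report: True):
    """Return the report matching both IDs, or raise NotFound.

    `can_access` is a hypothetical hook for the per-object permission check.
    """
    for report in reports:
        if report["job_id"] == job_id and report["id"] == report_id:
            if not can_access(report):
                raise PermissionError("not allowed to access this report")
            return report
    raise NotFound(f"report {report_id} not found for job {job_id}")


REPORTS = [
    {"id": 10, "job_id": 1, "status": "running"},
    {"id": 11, "job_id": 2, "status": "failed"},
]

found = get_report(REPORTS, job_id=2, report_id=11)
print(found["status"])
```

Requiring both IDs to match means a valid report ID paired with the wrong job ID is treated as missing rather than returned.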

docs/Submodules/IntelOwl/api_app/views.py

get_queryset()

Returns the queryset of reports based on the model class.

Filters the queryset to return all instances of the report model.

Returns:

| Name | Type | Description |
|---|---|---|
| QuerySet | | A queryset of all report instances. |

docs/Submodules/IntelOwl/api_app/views.py

kill(request, job_id, report_id)

Kills a specific report by terminating its Celery task and marking it as killed.

This endpoint handles the PATCH request to kill a report if its status is running or pending.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| request | HttpRequest | The request object containing the HTTP PATCH request. | required |
| job_id | int | The ID of the job associated with the report. | required |
| report_id | int | The ID of the report. | required |

Returns:

| Name | Type | Description |
|---|---|---|
| Response | | HTTP 204 No Content if successful. |

Raises:

| Type | Description |
|---|---|
| ValidationError | If the report is not in a valid state for killing. |
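The state check behind this endpoint can be sketched in plain Python. This is an illustration under stated assumptions rather than the view's actual code: `kill_report`, the local `ValidationError` class, and the lowercase status strings are hypothetical, and the Celery task termination is left out.

```python
class ValidationError(Exception):
    """Local stand-in for the framework's ValidationError exception."""


# Assumption: status values are represented as lowercase strings.
KILLABLE_STATUSES = {"running", "pending"}


def kill_report(report):
    """Mark a running or pending report as killed and return the HTTP status code."""
    if report["status"] not in KILLABLE_STATUSES:
        raise ValidationError(
            f"report is in status {report['status']!r} and cannot be killed"
        )
    # The real view also terminates the Celery task; omitted in this sketch.
    report["status"] = "killed"
    return 204


report = {"id": 10, "job_id": 1, "status": "running"}
status_code = kill_report(report)
print(status_code, report["status"])
```

A second call on the same report raises ValidationError, because "killed" is no longer one of the killable statuses.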

docs/Submodules/IntelOwl/api_app/views.py

perform_kill(report)

staticmethod

Kills a running plugin by terminating its Celery task and marking it as killed.

This method is a callback for performing additional actions after a kill operation, including updating the report status and cleaning up the associated job.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| report | AbstractReport | The report to be killed. | required |

docs/Submodules/IntelOwl/api_app/views.py

perform_retry(report)

staticmethod

Retries a failed or killed plugin run.

This method clears the errors and re-runs the plugin with the same arguments. It fetches the appropriate task signature and schedules the job again.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| report | AbstractReport | The report to be retried. | required |

Raises: