diff --git a/docs-website/docusaurus.config.js b/docs-website/docusaurus.config.js

index 52392d23b39579..3b6db41466bbe1 100644

--- a/docs-website/docusaurus.config.js

+++ b/docs-website/docusaurus.config.js

@@ -283,6 +283,9 @@ module.exports = {

path: "src/pages",

mdxPageComponent: "@theme/MDXPage",

},

+ googleTagManager: {

+ containerId: 'GTM-WK28RLTG',

+ },

},

],

],

@@ -296,7 +299,6 @@ module.exports = {

routeBasePath: "/docs/graphql",

},

],

- // '@docusaurus/plugin-google-gtag',

// [

// require.resolve("@easyops-cn/docusaurus-search-local"),

// {

diff --git a/docs-website/sidebars.js b/docs-website/sidebars.js

index 2b60906b794a29..f07f1aa031bc73 100644

--- a/docs-website/sidebars.js

+++ b/docs-website/sidebars.js

@@ -69,24 +69,38 @@ module.exports = {

type: "category",

items: [

{

- type: "doc",

- id: "docs/managed-datahub/observe/freshness-assertions",

- className: "saasOnly",

- },

- {

- type: "doc",

- id: "docs/managed-datahub/observe/volume-assertions",

- className: "saasOnly",

- },

- {

- type: "doc",

- id: "docs/managed-datahub/observe/custom-sql-assertions",

- className: "saasOnly",

+ label: "Assertions",

+ type: "category",

+ link: {

+ type: "doc",

+ id: "docs/managed-datahub/observe/assertions",

+ },

+ items: [

+ {

+ type: "doc",

+ id: "docs/managed-datahub/observe/freshness-assertions",

+ className: "saasOnly",

+ },

+ {

+ type: "doc",

+ id: "docs/managed-datahub/observe/volume-assertions",

+ className: "saasOnly",

+ },

+ {

+ type: "doc",

+ id: "docs/managed-datahub/observe/custom-sql-assertions",

+ className: "saasOnly",

+ },

+ {

+ type: "doc",

+ id: "docs/managed-datahub/observe/column-assertions",

+ className: "saasOnly",

+ },

+ ],

},

{

type: "doc",

- id: "docs/managed-datahub/observe/column-assertions",

- className: "saasOnly",

+ id: "docs/managed-datahub/observe/data-contract",

},

],

},

diff --git a/docs/managed-datahub/observe/assertions.md b/docs/managed-datahub/observe/assertions.md

new file mode 100644

index 00000000000000..f6d47ebfb30e2d

--- /dev/null

+++ b/docs/managed-datahub/observe/assertions.md

@@ -0,0 +1,48 @@

+# Assertions

+

+:::note Contract Monitoring Support

+Currently we support Snowflake, Databricks, Redshift, and BigQuery for out-of-the-box contract monitoring as part of Acryl Observe.

+:::

+

+An assertion is **a data quality test that finds data that violates a specified rule.**

+Assertions serve as the building blocks of [Data Contracts](/docs/managed-datahub/observe/data-contract.md) – this is how we verify the contract is met.

+

+## How to Create and Run Assertions

+

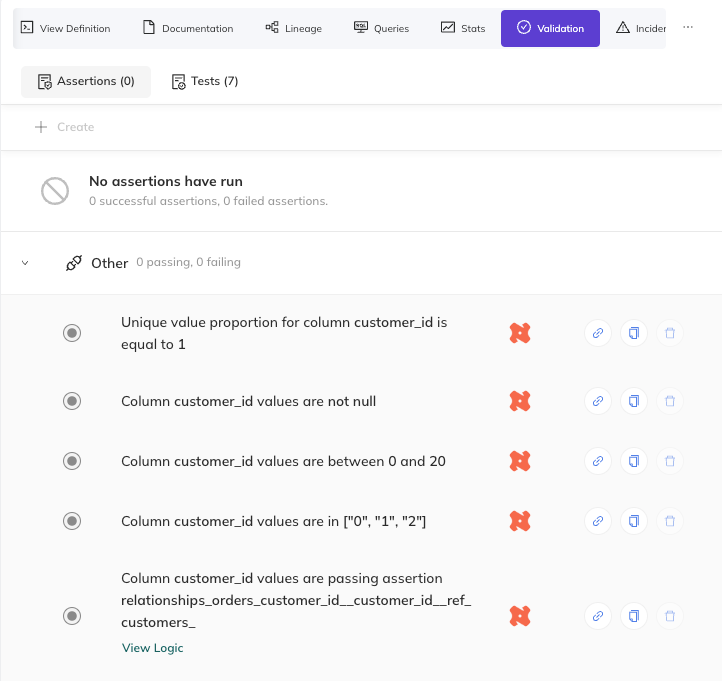

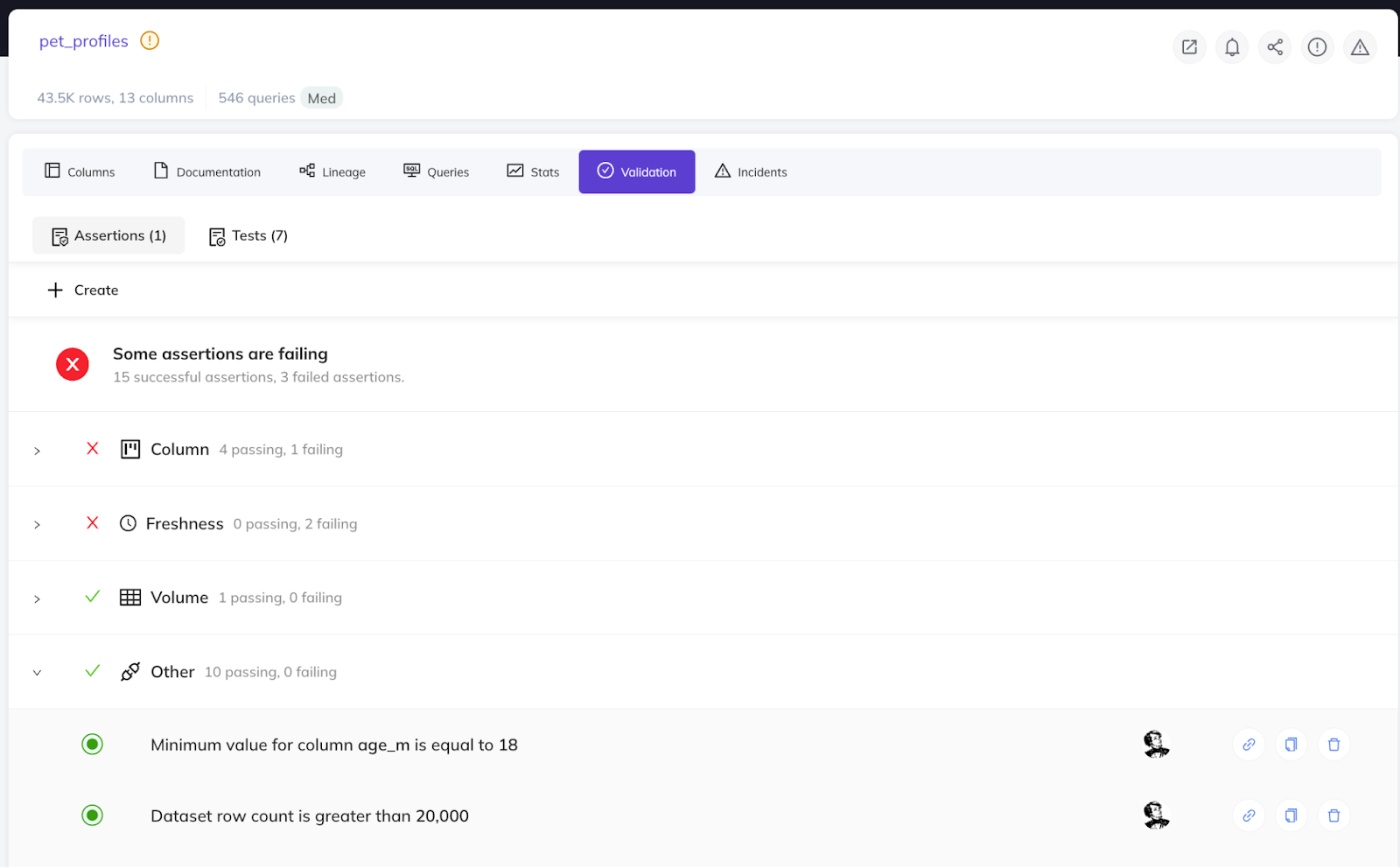

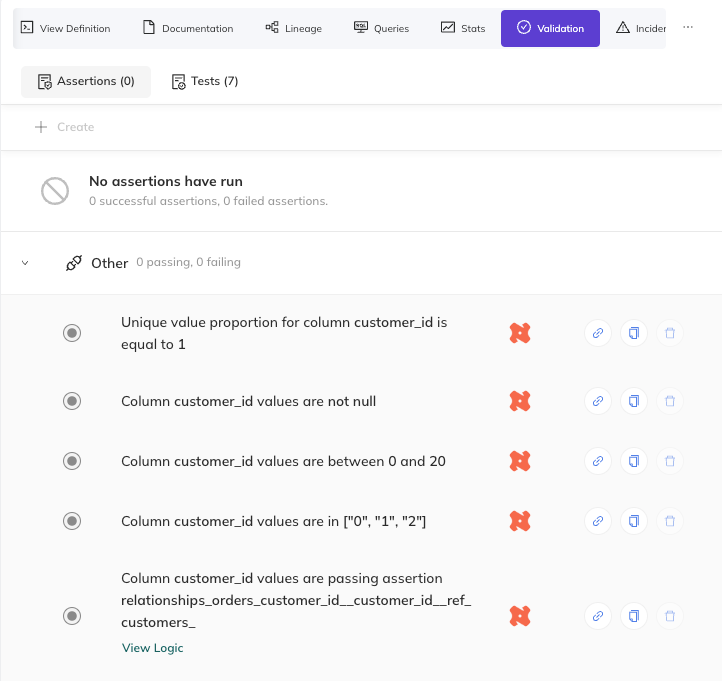

+Data quality tests (a.k.a. assertions) can be created and run by Acryl or ingested from a 3rd party tool.

+

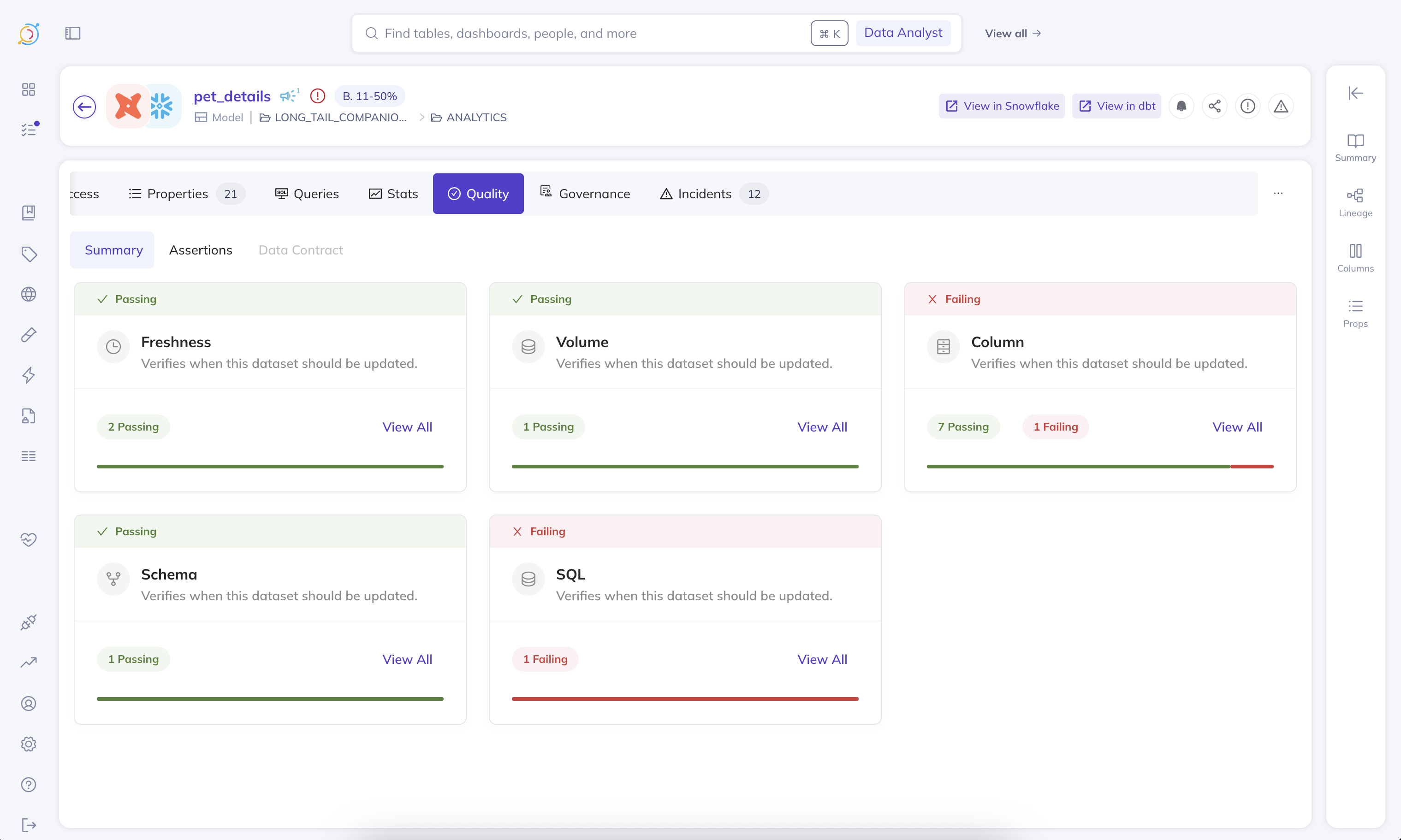

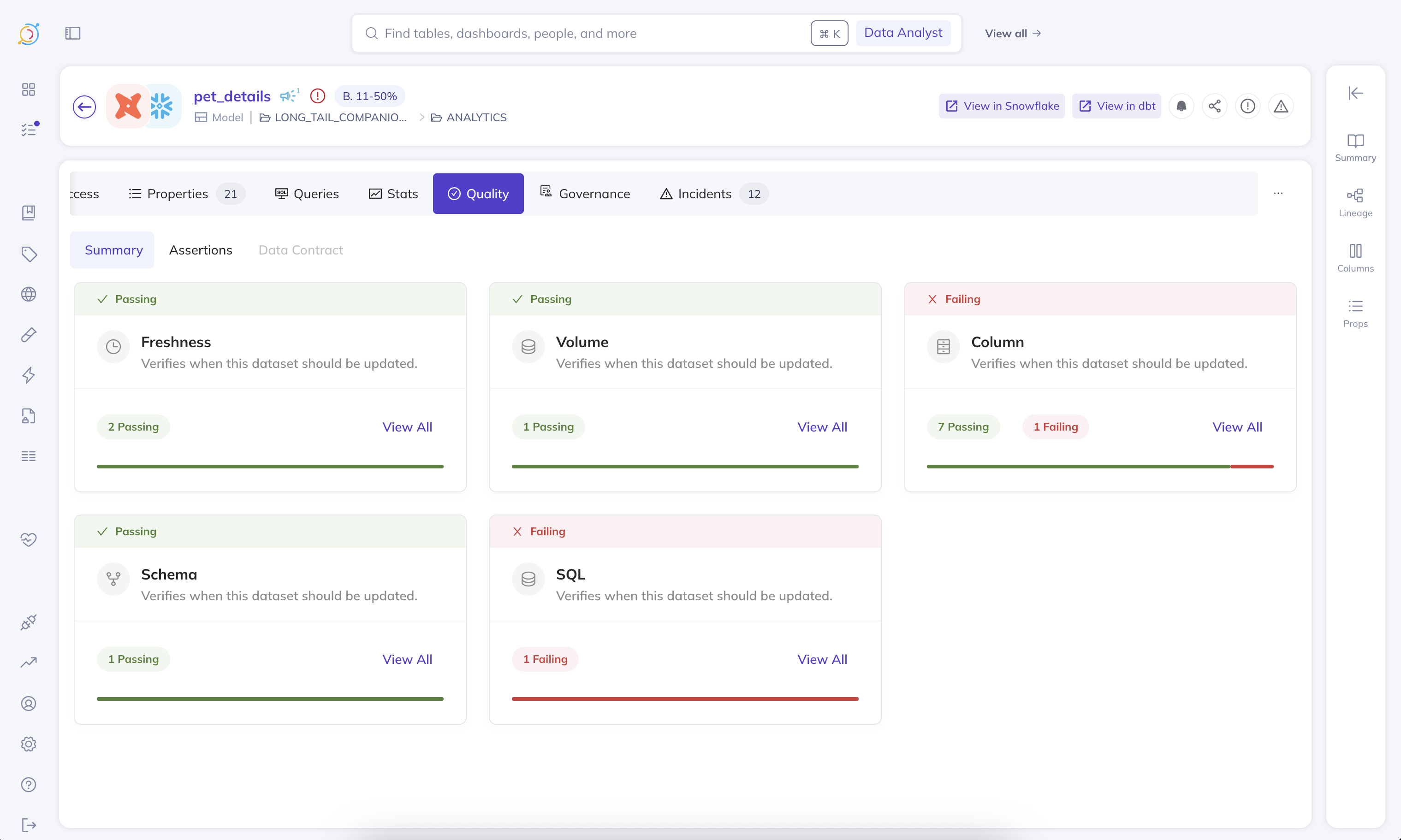

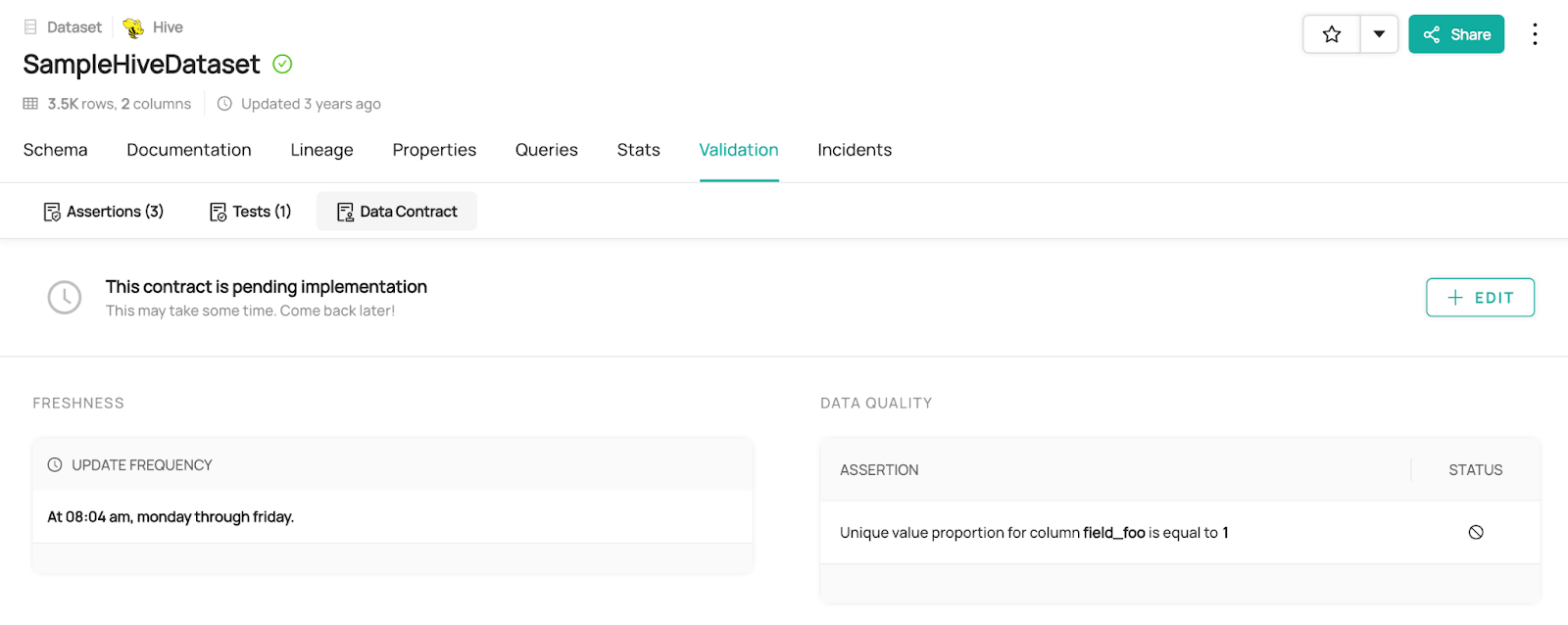

+### Acryl Observe

+

+For Acryl-provided assertion runners, we can deploy an agent in your environment to hit your sources and DataHub. Acryl Observe offers out-of-the-box evaluation of the following kinds of assertions:

+

+- [Freshness](/docs/managed-datahub/observe/freshness-assertions.md) (SLAs)

+- [Volume](/docs/managed-datahub/observe/volume-assertions.md)

+- [Custom SQL](/docs/managed-datahub/observe/custom-sql-assertions.md)

+- [Column](/docs/managed-datahub/observe/column-assertions.md)

+

+These can be defined through the DataHub API or the UI.

+

+

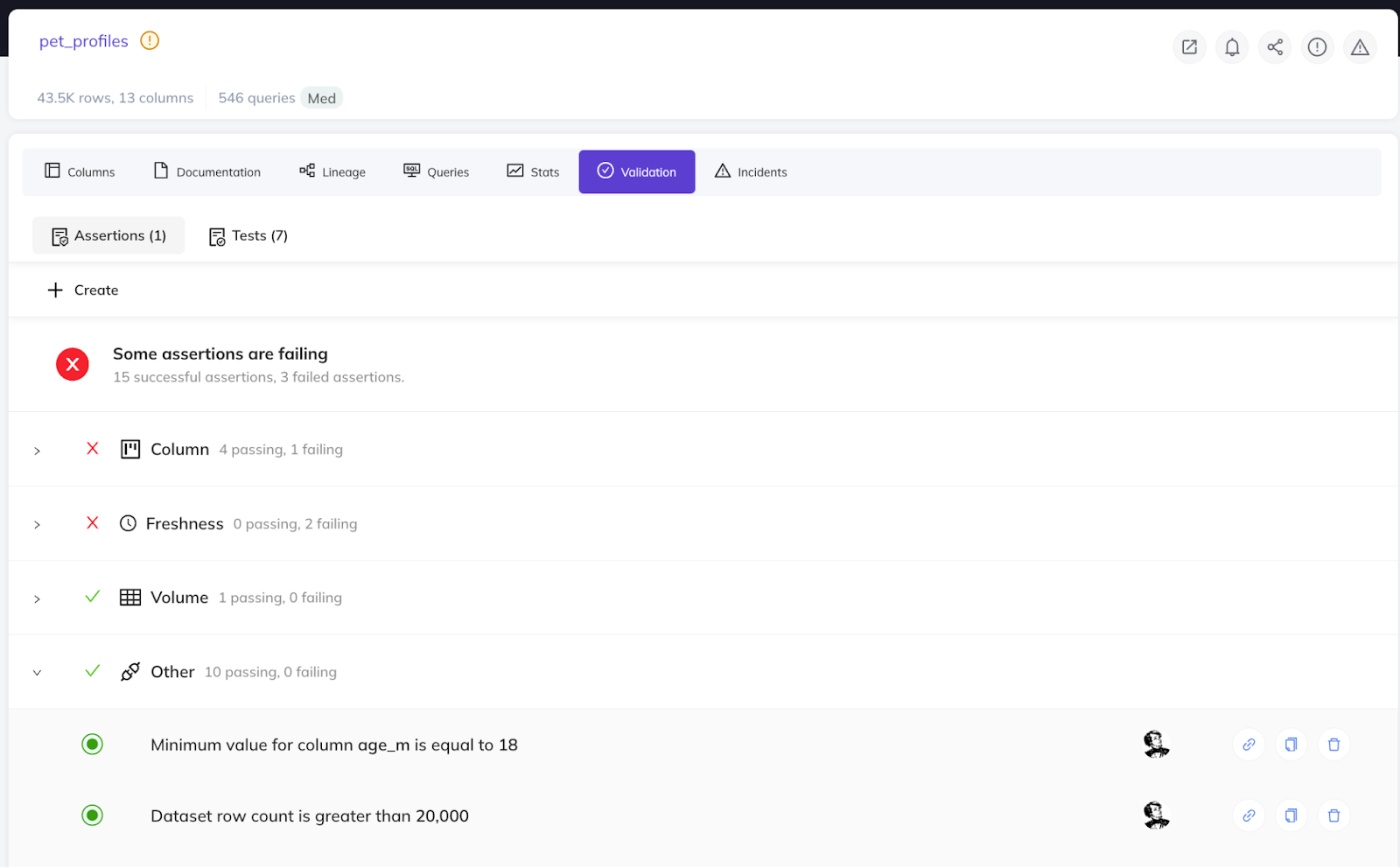

+  +

+

+

+### 3rd Party Runners

+

+You can integrate 3rd party tools as follows:

+

+- [DBT Test](/docs/generated/ingestion/sources/dbt.md#integrating-with-dbt-test)

+- [Great Expectations](../../../metadata-ingestion/integration_docs/great-expectations.md)

+

+If you opt for a 3rd party tool, it will be your responsibility to ensure the assertions are run based on the Data Contract spec stored in DataHub. With 3rd party runners, you can get the Assertion Change events by subscribing to our Kafka topic using the [DataHub Actions Framework](/docs/actions/README.md).

+

+

+## Alerts

+

+Beyond the ability to see the results of the assertion checks (and history of the results) both on the physical asset’s page in the DataHub UI and as the result of DataHub API calls, you can also get notified via [slack messages](/docs/managed-datahub/saas-slack-setup.md) (DMs or to a team channel) based on your [subscription](https://youtu.be/VNNZpkjHG_I?t=79) to an assertion change event. In the future, we’ll also provide the ability to subscribe directly to contracts.

+

+With Acryl Observe, you can get the Assertion Change event by getting API events via [AWS EventBridge](/docs/managed-datahub/operator-guide/setting-up-events-api-on-aws-eventbridge.md) (the availability and simplicity of setup of each solution dependent on your current Acryl setup – chat with your Acryl representative to learn more).

+

+

+## Cost

+

+We provide a plethora of ways to run your assertions, aiming to allow you to use the cheapest possible means to do so and/or the most accurate means to do so, depending on your use case. For example, for Freshness (SLA) assertions, it is relatively cheap to use either their Audit Log or Information Schema as a means to run freshness checks, and we support both of those as well as Last Modified Column, High Watermark Column, and DataHub Operation ([see the docs for more details](/docs/managed-datahub/observe/freshness-assertions.md#3-change-source)).

diff --git a/docs/managed-datahub/observe/data-contract.md b/docs/managed-datahub/observe/data-contract.md

new file mode 100644

index 00000000000000..31bb414b4bf69f

--- /dev/null

+++ b/docs/managed-datahub/observe/data-contract.md

@@ -0,0 +1,119 @@

+# Data Contracts

+

+## What Is a Data Contract

+

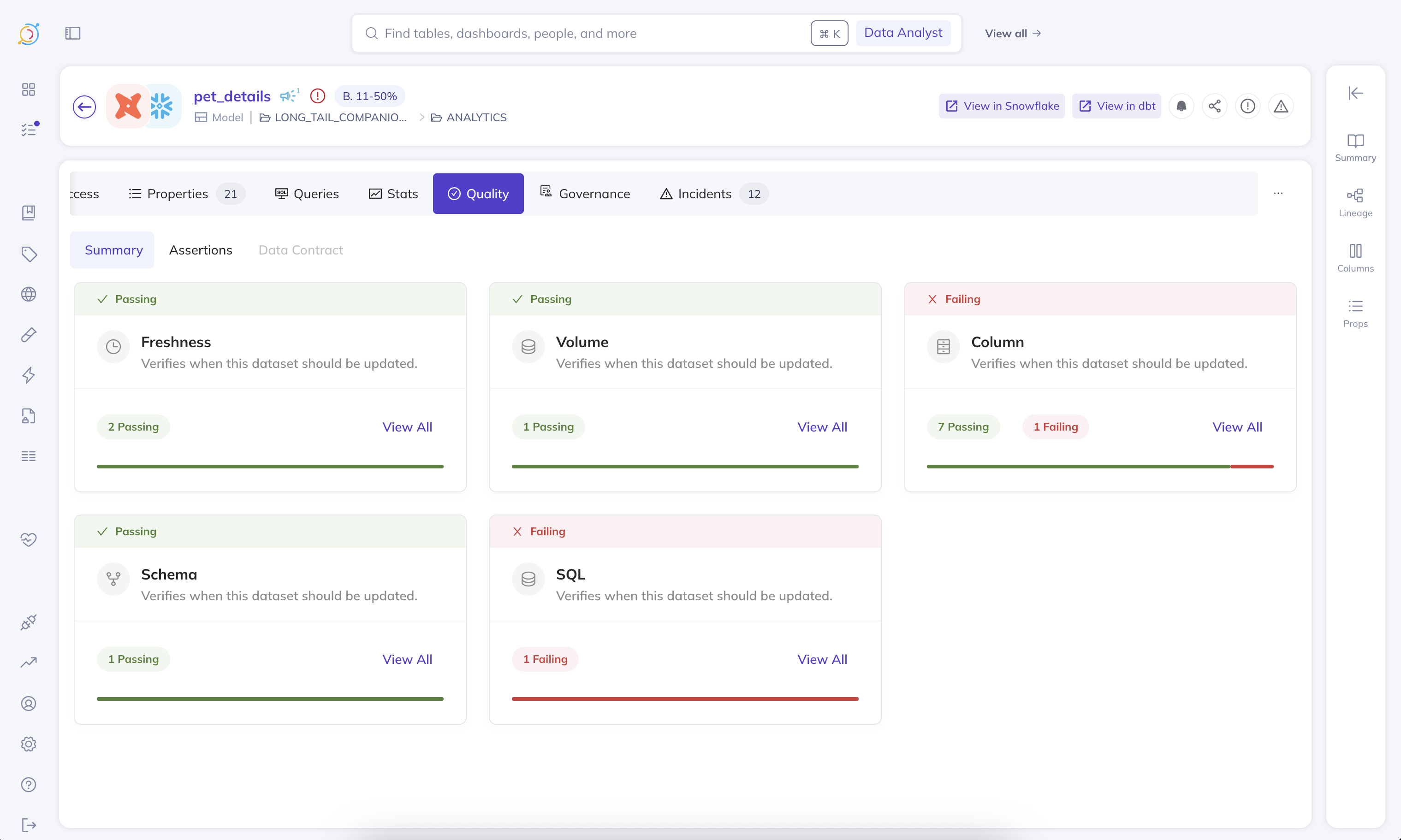

+A Data Contract is **an agreement between a data asset's producer and consumer**, serving as a promise about the quality of the data.

+It often includes [assertions](assertions.md) about the data’s schema, freshness, and data quality.

+

+Some of the key characteristics of a Data Contract are:

+

+- **Verifiable** : based on the actual physical data asset, not its metadata (e.g., schema checks, column-level data checks, and operational SLA-s but not documentation, ownership, and tags).

+- **A set of assertions** : The actual checks against the physical asset to determine a contract’s status (schema, freshness, volume, custom, and column)

+- **Producer oriented** : One contract per physical data asset, owned by the producer.

+

+

+

+Consumer Oriented Data contracts

+We’ve gone with producer-oriented contracts to keep the number of contracts manageable and because we expect consumers to desire a lot of overlap in a given physical asset’s contract. Although, we've heard feedback that consumer-oriented data contracts meet certain needs that producer-oriented contracts do not. For example, having one contract per consumer all on the same physical data asset would allow each consumer to get alerts only when the assertions they care about are violated.We welcome feedback on this in slack!

+

+

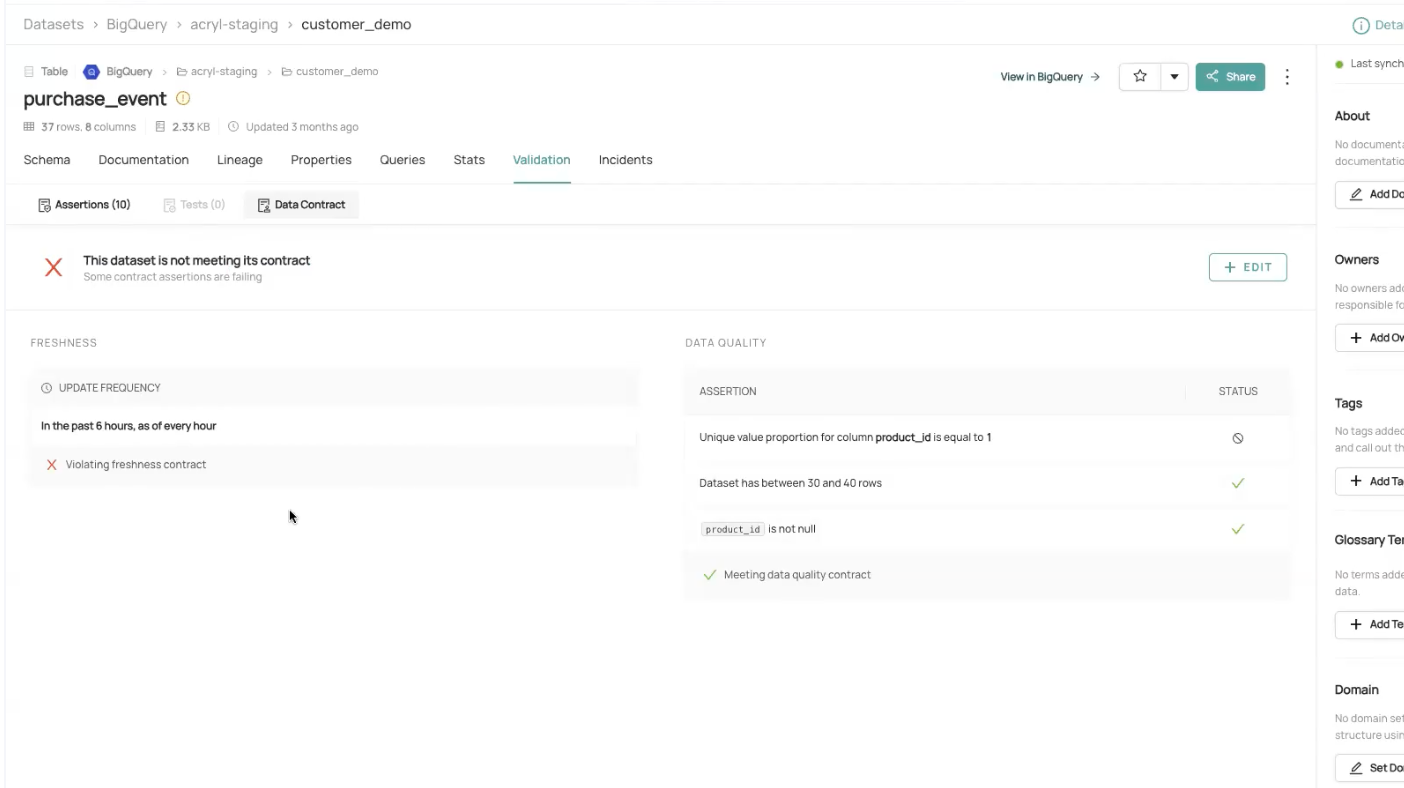

+Below is a screenshot of the Data Contracts UI in DataHub.

+

+

+  +

+

+

+## Data Contract and Assertions

+

+Another way to word our vision of data contracts is **A bundle of verifiable assertions on physical data assets representing a public producer commitment.**

+These can be all the assertions on an asset or only the subset you want publicly promised to consumers. Data Contracts allow you to **promote a selected group of your assertions** as a public promise: if this subset of assertions is not met, the Data Contract is failing.

+

+See docs on [assertions](/docs/managed-datahub/observe/assertions.md) for more details on the types of assertions and how to create and run them.

+

+:::note Ownership

+The owner of the physical data asset is also the owner of the contract and can accept proposed changes and make changes themselves to the contract.

+:::

+

+

+## How to Create Data Contracts

+

+Data Contracts can be created via DataHub CLI (YAML), API, or UI.

+

+### DataHub CLI using YAML

+

+For creation via CLI, it’s a simple CLI upsert command that you can integrate into your CI/CD system to publish your Data Contracts and any change to them.

+

+1. Define your data contract.

+

+```yaml

+{{ inline /metadata-ingestion/examples/library/create_data_contract.yml show_path_as_comment }}

+```

+

+2. Use the CLI to create the contract by running the below command.

+

+```shell

+datahub datacontract upsert -f contract_definition.yml

+```

+

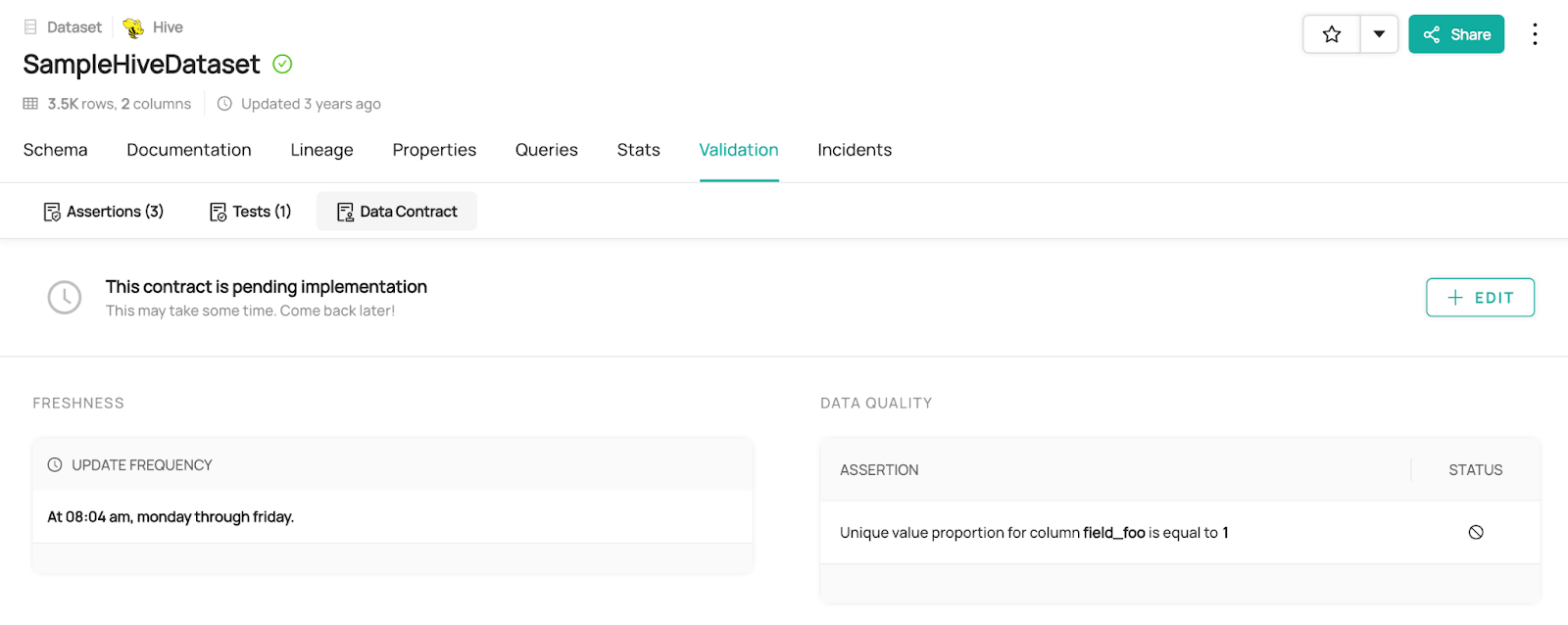

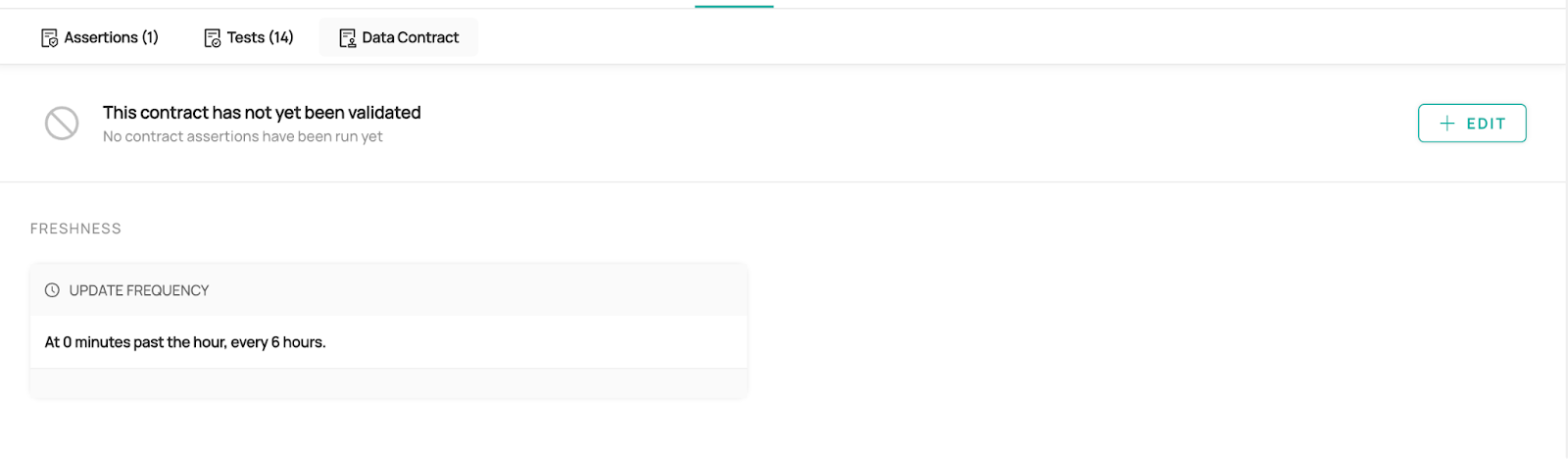

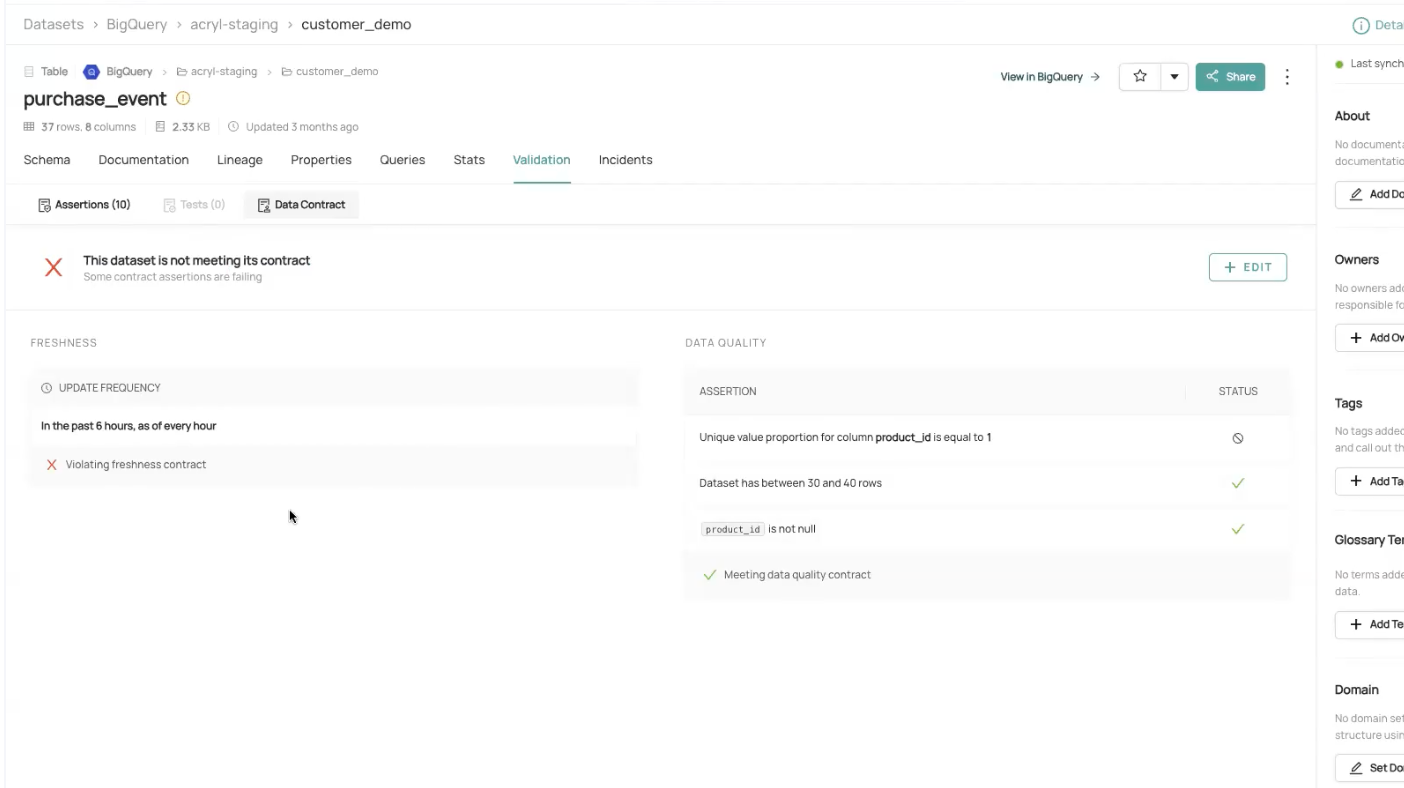

+3. Now you can see your contract on the UI.

+

+

+  +

+

+

+

+### UI

+

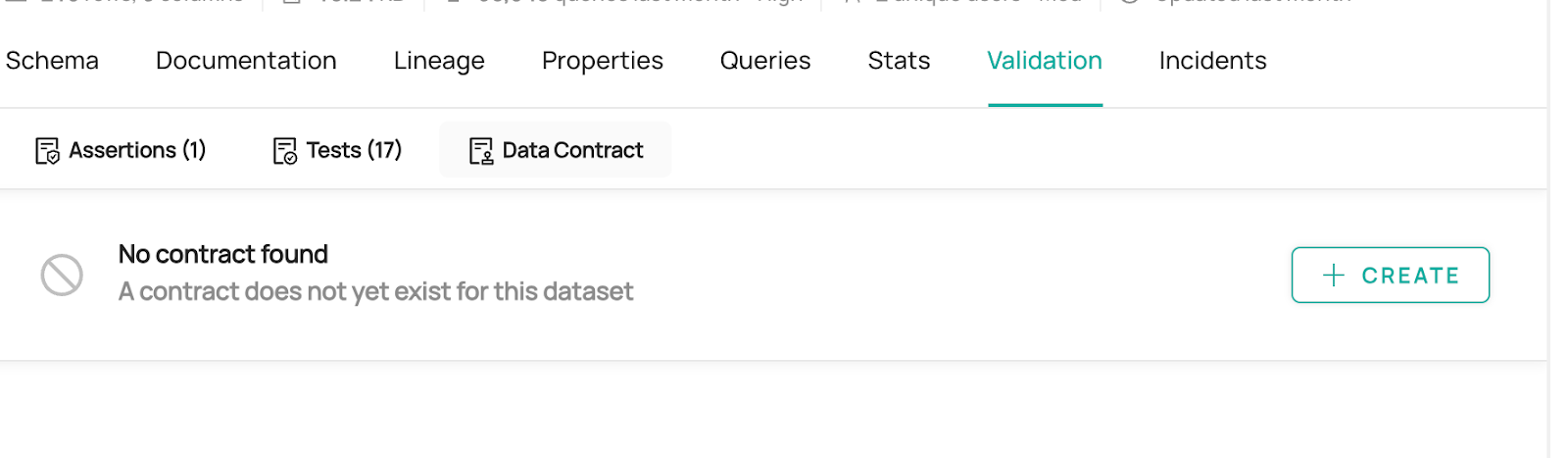

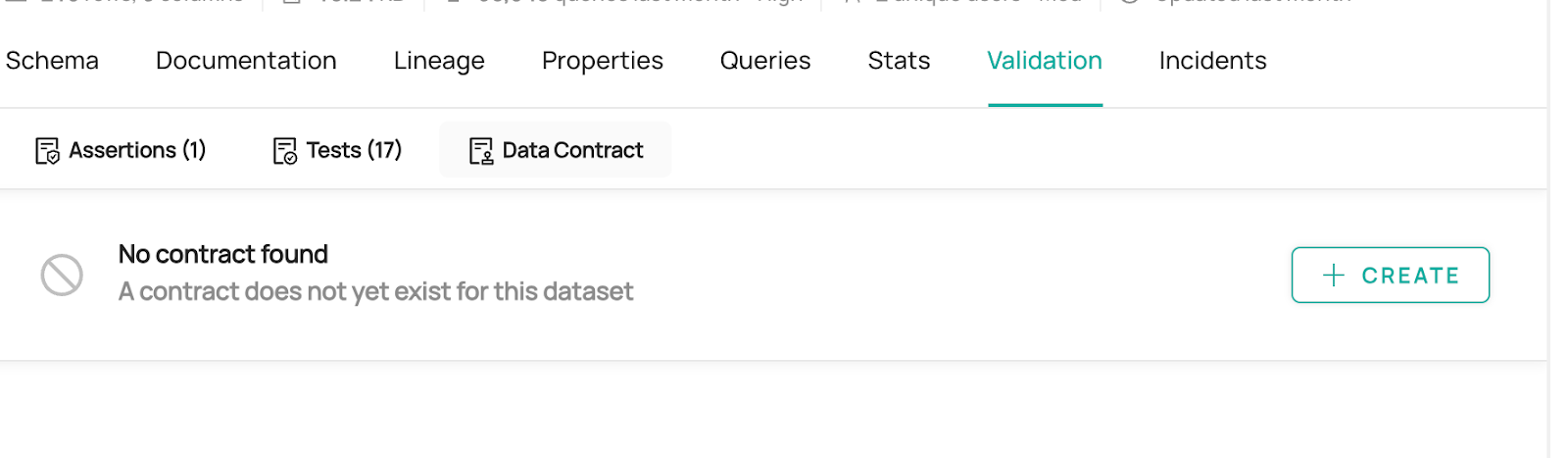

+1. Navigate to the Dataset Profile for the dataset you wish to create a contract for

+2. Under the **Validations** > **Data Contracts** tab, click **Create**.

+

+

+  +

+

+

+

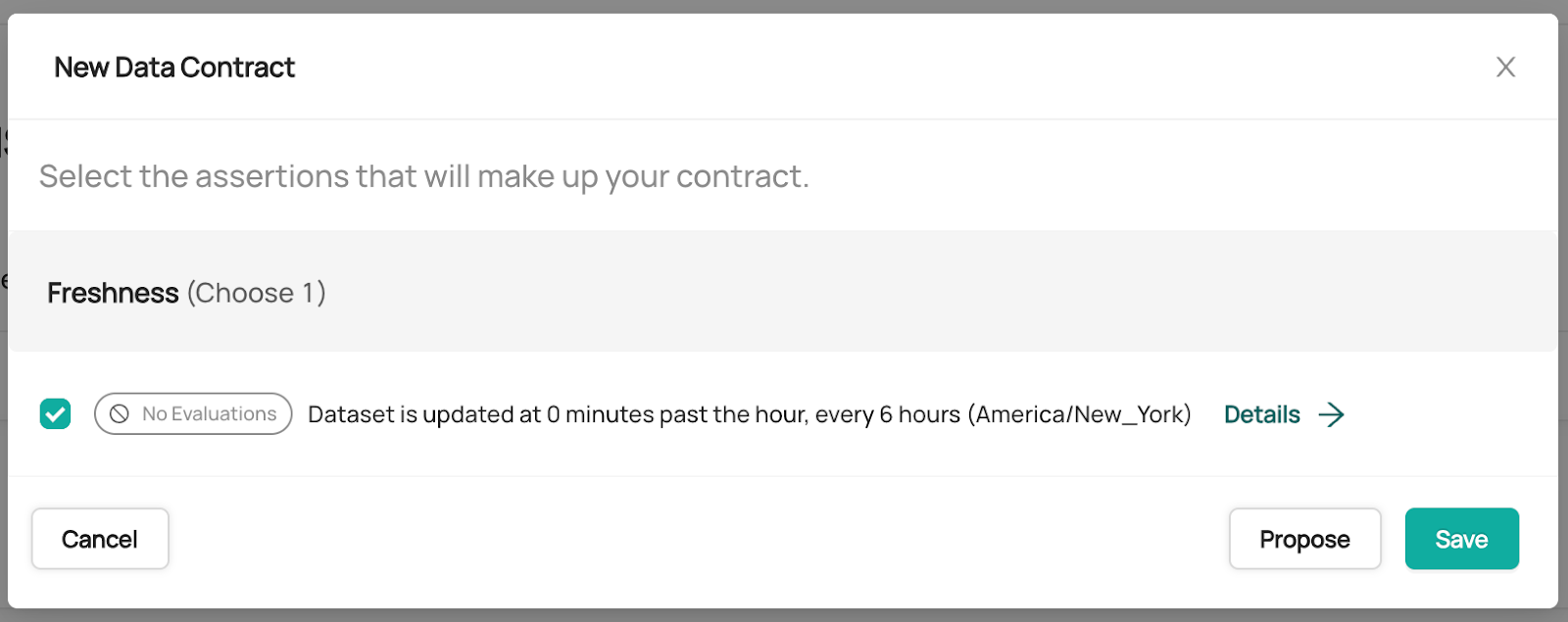

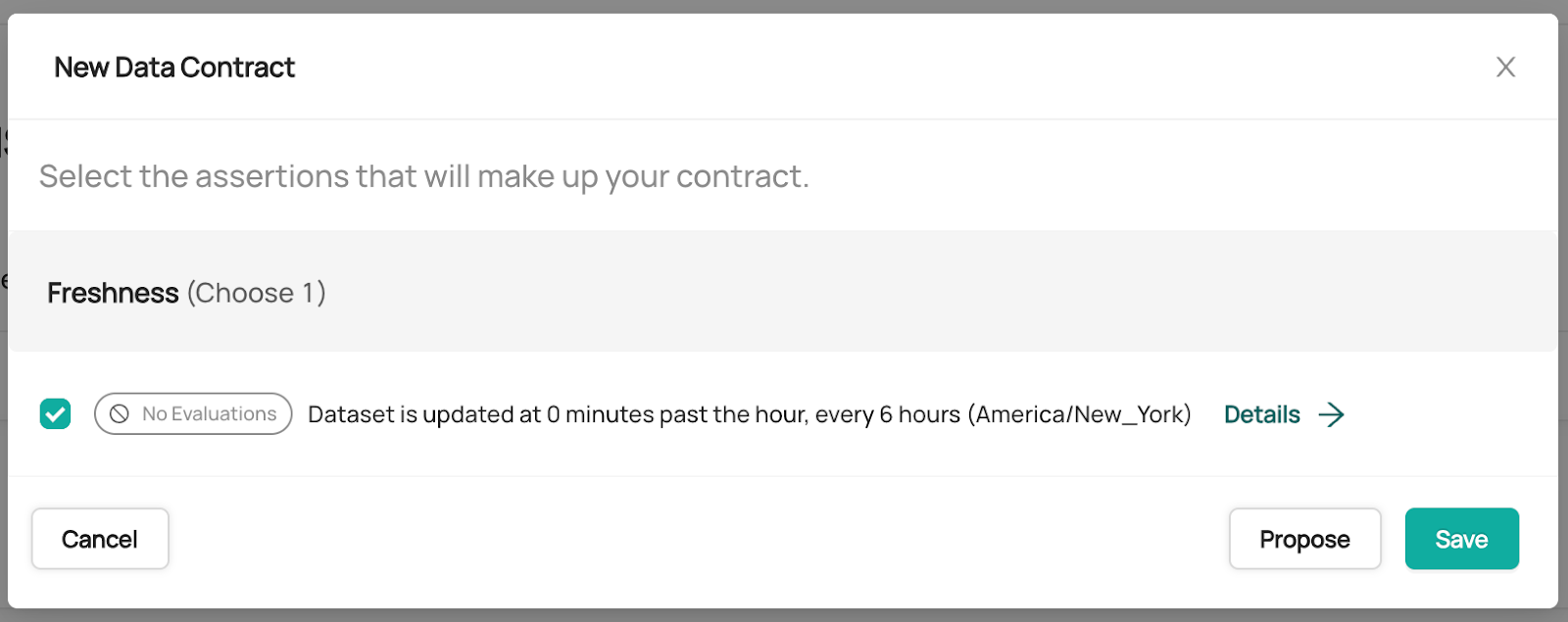

+3. Select the assertions you wish to be included in the Data Contract.

+

+

+  +

+

+

+

+:::note Create Data Contracts via UI

+When creating a Data Contract via UI, the Freshness, Schema, and Data Quality assertions must be created first.

+:::

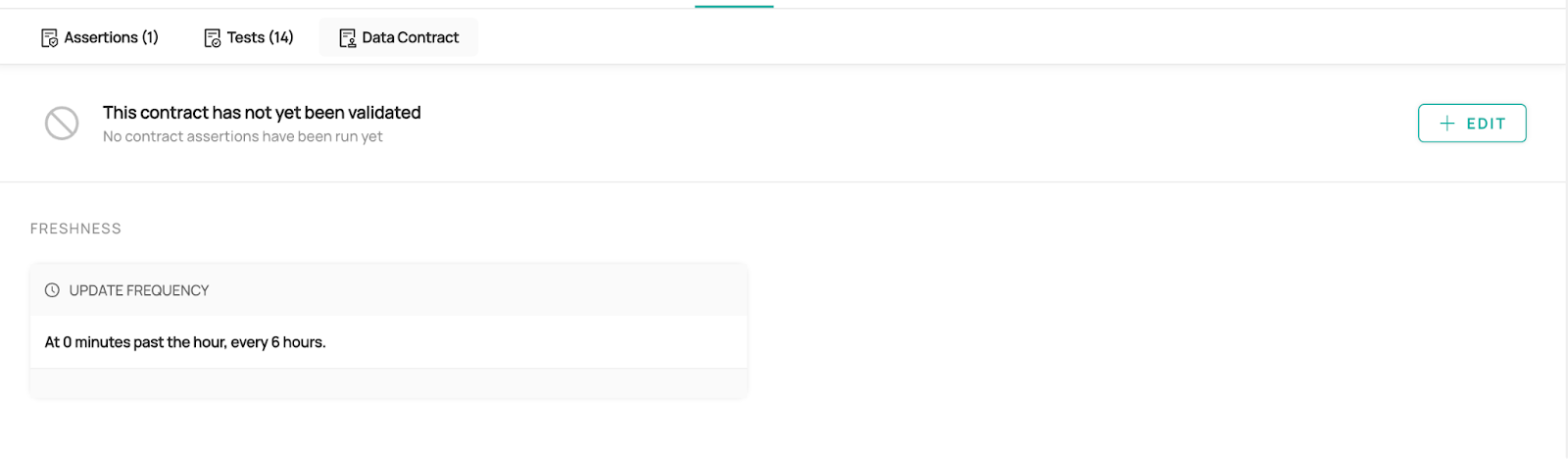

+4. Now you can see it in the UI.

+

+

+  +

+

+

+

+### API

+

+_API guide on creating data contract is coming soon!_

+

+

+## How to Run Data Contracts

+

+Running Data Contracts is dependent on running the contract’s assertions and getting the results on Datahub. Using Acryl Observe (available on SAAS), you can schedule assertions on Datahub itself. Otherwise, you can run your assertions outside of Datahub and have the results published back to Datahub.

+

+Datahub integrates nicely with DBT Test and Great Expectations, as described below. For other 3rd party assertion runners, you’ll need to use our APIs to publish the assertion results back to our platform.

+

+### DBT Test

+

+During DBT Ingestion, we pick up the dbt `run_results` file, which contains the dbt test run results, and translate it into assertion runs. [See details here.](/docs/generated/ingestion/sources/dbt.md#module-dbt)

+

+

+  +

+

+

+

+

+### Great Expectations

+

+For Great Expectations, you can integrate the **DataHubValidationAction** directly into your Great Expectations Checkpoint in order to have the assertion (aka. expectation) results to Datahub. [See the guide here](../../../metadata-ingestion/integration_docs/great-expectations.md).

+

+

+  +

+

diff --git a/metadata-ingestion/examples/library/create_data_contract.yml b/metadata-ingestion/examples/library/create_data_contract.yml

new file mode 100644

index 00000000000000..774b3ffd7ebb28

--- /dev/null

+++ b/metadata-ingestion/examples/library/create_data_contract.yml

@@ -0,0 +1,39 @@

+# id: sample_data_contract # Optional: if not provided, an id will be generated

+entity: urn:li:dataset:(urn:li:dataPlatform:hive,SampleHiveDataset,PROD)

+version: 1

+freshness:

+ type: cron

+ cron: "4 8 * * 1-5"

+data_quality:

+ - type: unique

+ column: field_foo

+## here's an example of how you'd define the schema

+# schema:

+# type: json-schema

+# json-schema:

+# type: object

+# properties:

+# field_foo:

+# type: string

+# native_type: VARCHAR(100)

+# field_bar:

+# type: boolean

+# native_type: boolean

+# field_documents:

+# type: array

+# items:

+# type: object

+# properties:

+# docId:

+# type: object

+# properties:

+# docPolicy:

+# type: object

+# properties:

+# policyId:

+# type: integer

+# fileId:

+# type: integer

+# required:

+# - field_bar

+# - field_documents

diff --git a/metadata-ingestion/src/datahub/ingestion/source/dbt/dbt_common.py b/metadata-ingestion/src/datahub/ingestion/source/dbt/dbt_common.py

index 549ca32757e23e..21e4b0b5d7ae5d 100644

--- a/metadata-ingestion/src/datahub/ingestion/source/dbt/dbt_common.py

+++ b/metadata-ingestion/src/datahub/ingestion/source/dbt/dbt_common.py

@@ -407,8 +407,12 @@ def validate_include_column_lineage(

def validate_skip_sources_in_lineage(

cls, skip_sources_in_lineage: bool, values: Dict

) -> bool:

- entites_enabled: DBTEntitiesEnabled = values["entities_enabled"]

- if skip_sources_in_lineage and entites_enabled.sources == EmitDirective.YES:

+ entites_enabled: Optional[DBTEntitiesEnabled] = values.get("entities_enabled")

+ if (

+ skip_sources_in_lineage

+ and entites_enabled

+ and entites_enabled.sources == EmitDirective.YES

+ ):

raise ValueError(

"When `skip_sources_in_lineage` is enabled, `entities_enabled.sources` must be set to NO."

)

diff --git a/metadata-ingestion/tests/unit/test_dbt_source.py b/metadata-ingestion/tests/unit/test_dbt_source.py

index c38ae0db28f3e6..5d7826b7ed6520 100644

--- a/metadata-ingestion/tests/unit/test_dbt_source.py

+++ b/metadata-ingestion/tests/unit/test_dbt_source.py

@@ -197,6 +197,30 @@ def test_dbt_entity_emission_configuration():

DBTCoreConfig.parse_obj(config_dict)

+def test_dbt_config_skip_sources_in_lineage():

+ with pytest.raises(

+ ValidationError,

+ match="skip_sources_in_lineage.*entities_enabled.sources.*set to NO",

+ ):

+ config_dict = {

+ "manifest_path": "dummy_path",

+ "catalog_path": "dummy_path",

+ "target_platform": "dummy_platform",

+ "skip_sources_in_lineage": True,

+ }

+ config = DBTCoreConfig.parse_obj(config_dict)

+

+ config_dict = {

+ "manifest_path": "dummy_path",

+ "catalog_path": "dummy_path",

+ "target_platform": "dummy_platform",

+ "skip_sources_in_lineage": True,

+ "entities_enabled": {"sources": "NO"},

+ }

+ config = DBTCoreConfig.parse_obj(config_dict)

+ assert config.skip_sources_in_lineage is True

+

+

def test_dbt_s3_config():

# test missing aws config

config_dict: dict = {

diff --git a/metadata-service/openapi-servlet/build.gradle b/metadata-service/openapi-servlet/build.gradle

index 32c40c31df42d7..04ed75d8800c27 100644

--- a/metadata-service/openapi-servlet/build.gradle

+++ b/metadata-service/openapi-servlet/build.gradle

@@ -9,6 +9,7 @@ dependencies {

implementation project(':metadata-service:auth-impl')

implementation project(':metadata-service:factories')

implementation project(':metadata-service:schema-registry-api')

+ implementation project (':metadata-service:openapi-servlet:models')

implementation externalDependency.reflections

implementation externalDependency.springBoot

diff --git a/metadata-service/openapi-servlet/models/build.gradle b/metadata-service/openapi-servlet/models/build.gradle

new file mode 100644

index 00000000000000..e4100b2d094e04

--- /dev/null

+++ b/metadata-service/openapi-servlet/models/build.gradle

@@ -0,0 +1,16 @@

+plugins {

+ id 'java'

+}

+

+dependencies {

+ implementation project(':entity-registry')

+ implementation project(':metadata-operation-context')

+ implementation project(':metadata-auth:auth-api')

+

+ implementation externalDependency.jacksonDataBind

+ implementation externalDependency.httpClient

+

+ compileOnly externalDependency.lombok

+

+ annotationProcessor externalDependency.lombok

+}

\ No newline at end of file

diff --git a/metadata-service/openapi-servlet/models/src/main/java/io/datahubproject/openapi/client/OpenApiClient.java b/metadata-service/openapi-servlet/models/src/main/java/io/datahubproject/openapi/client/OpenApiClient.java

new file mode 100644

index 00000000000000..267d95f1dddbfb

--- /dev/null

+++ b/metadata-service/openapi-servlet/models/src/main/java/io/datahubproject/openapi/client/OpenApiClient.java

@@ -0,0 +1,88 @@

+package io.datahubproject.openapi.client;

+

+import com.fasterxml.jackson.databind.ObjectMapper;

+import io.datahubproject.metadata.context.OperationContext;

+import io.datahubproject.openapi.v2.models.BatchGetUrnRequest;

+import io.datahubproject.openapi.v2.models.BatchGetUrnResponse;

+import java.io.ByteArrayOutputStream;

+import java.io.IOException;

+import java.io.InputStream;

+import java.nio.charset.StandardCharsets;

+import lombok.Getter;

+import lombok.extern.slf4j.Slf4j;

+import org.apache.hc.client5.http.classic.methods.HttpPost;

+import org.apache.hc.client5.http.impl.classic.CloseableHttpClient;

+import org.apache.hc.client5.http.impl.classic.HttpClientBuilder;

+import org.apache.hc.core5.http.ClassicHttpResponse;

+import org.apache.hc.core5.http.ContentType;

+import org.apache.hc.core5.http.HttpHeaders;

+import org.apache.hc.core5.http.io.entity.StringEntity;

+

+/** TODO: This should be autogenerated from our own OpenAPI */

+@Slf4j

+public class OpenApiClient {

+

+ private final CloseableHttpClient httpClient;

+ private final String gmsHost;

+ private final int gmsPort;

+ private final boolean useSsl;

+ @Getter private final OperationContext systemOperationContext;

+

+ private static final String OPENAPI_PATH = "/openapi/v2/entity/batch/";

+ private static final ObjectMapper OBJECT_MAPPER = new ObjectMapper();

+

+ public OpenApiClient(

+ String gmsHost, int gmsPort, boolean useSsl, OperationContext systemOperationContext) {

+ this.gmsHost = gmsHost;

+ this.gmsPort = gmsPort;

+ this.useSsl = useSsl;

+ httpClient = HttpClientBuilder.create().build();

+ this.systemOperationContext = systemOperationContext;

+ }

+

+ public BatchGetUrnResponse getBatchUrnsSystemAuth(String entityName, BatchGetUrnRequest request) {

+ return getBatchUrns(

+ entityName,

+ request,

+ systemOperationContext.getSystemAuthentication().get().getCredentials());

+ }

+

+ public BatchGetUrnResponse getBatchUrns(

+ String entityName, BatchGetUrnRequest request, String authCredentials) {

+ String url =

+ (useSsl ? "https://" : "http://") + gmsHost + ":" + gmsPort + OPENAPI_PATH + entityName;

+ HttpPost httpPost = new HttpPost(url);

+ httpPost.setHeader(HttpHeaders.AUTHORIZATION, authCredentials);

+ try {

+ httpPost.setEntity(

+ new StringEntity(

+ OBJECT_MAPPER.writeValueAsString(request), ContentType.APPLICATION_JSON));

+ httpPost.setHeader("Content-type", "application/json");

+ return httpClient.execute(httpPost, OpenApiClient::mapResponse);

+ } catch (IOException e) {

+ log.error("Unable to execute Batch Get request for urn: " + request.getUrns(), e);

+ throw new RuntimeException(e);

+ }

+ }

+

+ private static BatchGetUrnResponse mapResponse(ClassicHttpResponse response) {

+ BatchGetUrnResponse serializedResponse;

+ try {

+ ByteArrayOutputStream result = new ByteArrayOutputStream();

+ InputStream contentStream = response.getEntity().getContent();

+ byte[] buffer = new byte[1024];

+ int length = contentStream.read(buffer);

+ while (length > 0) {

+ result.write(buffer, 0, length);

+ length = contentStream.read(buffer);

+ }

+ serializedResponse =

+ OBJECT_MAPPER.readValue(

+ result.toString(StandardCharsets.UTF_8), BatchGetUrnResponse.class);

+ } catch (IOException e) {

+ log.error("Wasn't able to convert response into expected type.", e);

+ throw new RuntimeException(e);

+ }

+ return serializedResponse;

+ }

+}

diff --git a/metadata-service/openapi-servlet/models/src/main/java/io/datahubproject/openapi/v2/models/BatchGetUrnRequest.java b/metadata-service/openapi-servlet/models/src/main/java/io/datahubproject/openapi/v2/models/BatchGetUrnRequest.java

new file mode 100644

index 00000000000000..02afe8d40dd528

--- /dev/null

+++ b/metadata-service/openapi-servlet/models/src/main/java/io/datahubproject/openapi/v2/models/BatchGetUrnRequest.java

@@ -0,0 +1,30 @@

+package io.datahubproject.openapi.v2.models;

+

+import com.fasterxml.jackson.annotation.JsonInclude;

+import com.fasterxml.jackson.annotation.JsonProperty;

+import com.fasterxml.jackson.databind.annotation.JsonDeserialize;

+import io.swagger.v3.oas.annotations.media.Schema;

+import java.io.Serializable;

+import java.util.List;

+import lombok.Builder;

+import lombok.EqualsAndHashCode;

+import lombok.Value;

+

+@Value

+@EqualsAndHashCode

+@Builder

+@JsonDeserialize(builder = BatchGetUrnRequest.BatchGetUrnRequestBuilder.class)

+@JsonInclude(JsonInclude.Include.NON_NULL)

+public class BatchGetUrnRequest implements Serializable {

+ @JsonProperty("urns")

+ @Schema(required = true, description = "The list of urns to get.")

+ List urns;

+

+ @JsonProperty("aspectNames")

+ @Schema(required = true, description = "The list of aspect names to get")

+ List aspectNames;

+

+ @JsonProperty("withSystemMetadata")

+ @Schema(required = true, description = "Whether or not to retrieve system metadata")

+ boolean withSystemMetadata;

+}

diff --git a/metadata-service/openapi-servlet/models/src/main/java/io/datahubproject/openapi/v2/models/BatchGetUrnResponse.java b/metadata-service/openapi-servlet/models/src/main/java/io/datahubproject/openapi/v2/models/BatchGetUrnResponse.java

new file mode 100644

index 00000000000000..628733e4fd4ae7

--- /dev/null

+++ b/metadata-service/openapi-servlet/models/src/main/java/io/datahubproject/openapi/v2/models/BatchGetUrnResponse.java

@@ -0,0 +1,20 @@

+package io.datahubproject.openapi.v2.models;

+

+import com.fasterxml.jackson.annotation.JsonInclude;

+import com.fasterxml.jackson.annotation.JsonProperty;

+import com.fasterxml.jackson.databind.annotation.JsonDeserialize;

+import io.swagger.v3.oas.annotations.media.Schema;

+import java.io.Serializable;

+import java.util.List;

+import lombok.Builder;

+import lombok.Value;

+

+@Value

+@Builder

+@JsonInclude(JsonInclude.Include.NON_NULL)

+@JsonDeserialize(builder = BatchGetUrnResponse.BatchGetUrnResponseBuilder.class)

+public class BatchGetUrnResponse implements Serializable {

+ @JsonProperty("entities")

+ @Schema(description = "List of entity responses")

+ List entities;

+}

diff --git a/metadata-service/openapi-servlet/src/main/java/io/datahubproject/openapi/v2/models/GenericEntity.java b/metadata-service/openapi-servlet/models/src/main/java/io/datahubproject/openapi/v2/models/GenericEntity.java

similarity index 82%

rename from metadata-service/openapi-servlet/src/main/java/io/datahubproject/openapi/v2/models/GenericEntity.java

rename to metadata-service/openapi-servlet/models/src/main/java/io/datahubproject/openapi/v2/models/GenericEntity.java

index f1e965ca05464f..cb049c5ba131a8 100644

--- a/metadata-service/openapi-servlet/src/main/java/io/datahubproject/openapi/v2/models/GenericEntity.java

+++ b/metadata-service/openapi-servlet/models/src/main/java/io/datahubproject/openapi/v2/models/GenericEntity.java

@@ -2,22 +2,34 @@

import com.datahub.util.RecordUtils;

import com.fasterxml.jackson.annotation.JsonInclude;

+import com.fasterxml.jackson.annotation.JsonProperty;

import com.fasterxml.jackson.databind.ObjectMapper;

import com.linkedin.data.template.RecordTemplate;

import com.linkedin.mxe.SystemMetadata;

import com.linkedin.util.Pair;

+import io.swagger.v3.oas.annotations.media.Schema;

import java.io.IOException;

import java.nio.charset.StandardCharsets;

import java.util.Map;

import java.util.stream.Collectors;

+import lombok.AccessLevel;

+import lombok.AllArgsConstructor;

import lombok.Builder;

import lombok.Data;

+import lombok.NoArgsConstructor;

@Data

@Builder

@JsonInclude(JsonInclude.Include.NON_NULL)

+@NoArgsConstructor(force = true, access = AccessLevel.PRIVATE)

+@AllArgsConstructor

public class GenericEntity {

+ @JsonProperty("urn")

+ @Schema(description = "Urn of the entity")

private String urn;

+

+ @JsonProperty("aspects")

+ @Schema(description = "Map of aspect name to aspect")

private Map aspects;

public static class GenericEntityBuilder {

diff --git a/metadata-service/openapi-servlet/src/main/java/io/datahubproject/openapi/v2/models/GenericRelationship.java b/metadata-service/openapi-servlet/models/src/main/java/io/datahubproject/openapi/v2/models/GenericRelationship.java

similarity index 100%

rename from metadata-service/openapi-servlet/src/main/java/io/datahubproject/openapi/v2/models/GenericRelationship.java

rename to metadata-service/openapi-servlet/models/src/main/java/io/datahubproject/openapi/v2/models/GenericRelationship.java

diff --git a/metadata-service/openapi-servlet/src/main/java/io/datahubproject/openapi/v2/models/GenericScrollResult.java b/metadata-service/openapi-servlet/models/src/main/java/io/datahubproject/openapi/v2/models/GenericScrollResult.java

similarity index 100%

rename from metadata-service/openapi-servlet/src/main/java/io/datahubproject/openapi/v2/models/GenericScrollResult.java

rename to metadata-service/openapi-servlet/models/src/main/java/io/datahubproject/openapi/v2/models/GenericScrollResult.java

diff --git a/metadata-service/openapi-servlet/src/main/java/io/datahubproject/openapi/v2/models/GenericTimeseriesAspect.java b/metadata-service/openapi-servlet/models/src/main/java/io/datahubproject/openapi/v2/models/GenericTimeseriesAspect.java

similarity index 100%

rename from metadata-service/openapi-servlet/src/main/java/io/datahubproject/openapi/v2/models/GenericTimeseriesAspect.java

rename to metadata-service/openapi-servlet/models/src/main/java/io/datahubproject/openapi/v2/models/GenericTimeseriesAspect.java

diff --git a/metadata-service/openapi-servlet/src/main/java/io/datahubproject/openapi/v2/models/PatchOperation.java b/metadata-service/openapi-servlet/models/src/main/java/io/datahubproject/openapi/v2/models/PatchOperation.java

similarity index 100%

rename from metadata-service/openapi-servlet/src/main/java/io/datahubproject/openapi/v2/models/PatchOperation.java

rename to metadata-service/openapi-servlet/models/src/main/java/io/datahubproject/openapi/v2/models/PatchOperation.java

diff --git a/metadata-service/openapi-servlet/src/main/java/io/datahubproject/openapi/v2/controller/EntityController.java b/metadata-service/openapi-servlet/src/main/java/io/datahubproject/openapi/v2/controller/EntityController.java

index 7a11d60a567f95..55bb8ebe625ae9 100644

--- a/metadata-service/openapi-servlet/src/main/java/io/datahubproject/openapi/v2/controller/EntityController.java

+++ b/metadata-service/openapi-servlet/src/main/java/io/datahubproject/openapi/v2/controller/EntityController.java

@@ -38,6 +38,8 @@

import com.linkedin.mxe.SystemMetadata;

import com.linkedin.util.Pair;

import io.datahubproject.metadata.context.OperationContext;

+import io.datahubproject.openapi.v2.models.BatchGetUrnRequest;

+import io.datahubproject.openapi.v2.models.BatchGetUrnResponse;

import io.datahubproject.openapi.v2.models.GenericEntity;

import io.datahubproject.openapi.v2.models.GenericScrollResult;

import io.swagger.v3.oas.annotations.Operation;

@@ -45,9 +47,11 @@

import java.lang.reflect.InvocationTargetException;

import java.net.URISyntaxException;

import java.nio.charset.StandardCharsets;

+import java.util.ArrayList;

import java.util.HashSet;

import java.util.List;

import java.util.Map;

+import java.util.Optional;

import java.util.Set;

import java.util.stream.Collectors;

import java.util.stream.Stream;

@@ -140,8 +144,45 @@ public ResponseEntity> getEntities(

}

@Tag(name = "Generic Entities")

- @GetMapping(value = "/{entityName}/{entityUrn}", produces = MediaType.APPLICATION_JSON_VALUE)

- @Operation(summary = "Get an entity")

+ @PostMapping(value = "/batch/{entityName}", produces = MediaType.APPLICATION_JSON_VALUE)

+ @Operation(summary = "Get a batch of entities")

+ public ResponseEntity getEntityBatch(

+ @PathVariable("entityName") String entityName, @RequestBody BatchGetUrnRequest request)

+ throws URISyntaxException {

+

+ if (restApiAuthorizationEnabled) {

+ Authentication authentication = AuthenticationContext.getAuthentication();

+ EntitySpec entitySpec = entityRegistry.getEntitySpec(entityName);

+ request

+ .getUrns()

+ .forEach(

+ entityUrn ->

+ checkAuthorized(

+ authorizationChain,

+ authentication.getActor(),

+ entitySpec,

+ entityUrn,

+ ImmutableList.of(PoliciesConfig.GET_ENTITY_PRIVILEGE.getType())));

+ }

+

+ return ResponseEntity.of(

+ Optional.of(

+ BatchGetUrnResponse.builder()

+ .entities(

+ new ArrayList<>(

+ toRecordTemplates(

+ request.getUrns().stream()

+ .map(UrnUtils::getUrn)

+ .collect(Collectors.toList()),

+ new HashSet<>(request.getAspectNames()),

+ request.isWithSystemMetadata())))

+ .build()));

+ }

+

+ @Tag(name = "Generic Entities")

+ @GetMapping(

+ value = "/{entityName}/{entityUrn:urn:li:.+}",

+ produces = MediaType.APPLICATION_JSON_VALUE)

public ResponseEntity getEntity(

@PathVariable("entityName") String entityName,

@PathVariable("entityUrn") String entityUrn,

diff --git a/settings.gradle b/settings.gradle

index 57d7d1dbc36f22..27928ae7446a1b 100644

--- a/settings.gradle

+++ b/settings.gradle

@@ -68,3 +68,4 @@ include 'metadata-service:services'

include 'metadata-service:configuration'

include ':metadata-jobs:common'

include ':metadata-operation-context'

+include ':metadata-service:openapi-servlet:models'

+

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+