
App crashing while converting video frames to Images iOS #216

Comments

@rohitphogat19 It's not a bug on our end. The problem is the way you save the video frames.

@plutoless I was able to convert the frames to images. But how do I write these frames to an AVAssetWriter to save them as a video file? I converted AgoraVideoRawData to CVPixelBuffer in Swift, and the images look fine. My code is:

```swift
func mediaDataPlugin(_ mediaDataPlugin: AgoraMediaDataPlugin, didCapturedVideoRawData videoRawData: AgoraVideoRawData) -> AgoraVideoRawData {
    let imageHeight = Int(videoRawData.height)
    let imageWidth = Int(videoRawData.width)
    let yStrideValue = Int(videoRawData.yStride)
    let uvStrideValue = Int(videoRawData.uStride)
    // Size of the interleaved NV12 chroma plane: (height / 2) rows of (2 * uStride) bytes
    let uvBufferLength = imageHeight * uvStrideValue

    // Source I420 planes from Agora (UnsafeMutablePointer<Int8>)
    let uBuffer = videoRawData.uBuffer
    let vBuffer = videoRawData.vBuffer
    let yBuffer = videoRawData.yBuffer

    // Interleave the separate U and V planes into one CbCr plane (NV12)
    let uvBuffer = UnsafeMutablePointer<Int8>.allocate(capacity: uvBufferLength)
    var uv = 0, u = 0
    while uv < uvBufferLength {
        uvBuffer[uv] = uBuffer![u]
        uvBuffer[uv + 1] = vBuffer![u]
        uv += 2
        u += 1
    }

    // Per-plane base addresses, sizes and row strides
    var planeBaseAddressValues = [UnsafeMutableRawPointer(yBuffer), UnsafeMutableRawPointer(uvBuffer)]
    let planeBaseAddress = UnsafeMutablePointer<UnsafeMutableRawPointer?>.allocate(capacity: 2)
    planeBaseAddress.initialize(from: &planeBaseAddressValues, count: 2)

    var planeWidthValues: [Int] = [imageWidth, imageWidth / 2]
    let planeWidth = UnsafeMutablePointer<Int>.allocate(capacity: 2)
    planeWidth.initialize(from: &planeWidthValues, count: 2)

    var planeHeightValues: [Int] = [imageHeight, imageHeight / 2]
    let planeHeight = UnsafeMutablePointer<Int>.allocate(capacity: 2)
    planeHeight.initialize(from: &planeHeightValues, count: 2)

    let planeBytesPerRowValues: [Int] = [yStrideValue, uvStrideValue * 2]
    let planeBytesPerRow = UnsafeMutablePointer<Int>.allocate(capacity: 2)
    planeBytesPerRow.initialize(from: planeBytesPerRowValues, count: 2)

    // Wraps yBuffer and uvBuffer in a CVPixelBuffer without copying the pixel data
    var pixelBuffer: CVPixelBuffer? = nil
    let result = CVPixelBufferCreateWithPlanarBytes(kCFAllocatorDefault, imageWidth, imageHeight, kCVPixelFormatType_420YpCbCr8BiPlanarVideoRange, nil, 0, 2, planeBaseAddress, planeWidth, planeHeight, planeBytesPerRow, nil, nil, nil, &pixelBuffer)
    if result != kCVReturnSuccess {
        print("Unable to create cvpixelbuffer \(result)")
    }
    guard let buffer = pixelBuffer else {
        return videoRawData
    }

    self.frameCounts += 1
    self.handleAndWriteBuffer(buffer: buffer, renderTime: videoRawData.renderTimeMs, frameCount: self.frameCounts)

    // Release the temporary plane descriptors and the UV plane
    // (the CVPixelBuffer created above still points at uvBuffer)
    planeHeight.deinitialize(count: 2)
    planeWidth.deinitialize(count: 2)
    planeBytesPerRow.deinitialize(count: 2)
    planeBaseAddress.deinitialize(count: 2)
    uvBuffer.deinitialize(count: uvBufferLength)
    planeBaseAddress.deallocate()
    planeWidth.deallocate()
    planeHeight.deallocate()
    planeBytesPerRow.deallocate()
    uvBuffer.deallocate()

    return videoRawData
}

private func handleAndWriteBuffer(buffer: CVPixelBuffer, renderTime: Int64, frameCount: Int) {
    if frameCount == 1 {
        // First frame: create the writer, input and adaptor, and start the session at this frame's time
        let videoPath = self.getFileFinalPath(directory: "Recordings", fileName: self.fileName)
        let writer = try! AVAssetWriter(outputURL: videoPath!, fileType: .mov)

        var videoSettings = [String: Any]()
        videoSettings.updateValue(1280, forKey: AVVideoHeightKey)
        videoSettings.updateValue(720, forKey: AVVideoWidthKey)
        videoSettings.updateValue(AVVideoCodecType.h264, forKey: AVVideoCodecKey)

        let input = AVAssetWriterInput(mediaType: .video, outputSettings: videoSettings)
        input.expectsMediaDataInRealTime = false
        let adapter = AVAssetWriterInputPixelBufferAdaptor(assetWriterInput: input, sourcePixelBufferAttributes: [kCVPixelBufferPixelFormatTypeKey as String: NSNumber(value: Int32(kCVPixelFormatType_420YpCbCr8BiPlanarVideoRange))])
        if writer.canAdd(input) {
            writer.add(input)
        }

        writer.startWriting()
        // renderTimeMs is in milliseconds, hence timescale 1000
        writer.startSession(atSourceTime: CMTime(value: renderTime, timescale: 1000))

        self.assetWriter = writer
        self.videoWriterInput = input
        self.videoAdapter = adapter
    } else {
        print(self.assetWriter.status.rawValue)
        // Later frames: wrap the pixel buffer in a sample buffer and append it asynchronously
        renderQueue.async {
            if let sampleBuffer = self.getSampleBuffer(buffer: buffer, renderTime: renderTime) {
                let time = CMSampleBufferGetPresentationTimeStamp(sampleBuffer)
                print(time)
                print(time.seconds)
                self.videoWriterInput.append(sampleBuffer)
            }
        }
    }
}
```

The asset writer's status changes from writing to failed after a few frames.
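One way to avoid handing the asset writer memory that is freed or reused after the callback returns is to copy each I420 frame into an NV12 pixel buffer the writer owns, honoring every source and destination stride (U and V strides are handled separately here). This is a minimal sketch, not Agora's or Apple's API: the function name and parameter layout are hypothetical, it uses only raw Swift pointers, and it assumes Agora delivers I420 with chroma subsampled 2x in both directions.

```swift
/// Hypothetical helper: copies one I420 frame (separate Y, U, V planes, each
/// with its own stride) into an NV12 destination (Y plane + interleaved CbCr plane).
/// Raw pointers are used so it works whether the source is Int8 or UInt8 memory.
func copyI420ToNV12(
    width: Int, height: Int,
    srcY: UnsafeRawPointer, srcYStride: Int,
    srcU: UnsafeRawPointer, srcUStride: Int,
    srcV: UnsafeRawPointer, srcVStride: Int,
    dstY: UnsafeMutableRawPointer, dstYBytesPerRow: Int,
    dstUV: UnsafeMutableRawPointer, dstUVBytesPerRow: Int
) {
    // Luma: copy `width` visible bytes per row; both sides may have row padding.
    for row in 0..<height {
        (dstY + row * dstYBytesPerRow).copyMemory(from: srcY + row * srcYStride, byteCount: width)
    }
    // Chroma: half width and half height (rounded up for odd sizes).
    let chromaWidth = (width + 1) / 2
    let chromaHeight = (height + 1) / 2
    for row in 0..<chromaHeight {
        let u = srcU + row * srcUStride
        let v = srcV + row * srcVStride
        let uv = dstUV + row * dstUVBytesPerRow
        for col in 0..<chromaWidth {
            // NV12 stores Cb then Cr for each chroma sample.
            uv.storeBytes(of: u.load(fromByteOffset: col, as: UInt8.self), toByteOffset: 2 * col, as: UInt8.self)
            uv.storeBytes(of: v.load(fromByteOffset: col, as: UInt8.self), toByteOffset: 2 * col + 1, as: UInt8.self)
        }
    }
}
```

In the Agora callback, the destination could come from the adaptor's `pixelBufferPool` via `CVPixelBufferPoolCreatePixelBuffer`, locked with `CVPixelBufferLockBaseAddress`, with planes 0 and 1 obtained from `CVPixelBufferGetBaseAddressOfPlane` and `CVPixelBufferGetBytesPerRowOfPlane`; unlock it before `adapter.append(_:withPresentationTime:)`. The pool is nil until the writer session has started. Because the copy finishes before the callback returns, an asynchronous append no longer depends on `uvBuffer` or Agora's Y plane staying alive.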

@rohitphogat19 Any failure reasons?

@plutoless I just got this from `assetWriter.error`:

```
Optional(Error Domain=AVFoundationErrorDomain Code=-11800 "The operation could not be completed" UserInfo={NSLocalizedFailureReason=An unknown error occurred (-12780), NSLocalizedDescription=The operation could not be completed, NSUnderlyingError=0x281cffa50 {Error Domain=NSOSStatusErrorDomain Code=-12780 "(null)"}})
```

`input.expectsMediaDataInRealTime = false`

@plutoless I tried that as well, without success. Also, the render time in AgoraVideoRawData is on the order of 131252828, while with AVCaptureSession it is in the range below. Maybe that's why the asset writer fails. How can we convert
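A value like 131252828 is just a millisecond timestamp on a different clock; `CMTime(value: renderTime, timescale: 1000)` can represent it, provided `startSession(atSourceTime:)` uses the same clock. What the writer input does expect is presentation times that keep increasing. The sketch below is a hypothetical helper (not part of Agora's or Apple's API) that rebases render times onto the first recorded frame and drops any frame whose time does not move forward.

```swift
/// Hypothetical helper: converts Agora's absolute renderTimeMs values into
/// millisecond offsets from the first recorded frame and rejects frames
/// whose timestamp does not increase.
struct RenderTimeNormalizer {
    private var firstMs: Int64?
    private var lastRelativeMs: Int64?

    /// Returns the offset in milliseconds (timescale 1000), or nil if the frame should be dropped.
    mutating func relativeMs(for renderTimeMs: Int64) -> Int64? {
        guard let first = firstMs else {
            // The first frame defines time zero.
            firstMs = renderTimeMs
            lastRelativeMs = 0
            return 0
        }
        let relative = renderTimeMs - first
        // Drop duplicate or out-of-order timestamps.
        if let last = lastRelativeMs, relative <= last {
            return nil
        }
        lastRelativeMs = relative
        return relative
    }
}
```

With this approach the session would start at `.zero` and each frame would be appended with `CMTime(value: offset, timescale: 1000)`.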

If you believe this is caused by the CMTime, try using

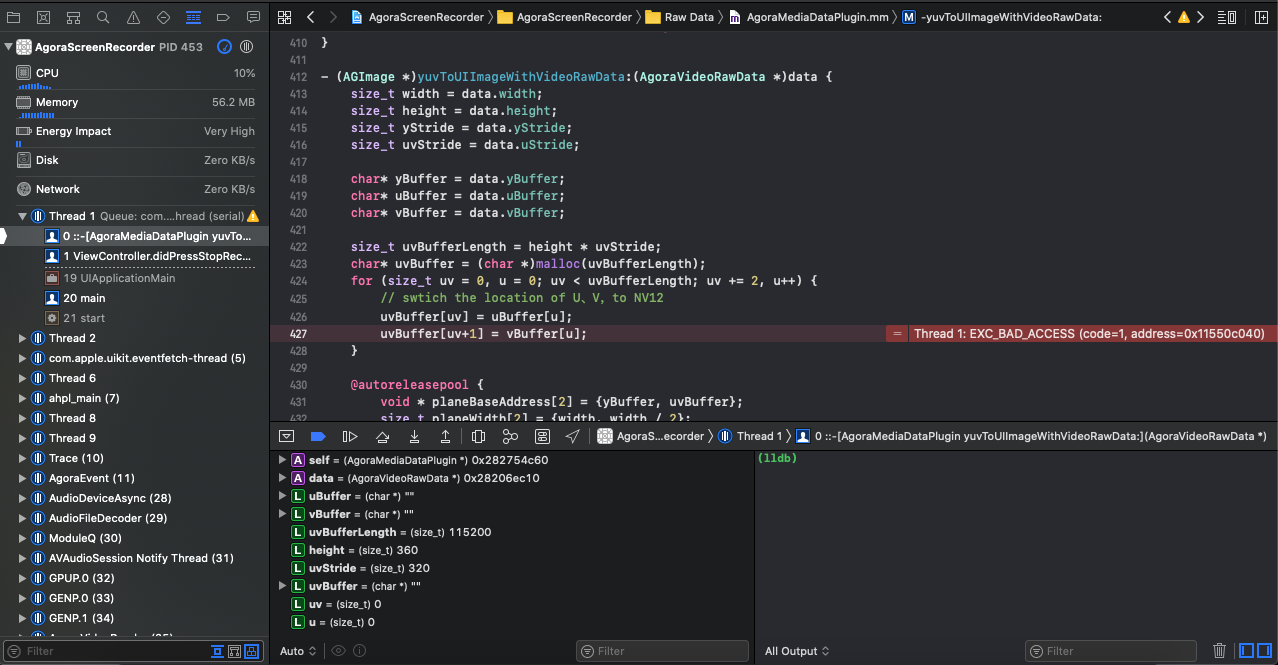

I am using the Agora-Plugin-Raw-Data-API-Objective-C sample to record and save the local video during a video call, but the app crashes when converting all the stored video frames to images. I used and modified the image-creation function in the sample's AgoraMediaDataPlugin.mm file. The crash happens when I press the stop-recording button and the app starts converting all the stored frames to images.

Swift Code:

AgoraMediaDataPlugin.mm code

Crash:

Also, are there any alternatives for saving the local video other than using the raw video data?
